I’m back from a very interesting Workshop on Federated Learning and Analytics that was organized by Peter Kairouz and Brendan McMahan from Google’s federated learning team and was held at Google Seattle.

For the first part of my talk, I covered Bargav’s work on evaluating differentially private machine learning, but I reserved the last few minutes of my talk to address the cognitive dissonance I felt being at a Google meeting on privacy.

I don’t want to offend anyone, and want to preface this by saying I have lots of friends and former students who work for Google, people that I greatly admire and respect – so I want to raise the cognitive dissonance I have being at a “privacy” meeting run by Google, in the hopes that people at Google actually do think about privacy and will able to convince me how wrong I am.

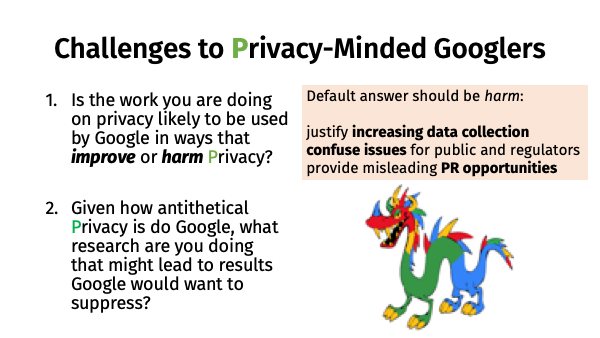

But, it is necessary to address the elephant in the room — we are at a privacy meeting organized by Google.

It may be a cute, colorful, and even non-evil Dragon, but it has a huge appetite!

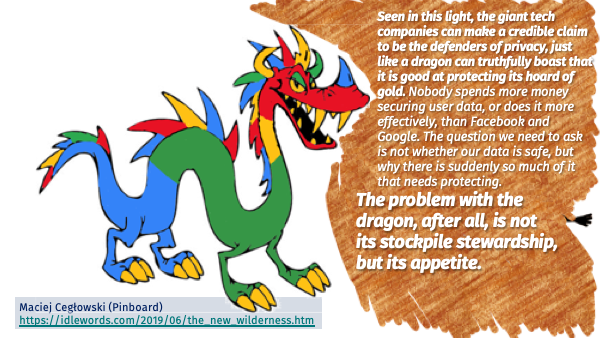

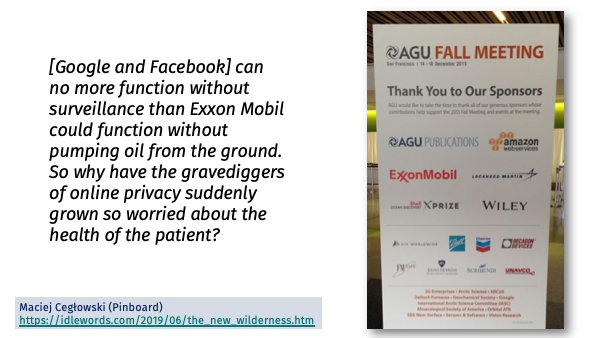

This quote is from an essay by Maciej Cegłowski (the founder of Pinboard), The New Wilderness:

Seen in this light, the giant tech companies can make a credible claim to be the defenders of privacy, just like a dragon can truthfully boast that it is good at protecting its hoard of gold. Nobody spends more money securing user data, or does it more effectively, than Facebook and Google.

The question we need to ask is not whether our data is safe, but why there is suddenly so much of it that needs protecting. The problem with the dragon, after all, is not its stockpile stewardship, but its appetite.

We’re also working hard to challenge the assumption that products need more data to be more helpful. Data minimization is an important privacy principle for us, and we’re encouraged by advances developed by Google A.I. researchers called “federated learning.” It allows Google’s products to work better for everyone without collecting raw data from your device. ... In the future, A.I. will provide even more ways to make products more helpful with less data.

Even as we make privacy and security advances in our own products, we know the kind of privacy we all want as individuals relies on the collaboration and support of many institutions, like legislative bodies and consumer organizations.

Maciej's essay was partly inspired by the recent New York Times opinion piece by Google's CEO: Google’s Sundar Pichai: Privacy Should Not Be a Luxury Good.

If you haven’t read it, you should. It is truly a masterpiece in obfuscation and misdirection.

Pichai somehow makes the argument that privacy and equity are in conflict, and that Google's industrial-scale surveillance model is necessary to make its products accessible to poor people.

The piece also highlights the work the team here has done on federated learning — terrific visibility and recognition of the value of the research, but notably, right before getting into discussion about government privacy regulation.

The question I want to raise for the Google researchers and engineers working on privacy, is what is the actual purpose of this work for the company?

I distinguish small "p" privacy from big "P" Privacy.

Small "p" privacy is about protecting corporate data from outsiders. This used to be called confidentiality. If you only believe in small "p" privacy, there is no difficultly in justifying working on privacy at Google.

Big "P" Privacy views privacy as an individual human right, and even more, as a societal value. Maciej calls this ambient privacy. It is hard to quantify or even understand what we lose when we give up Privacy as individuals and as a society, but the thought of living in a society where everyone is under constant surveillance strikes me as terrifying and dystopian.

So, if you believe in Privacy, and are working on privacy at Google, you should consider whether the purpose (for the company) of your work is to improve or harm Privacy.

Given the nature or Google's business, you should start from the assumption that its purpose is probably to harm Privacy, and be self-critical in your arguments to convince yourself that it is to improve Privacy.

There are many ways technically sound and successful work on improving privacy could be used to actually harm Privacy. For example,

- Technical mechanisms for privacy can be used to jusfify collecting more data. Collecting more data is harmful to Privacy even if it is done in a way that protects individual privacy and ensures that sensitive data about individuals cannot be inferred. And that's the best case — it assumes everything is implemented perfectly with no technical mistakes or bugs in the code, and that parameters are set in ways that provide sufficient privacy, even when this means accepting unsatisfactory utility.

- Privacy work can be used by companies to delay, mislead, and confuse regulators, and to provide public relations opportunities that primarily serve to confuse and mislead the public. There can, of course, be beneficial publicity from privacy research, but its important to realize that not all publicity is good publicity, especially when it comes to how companies use privacy research.

Maciej’s essay draws an analogy between Google’s interest in privacy, and the energy industry’s interest in pollution. I’ll make a slightly different analogy here, focusing on the role of scientists and engineers at these companies.

Of course, comparing Google to poison pushers and destroyers of the planet is grossly unfair.

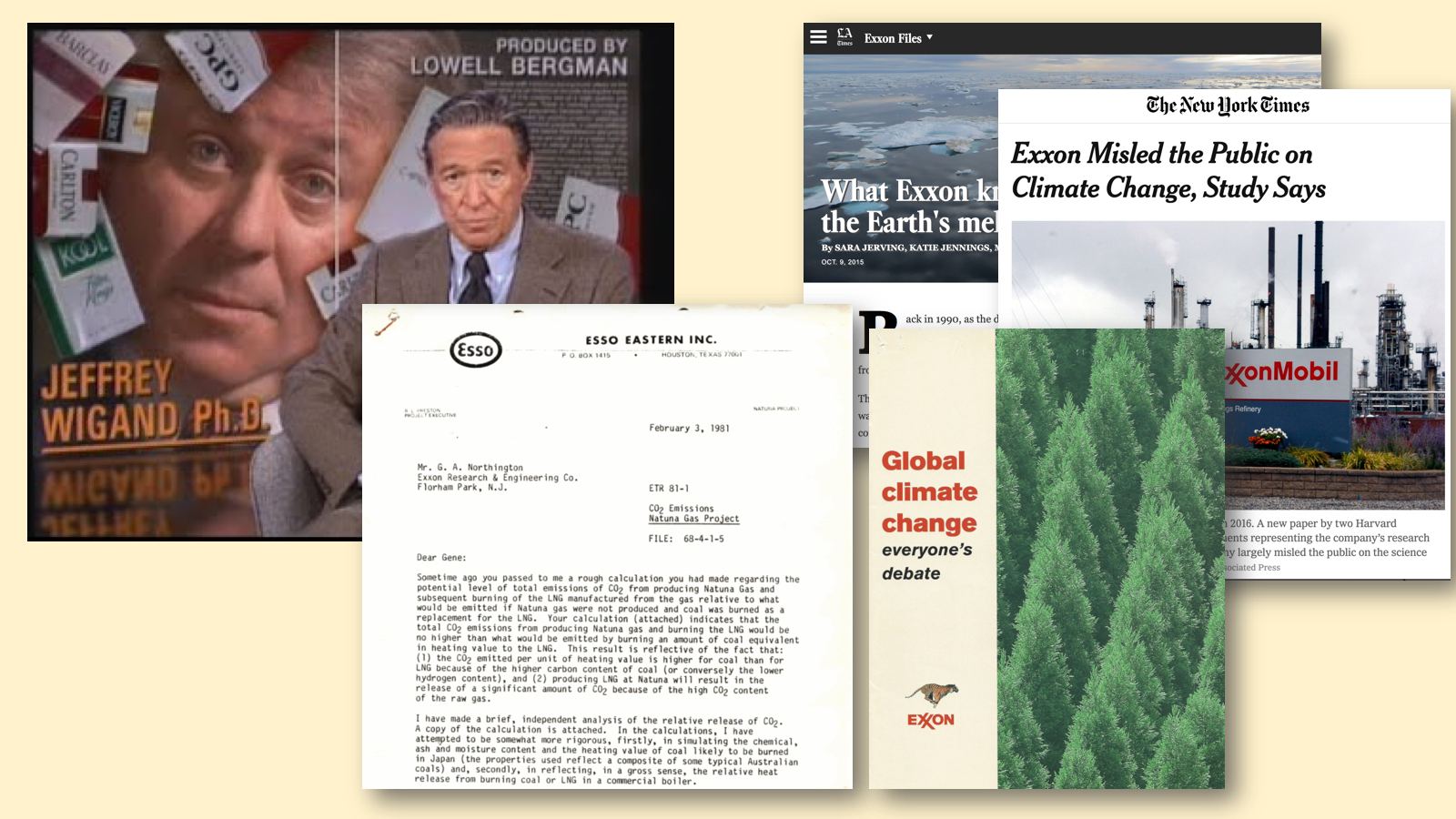

Tobacco Executives testifying to House Energy and Commerce Subcommittee on Health and the Environment that Cigarettes are not Addictive, April 1994

For one thing, when congress called the tobacco executives to account to the public for their behavior, they actually showed up.

I’m certainly not here to defend tobacco company executives, though. The more relevant comparison is to the scientists who worked at these companies.

The tobacco and fossil fuel companies had good scientists, who did work to understand the impact of their industry. Some of those scientists reached conclusions that were problematic for their companies. Their companies suppressed or distorted those results, and emphasized their investments in science in glossy brochures to influence public policy and opion.

So, my second challenge to engineers and researchers at Google who value Privacy, is do be doing work that potentially could lead to results the company would want to suppress.

This doesn't mean doing work that is hostile to Google (recall that Wigand's project at Brown & Williamson Tobacco was to develop a safer cigarette). But it does mean doing research to understand the scale and scope of privacy loss resulting from Google's products, and to measure its impact on individual behavior and society.

Google’s researchers are uniquely well positioned to do this type of research — they have the technical expertise and talent, access to data and resources, and opportunity to do large scale experiments.

Reactions

I was a bit worried about giving this talk to an audience at Google (about 40 Googlers and 40 academic researchers in the audience, as well as a live stream that I know some people elsewhere at Google were watching), especially with a cruise on Lake Washington later in the day. But, all the reactions I got were very encouraging and positive, with great willingness from the Googlers to consider how people outside might perceive their company and interest in thinking about ways they can do better.

My impression is the engineers and researchers at Google do care about Privacy, and have some opportunities to influence corporate decisions, but it is a large and complex company. From the way academics (especially cryptographers) reason about systems, once you trust Google to provide your hardware or operating system they are a trusted party and can easily access and control everything. From a complex corporate perspective, there are big difference between data on your physical device (even if it was built by Google), in a database at Google, and stored in an encrypted form with privacy noise, even if all the code doing this is written and controlled by the same organization that has full access to the data. Lots of the privacy work at Google is motivated by reducing the internal attack surfaces, so sensitive data is exposed to less code and people within the organization. This makes sense, at least for small p privacy.

There is a privacy review board at Google (mandated by an FTC consent agreement) that conducts a privacy review of all products and can go back to engineering teams with requests for changes (and possibly even prevent a product from being launched, although Googlers were murky on how much power they would have when things come down to it). On the other hand, the privacy review is done by Google employees, who, however well meaning and ethical they are, are still beholden to their employer. This strikes me as a positive, but more like the team-employed doctors do administer the concussion protocol during football games. (Unfortunately, Google’s efforts to set up an external ethics board did not go well.)

On the whole, though, I am encouraged by the discussions with the Google researchers, that there is some awareness of the complexities in working on privacy at Google, and that scientists and engineers there can provide some counter-balance to the dragon’s appetite.

This is a wonderful talk from @UdacityDave at the University of Virginia, delivered at Google, that touches on the fundamental ethical conflict of working on privacy technologies for a surveillance giant. https://t.co/ucPezrSuTB

— Pinboard (@Pinboard) June 25, 2019