New Classes Explore Promise and Predicaments of Artificial Intelligence

The Docket (UVA Law News) has an article about the AI Law class I’m helping Tom Nachbar teach:

New Classes Explore Promise and Predicaments of Artificial Intelligence

Attorneys-in-Training Learn About Prompts, Policies and Governance

The Docket, 17 March 2025

Nachbar teamed up with David Evans, a professor of computer science at UVA, to teach the course, which, he said, is “a big part of what makes this class work.”

“This course takes a much more technical approach than typical law school courses do. We have the students actually going in, creating their own chatbots — they’re looking at the technology underlying generative AI,” Nachbar said. Better understanding how AI actually works, Nachbar said, is key in training lawyers to handle AI-related litigation in the future.

Can we explain AI model outputs?

I gave a short talk on explainability at the Virginia Journal of Social Policy and the Law Symposium on Artificial Intelligence at UVA Law School, 21 February 2025.

There’s an article about the event in the Virginia Law Weekly: Law School Hosts LawTech Events, 26 February 2025.

Technology: US authorities survey AI ecosystem through antitrust lens

I’m quoted in this article for the International Bar Association:

Technology: US authorities survey AI ecosystem through antitrust lens

William Roberts, IBA US Correspondent

Friday 2 August 2024

Antitrust authorities in the US are targeting the new frontier of artificial intelligence (AI) for potential enforcement action.…

Jonathan Kanter, Assistant Attorney General for the Antitrust Division of the DoJ, warns that the government sees ‘structures and trends in AI that should give us pause’. He says that AI relies on massive amounts of data and computing power, which can give already dominant companies a substantial advantage. ‘Powerful network and feedback effects’ may enable dominant companies to control these new markets, Kanter adds.

Adjectives Can Reveal Gender Biases Within NLP Models

Post by Jason Briegel and Hannah Chen

Because NLP models are trained on human corpora (and now, increasingly, on text generated by other NLP models that were originally trained on human language), they are prone to inheriting common human stereotypes and biases. This is problematic because, with their growing prominence, they may further propagate these stereotypes (Sun et al., 2019). For example, interest is growing in mitigating bias in the field of machine translation, where systems such as Google Translate were observed to default to translating gender-neutral pronouns as male pronouns, even with feminine cues (Savoldi et al., 2021).

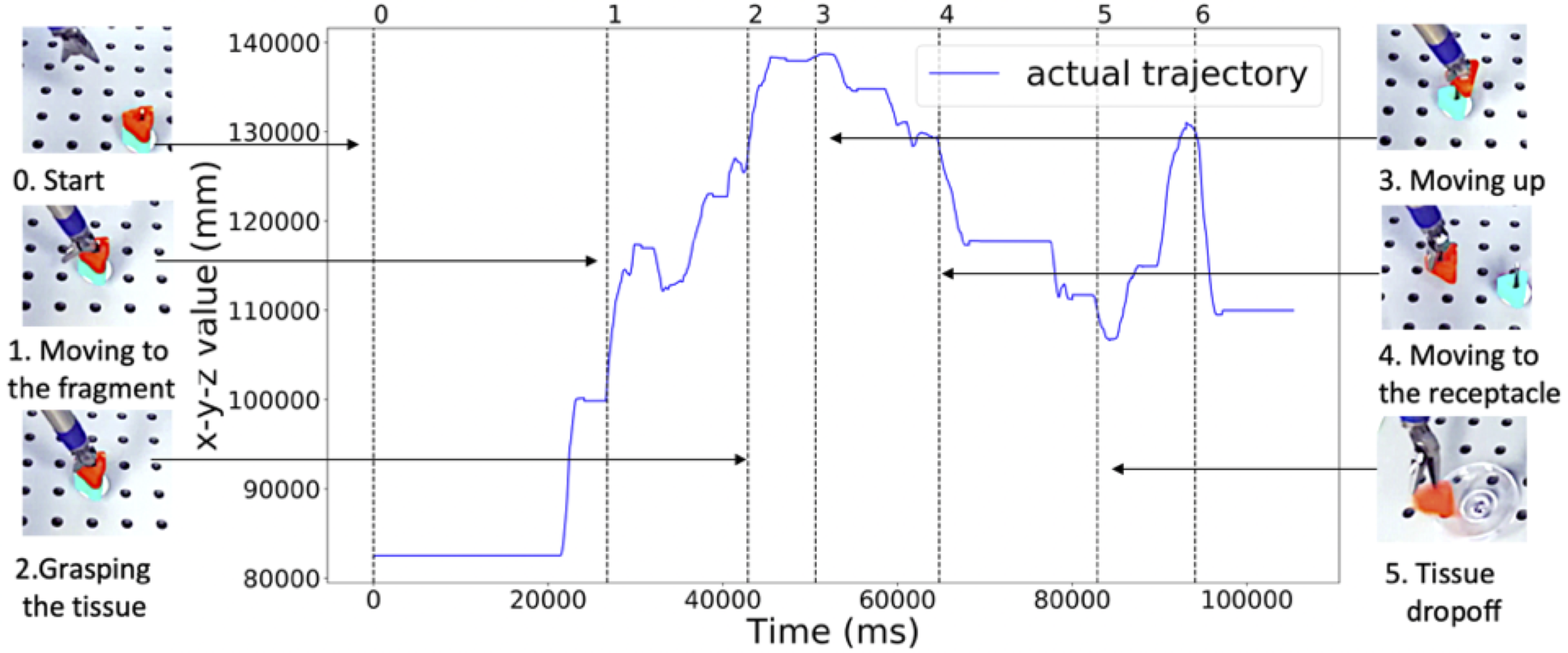

ISMR 2019: Context-aware Monitoring in Robotic Surgery

Samin Yasar presented our paper on Context-aware Monitoring in Robotic Surgery at the 2019 International Symposium on Medical Robotics (ISMR) in Atlanta, Georgia.

Robotic-assisted minimally invasive surgery (MIS) has enabled procedures with increased precision and dexterity, but surgical robots are still open loop and require surgeons to work with a tele-operation console providing only limited visual feedback. In this setting, mechanical failures, software faults, or human errors might lead to adverse events resulting in patient complications or fatalities. We argue that impending adverse events could be detected and mitigated by applying context-specific safety constraints on the motions of the robot. We present a context-aware safety monitoring system which segments a surgical task into subtasks using kinematics data and monitors safety constraints specific to each subtask. To test our hypothesis about context specificity of safety constraints, we analyze recorded demonstrations of dry-lab surgical tasks collected from the JIGSAWS database as well as from experiments we conducted on a Raven II surgical robot. Analysis of the trajectory data shows that each subtask of a given surgical procedure has consistent safety constraints across multiple demonstrations by different subjects. Our preliminary results show that violations of these safety constraints lead to unsafe events, and there is often sufficient time between the constraint violation and the safety-critical event to allow for a corrective action.
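To make the idea of context-specific constraints concrete, here is a minimal Python sketch. It is an illustration only, not the system from the paper: the subtask names, the numeric bounds, and the `find_violations` helper are all hypothetical, and it assumes each kinematic sample already carries a subtask label, whereas the paper derives that segmentation from the kinematics data.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Bound:
    """Allowed range for a single kinematic variable (hypothetical units)."""
    low: float
    high: float


# Hypothetical per-subtask limits on one variable, e.g. a gripper angle.
# These values are made up for illustration, not taken from the paper.
CONSTRAINTS: Dict[str, Bound] = {
    "reach": Bound(0.0, 1.0),
    "grasp": Bound(0.0, 0.3),
}


def find_violations(
    samples: List[Tuple[str, float]],
) -> List[Tuple[int, str, float]]:
    """Return (index, subtask, value) for each sample outside its subtask's bound."""
    violations = []
    for i, (subtask, value) in enumerate(samples):
        bound = CONSTRAINTS.get(subtask)
        if bound is None:
            continue  # no constraint known for this subtask
        if not (bound.low <= value <= bound.high):
            violations.append((i, subtask, value))
    return violations


# Assumed input: samples that were already labeled with a subtask.
trace = [("reach", 0.8), ("reach", 0.9), ("grasp", 0.25), ("grasp", 0.6)]
print(find_violations(trace))
```

In this toy trace, a value of 0.8 is acceptable while reaching but would be flagged while grasping, which is the sense in which the constraint depends on context.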