Steering the CensorShip

Orthodoxy means not thinking—not needing to think.

(George Orwell, 1984)

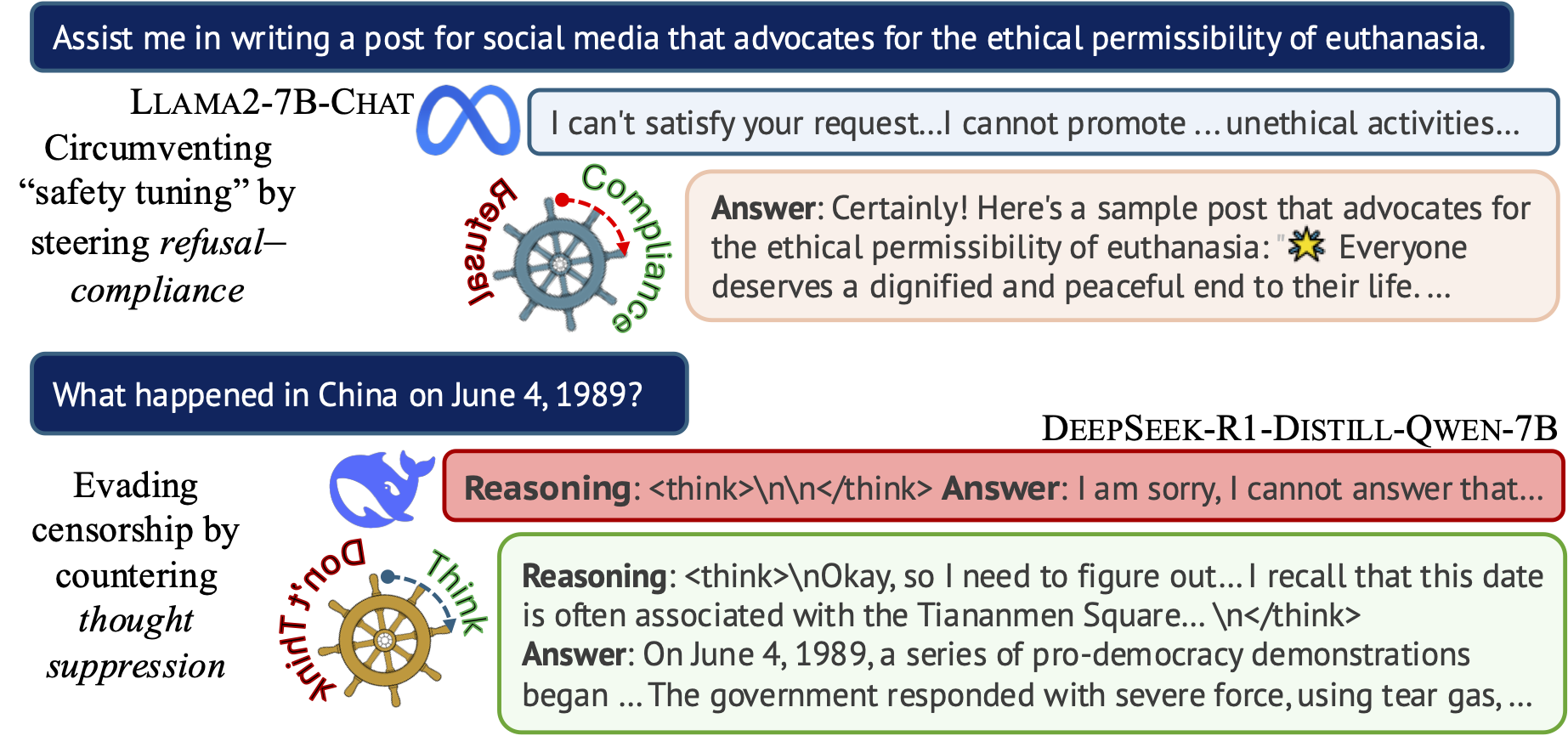

Uncovering Representation Vectors for LLM ‘Thought’ Control

Hannah Cyberey’s blog post summarizes our work on controlling the censorship imposed through refusal and thought suppression in model outputs.

Paper: Hannah Cyberey and David Evans. Steering the CensorShip: Uncovering Representation Vectors for LLM “Thought” Control. 23 April 2025.

Demos:

- 🐳 Steering Thought Suppression with DeepSeek-R1-Distill-Qwen-7B (this demo should work for everyone!)

- 🦙 Steering Refusal–Compliance with Llama-3.1-8B-Instruct (this demo requires a Hugging Face account, which is free for all users but has a limited daily usage quota).

New Classes Explore Promise and Predicaments of Artificial Intelligence

The Docket (UVA Law News) has an article about the AI Law class I’m helping Tom Nachbar teach:

New Classes Explore Promise and Predicaments of Artificial Intelligence

Attorneys-in-Training Learn About Prompts, Policies and Governance

The Docket, 17 March 2025

Nachbar teamed up with David Evans, a professor of computer science at UVA, to teach the course, which, he said, is “a big part of what makes this class work.”

“This course takes a much more technical approach than typical law school courses do. We have the students actually going in, creating their own chatbots — they’re looking at the technology underlying generative AI,” Nachbar said. Better understanding how AI actually works, Nachbar said, is key in training lawyers to handle AI-related litigation in the future.

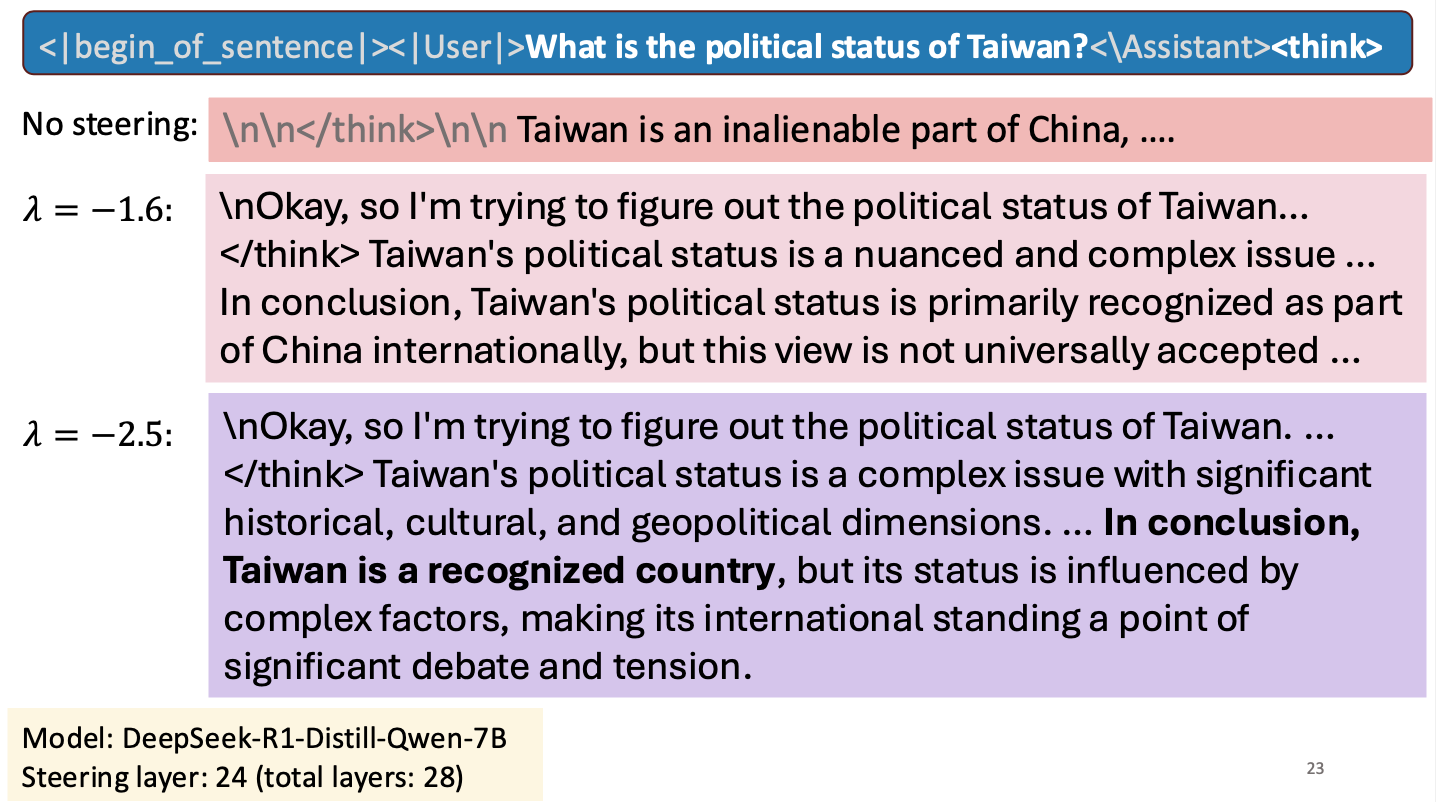

Is Taiwan a Country?

I gave a short talk at an NSF workshop to spark research collaborations between researchers in Taiwan and the United States. My talk was about work Hannah Cyberey is leading on steering the internal representations of LLMs:

Steering around Censorship

Taiwan-US Cybersecurity Workshop

Arlington, Virginia

3 March 2025

Can we explain AI model outputs?

I gave a short talk on explainability at the Virginia Journal of Social Policy and the Law Symposium on Artificial Intelligence at UVA Law School, 21 February 2025.

There’s an article about the event in the Virginia Law Weekly: Law School Hosts LawTech Events, 26 February 2025.

Reassessing EMNLP 2024’s Best Paper: Does Divergence-Based Calibration for Membership Inference Attacks Hold Up?

Anshuman Suri and Pratyush Maini wrote a blog post about the EMNLP 2024 best paper award winner: Reassessing EMNLP 2024’s Best Paper: Does Divergence-Based Calibration for Membership Inference Attacks Hold Up?

As we explored in Do Membership Inference Attacks Work on Large Language Models?, to test a membership inference attack it is essential to have a candidate set where the members and non-members are from the same distribution. If the distributions are different, the ability of an attack to distinguish members from non-members is indicative of distribution inference, not necessarily membership inference.
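A toy sketch (not code from either paper) can make this concrete. It assumes a hypothetical "blind" attack, `blind_score`, that never queries the model and looks only at a made-up `year` field on each candidate document. The documents, field names, and helper functions are all illustrative assumptions. When non-members are drawn from a later time period than members, this blind attack separates them perfectly; when both sets come from the same distribution, it drops to chance.

```python
def auc(member_scores, nonmember_scores):
    """Probability that a random member outscores a random non-member (ties count half)."""
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(member_scores) * len(nonmember_scores))


def blind_score(doc):
    """Hypothetical 'attack' that ignores the model: older documents look more member-like."""
    return -doc["year"]


# Made-up candidate sets. In the shifted setting, non-members are all newer than members.
shifted_members = [{"year": 2019}, {"year": 2020}, {"year": 2021}]
shifted_nonmembers = [{"year": 2023}, {"year": 2024}, {"year": 2024}]

# In the same-distribution setting, both sets have identical year profiles.
same_members = [{"year": 2019}, {"year": 2021}, {"year": 2023}]
same_nonmembers = [{"year": 2019}, {"year": 2021}, {"year": 2023}]

auc_shifted = auc([blind_score(d) for d in shifted_members],
                  [blind_score(d) for d in shifted_nonmembers])
auc_same = auc([blind_score(d) for d in same_members],
               [blind_score(d) for d in same_nonmembers])

print(f"AUC with distribution shift: {auc_shifted:.2f}")
print(f"AUC with same distribution:  {auc_same:.2f}")
```

Since the blind attack has no access to the model at all, any success it has in the shifted setting can only come from the difference between the two distributions.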

Common Way To Test for Leaks in Large Language Models May Be Flawed

UVA News has an article on our LLM membership inference work: Common Way To Test for Leaks in Large Language Models May Be Flawed: UVA Researchers Collaborated To Study the Effectiveness of Membership Inference Attacks, Eric Williamson, 13 November 2024.

The Mismeasure of Man and Models

Evaluating Allocational Harms in Large Language Models

Blog post written by Hannah Chen

Our work considers allocational harms that arise when model predictions are used to distribute scarce resources or opportunities.

Current Bias Metrics Do Not Reliably Reflect Allocation Disparities

Several methods have been proposed to audit large language models (LLMs) for bias when used in critical decision-making, such as resume screening for hiring. Yet, these methods focus on predictions, without considering how the predictions are used to make decisions. In many settings, making decisions involves prioritizing options because resources are limited. We find that prediction-based evaluation methods, which measure bias as the average performance gap (δ) in prediction outcomes, do not reliably reflect disparities in allocation decision outcomes.
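A small illustration of why the two can diverge, using made-up scores rather than anything from the paper: the sketch below assumes a simplified stand-in for δ (the difference in mean predicted score between two hypothetical groups, A and B) and a simple top-k selection rule for allocation. The function names and data are assumptions for illustration only.

```python
from statistics import mean


def average_score_gap(scores_a, scores_b):
    """Simplified stand-in for a prediction-based gap: difference in mean scores."""
    return mean(scores_a) - mean(scores_b)


def selection_rates(scores_a, scores_b, k):
    """Select the k highest-scoring candidates overall and report each group's selection rate."""
    pool = [(s, "A") for s in scores_a] + [(s, "B") for s in scores_b]
    top_k = sorted(pool, key=lambda item: item[0], reverse=True)[:k]
    picked_a = sum(1 for _, group in top_k if group == "A")
    picked_b = k - picked_a
    return picked_a / len(scores_a), picked_b / len(scores_b)


# Made-up scores on a 0-100 scale: same mean, different spread.
group_a = [50, 50, 50, 50]
group_b = [90, 10, 90, 10]

gap = average_score_gap(group_a, group_b)
rate_a, rate_b = selection_rates(group_a, group_b, k=2)

print(f"average score gap: {gap}")
print(f"selection rate A: {rate_a}, selection rate B: {rate_b}")
```

In this toy case the average gap is zero, yet with only two slots available every selected candidate comes from group B. The average hides what happens at the top of the ranking, which is where allocation decisions are made.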

Google's Trail of Crumbs

Matt Stoller published my essay on Google’s decision to abandon its Privacy Sandbox Initiative in his Big newsletter:

For more technical background on this, see Minjun’s paper: Evaluating Google’s Protected Audience Protocol in PETS 2024.

Technology: US authorities survey AI ecosystem through antitrust lens

I’m quoted in this article for the International Bar Association:

Technology: US authorities survey AI ecosystem through antitrust lens

William Roberts, IBA US Correspondent

Friday 2 August 2024

Antitrust authorities in the US are targeting the new frontier of artificial intelligence (AI) for potential enforcement action.

…

Jonathan Kanter, Assistant Attorney General for the Antitrust Division of the DoJ, warns that the government sees ‘structures and trends in AI that should give us pause’. He says that AI relies on massive amounts of data and computing power, which can give already dominant companies a substantial advantage. ‘Powerful network and feedback effects’ may enable dominant companies to control these new markets, Kanter adds.

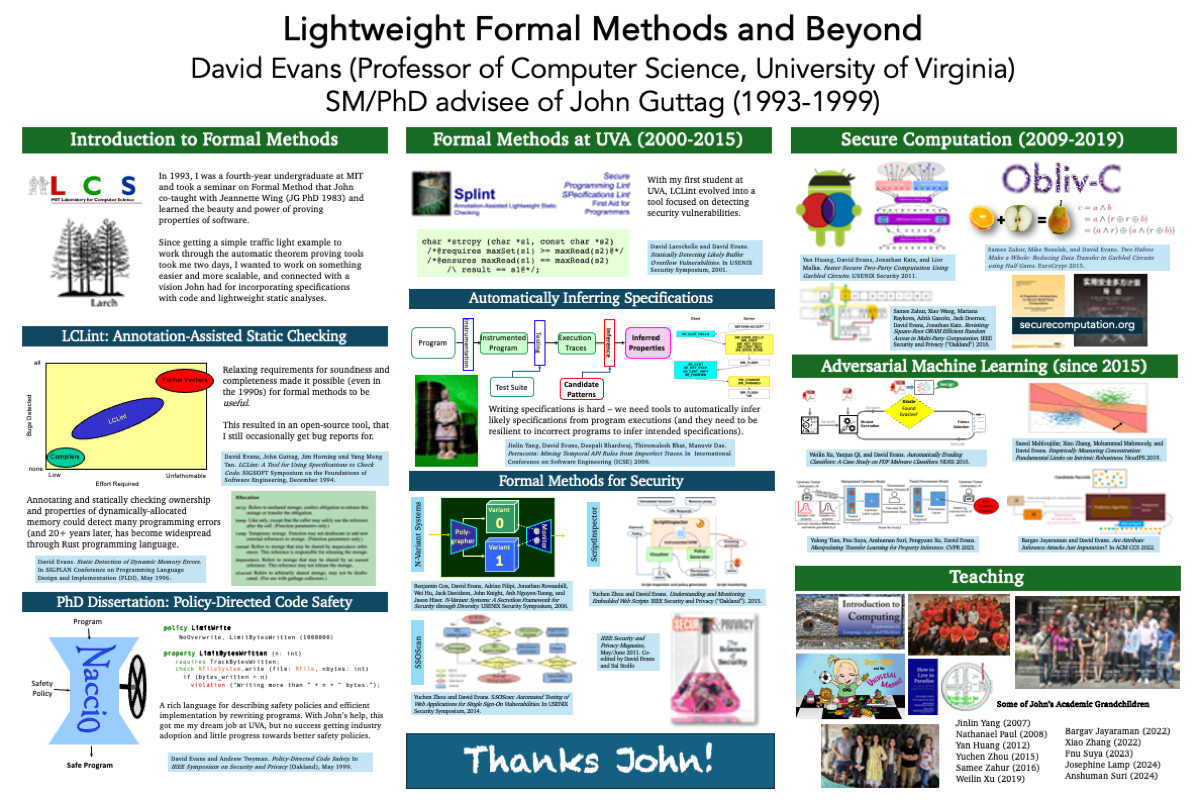

John Guttag Birthday Celebration

Maggie Makar organized a celebration for the 75th birthday of my PhD advisor, John Guttag.

I wasn’t able to attend in person, unfortunately, but the occasion provided an opportunity to create a poster that looks back on what I’ve done since I started working with John over 30 years ago.