Adversarially Robust Representations

Post by Sicheng Zhu

With the rapid development of deep learning and the explosive growth of unlabeled data, representation learning is becoming increasingly important. It has enabled impressive applications such as pre-trained language models (e.g., BERT and GPT-3).

Popular as it is, representation learning raises concerns about the robustness of learned representations under adversarial settings. For example, how can we compare the robustness of different representations, and how can we build representations that enable robust downstream classifiers?

Intrinsic Robustness using Conditional GANs

The video of Xiao’s presentation for AISTATS 2020 is now available: Understanding the Intrinsic Robustness of Image Distributions using Conditional Generative Models

Starting with Gilmer et al. (2018), several works have demonstrated the inevitability of adversarial examples based on different assumptions about the underlying input probability space. It remains unclear, however, whether these results apply to natural image distributions. In this work, we assume the underlying data distribution is captured by some conditional generative model, and prove intrinsic robustness bounds for a general class of classifiers, which solves an open problem in Fawzi et al. (2018). Building upon the state-of-the-art conditional generative models, we study the intrinsic robustness of two common image benchmarks under ℓ2 perturbations, and show the existence of a large gap between the robustness limits implied by our theory and the adversarial robustness achieved by current state-of-the-art robust models.

Hybrid Batch Attacks at USENIX Security 2020

Here’s the video for Suya’s presentation on Hybrid Batch Attacks at USENIX Security 2020:

Download Video [mp4]

Blog Post

Paper: [PDF] [arXiv]

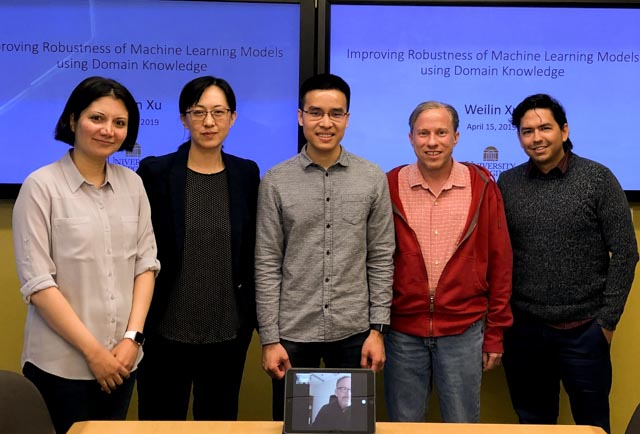

Congratulations Dr. Xu!

Congratulations to Weilin Xu for successfully defending his PhD Thesis!

Although machine learning techniques have achieved great success in many areas, such as computer vision, natural language processing, and computer security, recent studies have shown that they are not robust under attack. A motivated adversary is often able to craft input samples that force a machine learning model to produce incorrect predictions, even if the target model achieves high accuracy on normal test inputs. This raises great concern when machine learning models are deployed for security-sensitive tasks.
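
To make the idea of a crafted input concrete, here is a minimal sketch on a toy linear classifier. It is not a method from the thesis; the weights, the input, and the helper names (`predict`, `minimal_l2_flip`) are all made up for illustration. For a linear model, the smallest ℓ2 perturbation that changes the prediction moves the input straight toward the decision boundary, along the weight vector.

```python
import math

def predict(w, b, x):
    """Toy linear classifier: +1 if w.x + b >= 0, else -1."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1

def minimal_l2_flip(w, b, x, overshoot=1e-3):
    """Perturbation that projects x onto the boundary and steps slightly past it.

    Assumes x is not already exactly on the boundary (score != 0).
    """
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    norm_sq = sum(wi * wi for wi in w)
    # The distance to the hyperplane is |score| / ||w||; move along -sign(score) * w.
    scale = -(1.0 + overshoot) * score / norm_sq
    return [scale * wi for wi in w]

# Hypothetical model and input, chosen only for the example.
w, b = [3.0, 4.0], -1.0
x = [1.0, 1.0]

delta = minimal_l2_flip(w, b, x)
x_adv = [xi + di for xi, di in zip(x, delta)]
delta_norm = math.sqrt(sum(di * di for di in delta))

print("clean prediction:", predict(w, b, x))
print("adversarial prediction:", predict(w, b, x_adv))
print("l2 norm of perturbation:", round(delta_norm, 4))
```

Deep networks are not linear, so real attacks rely on gradient-based or query-based search rather than a closed-form projection, but the goal is the same: a small change to the input that changes the model's output.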