David Evans, professor of computer science in the University of Virginia School of Engineering and Applied Science, is leading research to understand how machine learning models can be compromised.

Improved Estimation of Concentration (ICLR 2021)

Our paper on Improved Estimation of Concentration Under ℓp-Norm Distance Metrics Using Half Spaces (Jack Prescott, Xiao Zhang, and David Evans) will be presented at ICLR 2021.

Abstract: Concentration of measure has been argued to be the fundamental cause of adversarial vulnerability. Mahloujifar et al. (2019) presented an empirical way to measure the concentration of a data distribution using samples, and employed it to find lower bounds on intrinsic robustness for several benchmark datasets. However, it remains unclear whether these lower bounds are tight enough to provide a useful approximation for the intrinsic robustness of a dataset. To gain a deeper understanding of the concentration of measure phenomenon, we first extend the Gaussian Isoperimetric Inequality to non-spherical Gaussian measures and arbitrary ℓp-norms (p ≥ 2). We leverage these theoretical insights to design a method that uses half-spaces to estimate the concentration of any empirical dataset under ℓp-norm distance metrics. Our proposed algorithm is more efficient than Mahloujifar et al. (2019)’s, and experiments on synthetic datasets and image benchmarks demonstrate that it is able to find much tighter intrinsic robustness bounds. These tighter estimates provide further evidence that rules out intrinsic dataset concentration as a possible explanation for the adversarial vulnerability of state-of-the-art classifiers.
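
To make the half-space idea concrete, here is a minimal sketch, not the paper's algorithm. It rests on one standard fact: the ℓp distance from a point x to the half space {x : w·x ≤ b} is max(0, w·x − b) / ‖w‖q, where q is the dual exponent (1/p + 1/q = 1). The ε-expansion of a half space is therefore another half space with its threshold shifted by ε‖w‖q, so its empirical measure is a simple count over samples. The names `dual_norm` and `half_space_expansion` are hypothetical, and the direction `w` is assumed fixed here; the paper's method searches for a good half space instead.

```python
import numpy as np


def dual_norm(w, p):
    """Return ||w||_q, where q is the dual exponent of p (1/p + 1/q = 1)."""
    w = np.asarray(w, dtype=float)
    if p == 1:
        return float(np.max(np.abs(w)))      # dual of l1 is l-infinity
    if np.isinf(p):
        return float(np.sum(np.abs(w)))      # dual of l-infinity is l1
    q = p / (p - 1.0)
    return float(np.sum(np.abs(w) ** q) ** (1.0 / q))


def half_space_expansion(X, w, alpha, eps, p=2.0):
    """Empirical measure of a half space and of its eps-expansion under l_p.

    The half space is H = {x : w.x <= b}, with b chosen as the smallest
    sample score such that at least an alpha fraction of samples lie in H.
    Returns (measure of H, measure of the eps-expansion of H).
    """
    X = np.asarray(X, dtype=float)
    w = np.asarray(w, dtype=float)
    scores = X @ w
    n = scores.shape[0]
    k = max(int(np.ceil(alpha * n)) - 1, 0)  # index of the alpha-quantile sample
    b = np.sort(scores)[k]
    measure = float(np.mean(scores <= b))
    # Points within l_p distance eps of H satisfy w.x <= b + eps * ||w||_q.
    expanded = float(np.mean(scores <= b + eps * dual_norm(w, p)))
    return measure, expanded


if __name__ == "__main__":
    # Synthetic example: standard Gaussian samples in 5 dimensions.
    rng = np.random.default_rng(0)
    samples = rng.standard_normal((20000, 5))
    direction = np.array([1.0, 0.0, 0.0, 0.0, 0.0])
    print(half_space_expansion(samples, direction, alpha=0.5, eps=1.0, p=2.0))
```

For a fixed `alpha`, a smaller expanded measure means a less concentrated half space, so minimizing the second return value over `w` would give an empirical estimate of concentration restricted to half spaces.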

Adversarially Robust Representations

Post by Sicheng Zhu

With the rapid development of deep learning and the explosive growth of unlabeled data, representation learning is becoming increasingly important. It has enabled impressive applications such as pre-trained language models (e.g., BERT and GPT-3).

Popular as it is, representation learning raises concerns about the robustness of learned representations under adversarial settings. For example, how can we compare the robustness of different representations, and how can we build representations that enable robust downstream classifiers?

Intrinsic Robustness using Conditional GANs

The video of Xiao’s presentation for AISTATS 2020 is now available: Understanding the Intrinsic Robustness of Image Distributions using Conditional Generative Models

Starting with Gilmer et al. (2018), several works have demonstrated the inevitability of adversarial examples based on different assumptions about the underlying input probability space. It remains unclear, however, whether these results apply to natural image distributions. In this work, we assume the underlying data distribution is captured by some conditional generative model, and prove intrinsic robustness bounds for a general class of classifiers, which solves an open problem in Fawzi et al. (2018). Building upon the state-of-the-art conditional generative models, we study the intrinsic robustness of two common image benchmarks under ℓ2 perturbations, and show the existence of a large gap between the robustness limits implied by our theory and the adversarial robustness achieved by current state-of-the-art robust models.

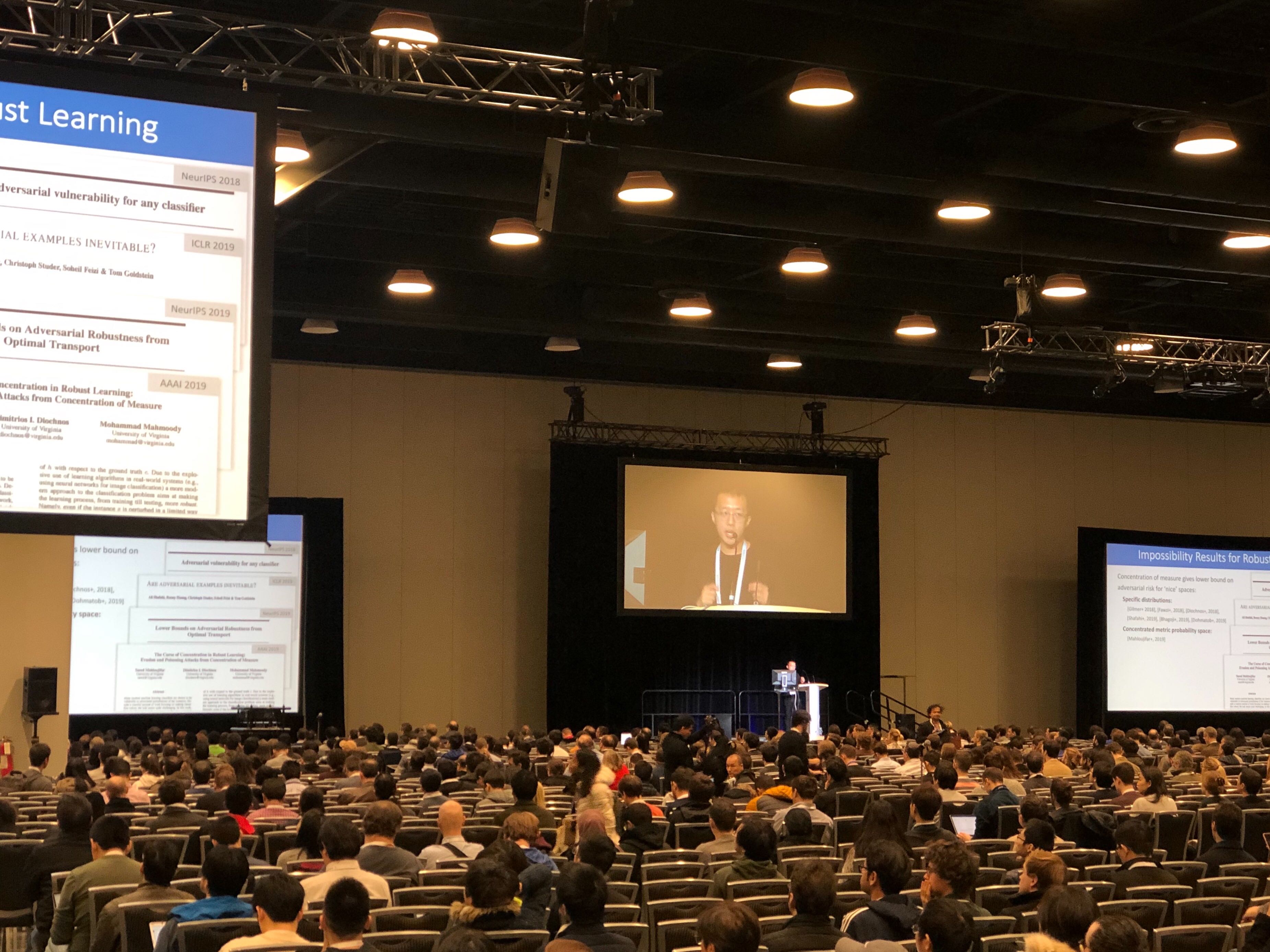

NeurIPS 2019

Here's a video of Xiao Zhang's presentation at NeurIPS 2019:

https://slideslive.com/38921718/track-2-session-1 (starting at 26:50)

See this post for info on the paper.

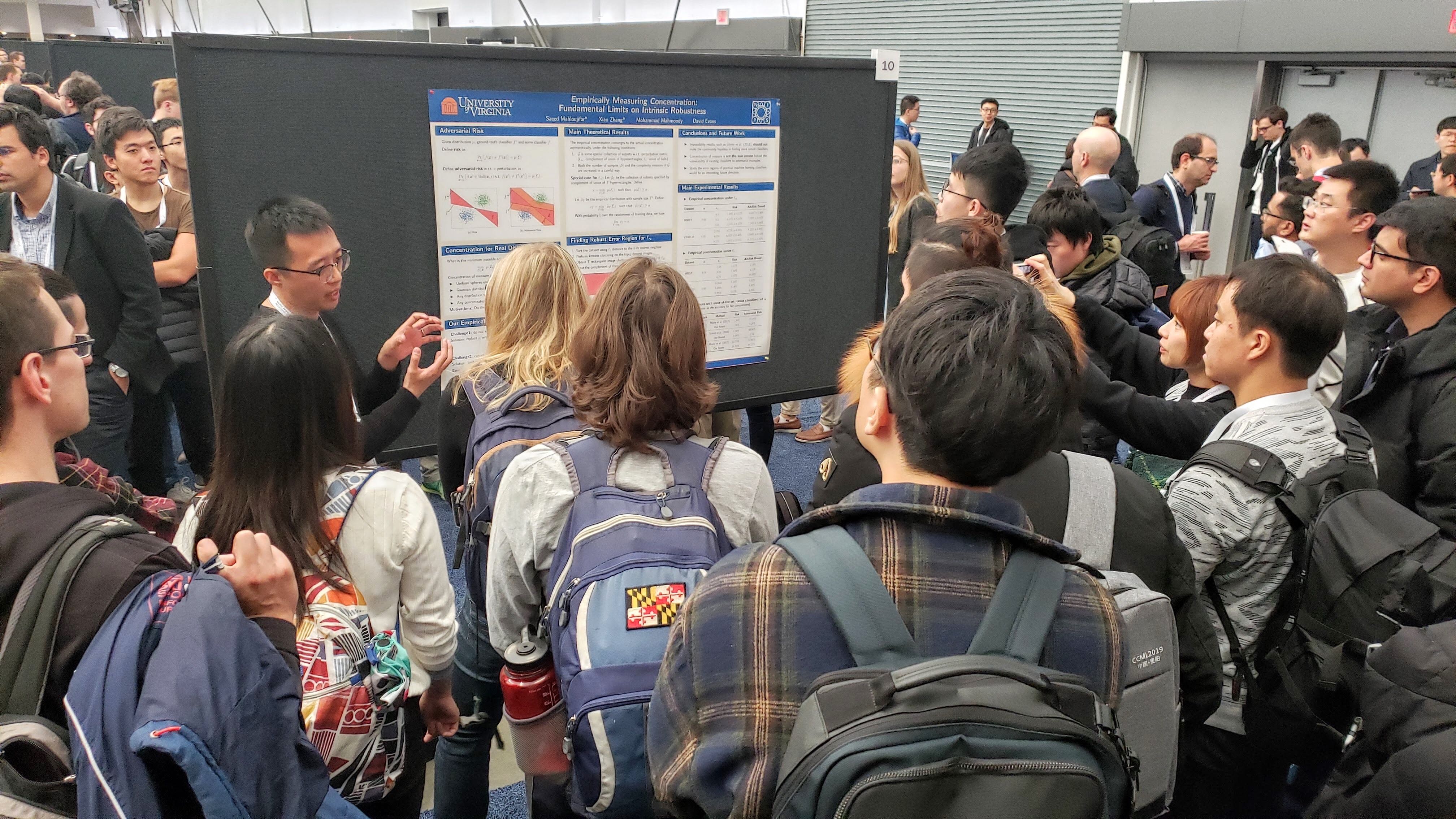

Here are a few pictures from NeurIPS 2019 (by Sicheng Zhu and Mohammad Mahmoody):

NeurIPS 2019: Empirically Measuring Concentration

Xiao Zhang will present our work (with Saeed Mahloujifar and Mohammad Mahmoody) as a spotlight at NeurIPS 2019, Vancouver, 10 December 2019.

Recent theoretical results, starting with Gilmer et al.’s Adversarial Spheres (2018), show that if inputs are drawn from a concentrated metric probability space, then adversarial examples with small perturbations are inevitable. The key insight from this line of research is that concentration of measure gives a lower bound on adversarial risk for a large collection of classifiers (e.g., imperfect classifiers with risk at least $\alpha$), which in turn implies impossibility results for robust learning against adversarial examples.
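
One way to write this connection down, as a sketch (the notation is an assumption and may differ from the paper's): let $(\mathcal{X}, \mu, \Delta)$ be a metric probability space, and for a set $\mathcal{E} \subseteq \mathcal{X}$ let $\mathcal{E}_\epsilon = \{x \in \mathcal{X} : \Delta(x, \mathcal{E}) \le \epsilon\}$ denote its $\epsilon$-expansion. The concentration function is the smallest measure that the expansion of any set of measure at least $\alpha$ can have:

$$
h(\mu, \alpha, \epsilon) = \inf_{\mathcal{E} \subseteq \mathcal{X}} \left\{ \mu(\mathcal{E}_\epsilon) : \mu(\mathcal{E}) \ge \alpha \right\}
$$

If a classifier's error region $\mathcal{E}$ has risk $\mu(\mathcal{E}) \ge \alpha$, then every input within distance $\epsilon$ of $\mathcal{E}$ can be perturbed into an error, so its adversarial risk is $\mu(\mathcal{E}_\epsilon) \ge h(\mu, \alpha, \epsilon)$. Estimating $h$ from samples is therefore what yields a bound that holds for every such classifier.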

Research Symposium Posters

Five students from our group presented posters at the department’s Fall Research Symposium:

Anshuman Suri's Overview Talk