Congratulations, Dr. Suri!

Congratulations to Anshuman Suri for successfully defending his PhD thesis!

Tianhao Wang, Dr. Anshuman Suri, Nando Fioretto, Cong Shen

On Screen: David Evans, Giuseppe Ateniese

Using machine learning models comes at the risk of leaking information about data used in their training and deployment. This leakage can expose sensitive information about properties of the underlying data distribution, data from participating users, or even individual records in the training data. In this dissertation, we develop and evaluate novel methods to quantify and audit such information disclosure at three granularities: distribution, user, and record.

Do Membership Inference Attacks Work on Large Language Models?

Membership inference attacks (MIAs) attempt to predict whether a particular datapoint is a member of a target model’s training data. Despite extensive research on traditional machine learning models, there has been limited work studying MIA on the pre-training data of large language models (LLMs).
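To make the definition concrete, here is a minimal sketch of one of the simplest MIAs: guess "member" when the target model's loss on a datapoint falls below a threshold. This is an illustration only, not necessarily one of the attacks evaluated in the paper. The function names, loss values, and threshold are hypothetical.

```python
import math


def cross_entropy(prob_of_true_label: float) -> float:
    """Loss of the target model on one datapoint, given the probability
    it assigns to the correct label (or, for an LM, to the true token)."""
    return -math.log(max(prob_of_true_label, 1e-12))


def loss_threshold_mia(losses, threshold):
    """Predict membership for each datapoint: True (member) when the
    target model's loss is below the threshold, False otherwise."""
    return [loss < threshold for loss in losses]


# Hypothetical probabilities the target model assigns to the true labels.
probs = [0.95, 0.40, 0.90, 0.10]
losses = [cross_entropy(p) for p in probs]

# The threshold is an assumption; in practice it would be calibrated,
# for example on data known not to be in the training set.
predictions = loss_threshold_mia(losses, threshold=0.5)
```

The intuition is that models tend to fit their training data more closely than unseen data, so a lower loss is treated as weak evidence of membership.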

We perform a large-scale evaluation of MIAs over a suite of language models (LMs) trained on the Pile, ranging from 160M to 12B parameters. We find that MIAs barely outperform random guessing for most settings across varying LLM sizes and domains. Our further analyses reveal that this poor performance can be attributed to (1) the combination of a large dataset and few training iterations, and (2) an inherently fuzzy boundary between members and non-members.

SoK: Let the Privacy Games Begin! A Unified Treatment of Data Inference Privacy in Machine Learning

Our paper on the use of cryptographic-style games to model inference privacy is published in the IEEE Symposium on Security and Privacy (Oakland):

Giovanni Cherubin, , Boris Köpf, Andrew Paverd, Anshuman Suri, Shruti Tople, and Santiago Zanella-Béguelin. SoK: Let the Privacy Games Begin! A Unified Treatment of Data Inference Privacy in Machine Learning. IEEE Symposium on Security and Privacy, 2023. [Arxiv]

Tired of diverse definitions of machine learning privacy risks? Curious about game-based definitions? In our paper, we present privacy games as a tool for describing and analyzing privacy risks in machine learning. Join us on May 22nd, 11 AM @IEEESSP '23 https://t.co/NbRuTmHyd2 pic.twitter.com/CIzsT7UY4b

CVPR 2023: Manipulating Transfer Learning for Property Inference

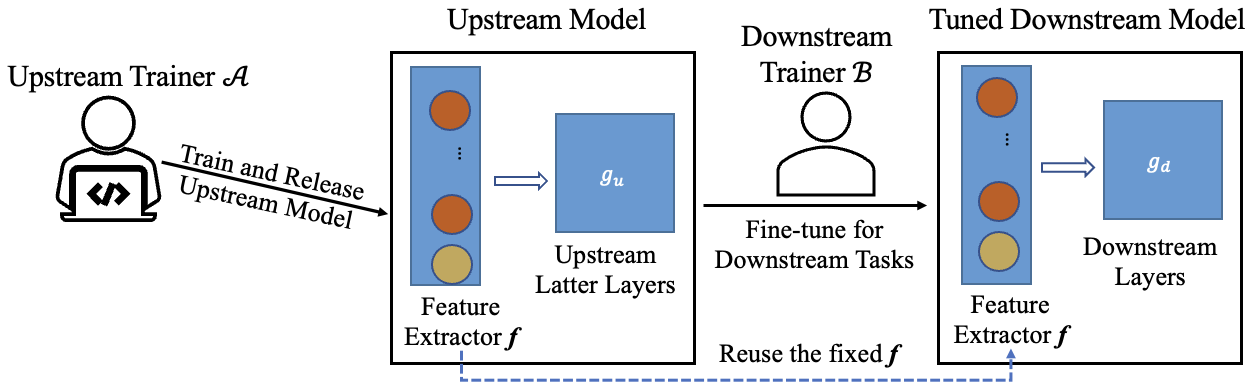

Manipulating Transfer Learning for Property Inference

Transfer learning is a popular method to train deep learning models efficiently. By reusing parameters from upstream pre-trained models, the downstream trainer can use fewer computing resources to train downstream models, compared to training models from scratch.

The figure below shows the typical process of transfer learning for vision tasks:

However, the nature of transfer learning can be exploited by a malicious upstream trainer, leading to severe risks to the downstream trainer.

MICO Challenge in Membership Inference

Anshuman Suri wrote up an interesting post on his experience with the MICO Challenge, a membership inference competition that was part of SaTML. Anshuman placed second in the competition (on the CIFAR data set), where the metric is highest true positive rate at a 0.1 false positive rate over a set of models (some trained using differential privacy and some without).

Anshuman’s post describes the methods he used and his experience in the competition: My submission to the MICO Challenge.
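The metric mentioned above, true positive rate (TPR) at a fixed false positive rate (FPR), can be computed from attack scores roughly as follows. This is a minimal sketch and not the competition's official scoring code. The function name and example scores are hypothetical, and higher scores are assumed to mean "more likely a member".

```python
def tpr_at_fpr(member_scores, nonmember_scores, target_fpr=0.1):
    """Fraction of members detected at the most permissive threshold
    whose false positive rate does not exceed target_fpr."""
    if not member_scores or not nonmember_scores:
        raise ValueError("both score lists must be non-empty")

    # Number of non-members we are allowed to flag incorrectly.
    # The small epsilon guards against floating-point rounding.
    max_false_positives = int(target_fpr * len(nonmember_scores) + 1e-9)

    sorted_non = sorted(nonmember_scores, reverse=True)
    if max_false_positives >= len(sorted_non):
        threshold = float("-inf")
    else:
        # Scores strictly above this value include at most
        # max_false_positives non-members, even with ties.
        threshold = sorted_non[max_false_positives]

    true_positives = sum(score > threshold for score in member_scores)
    return true_positives / len(member_scores)


# Hypothetical attack scores.
members = [0.9, 0.8, 0.7, 0.4, 0.2]
nonmembers = [0.95, 0.6, 0.5, 0.3, 0.3, 0.2, 0.2, 0.1, 0.1, 0.05]
result = tpr_at_fpr(members, nonmembers, target_fpr=0.1)
```

Reporting TPR at a low FPR rewards attacks that confidently identify some members while making few false accusations, rather than attacks that are only slightly better than chance on average.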

Dissecting Distribution Inference

(Cross-post by Anshuman Suri)

Distribution inference attacks aim to infer statistical properties of data used to train machine learning models. These attacks are sometimes surprisingly potent, as we demonstrated in previous work.

KL Divergence Attack

Most distribution inference attacks involve training a meta-classifier, either using model parameters in white-box settings (Ganju et al., Property Inference Attacks on Fully Connected Neural Networks using Permutation Invariant Representations, CCS 2018), or using model predictions in black-box scenarios (Zhang et al., Leakage of Dataset Properties in Multi-Party Machine Learning, USENIX Security 2021). While other black-box attacks were proposed in our prior work, they are not as accurate as meta-classifier-based methods, and they still require training shadow models (Suri and Evans, Formalizing and Estimating Distribution Inference Risks, PETS 2022).
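This excerpt does not spell out the KL divergence attack itself. As a rough illustration of the general idea of comparing model outputs with KL divergence, the sketch below assumes a black-box adversary who has a victim model's predicted probabilities on some query points, plus the predictions of reference models trained on each of two candidate distributions. All names and numbers are hypothetical, and the actual attack in the post may differ in its details.

```python
import math


def bernoulli_kl(p: float, q: float, eps: float = 1e-6) -> float:
    """KL divergence KL(Bern(p) || Bern(q)), with clipping for stability."""
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def mean_kl(victim_probs, reference_probs):
    """Average per-point KL divergence between two sets of predictions."""
    if len(victim_probs) != len(reference_probs):
        raise ValueError("prediction lists must have the same length")
    total = sum(bernoulli_kl(p, q) for p, q in zip(victim_probs, reference_probs))
    return total / len(victim_probs)


def guess_distribution(victim_probs, probs_d0, probs_d1):
    """Return 0 or 1: the candidate distribution whose reference
    predictions are closer (in mean KL) to the victim's predictions."""
    kl0 = mean_kl(victim_probs, probs_d0)
    kl1 = mean_kl(victim_probs, probs_d1)
    return 0 if kl0 <= kl1 else 1


# Hypothetical positive-class probabilities on three query points.
victim = [0.9, 0.2, 0.7]
reference_d0 = [0.85, 0.25, 0.65]  # model trained on candidate distribution 0
reference_d1 = [0.5, 0.6, 0.3]     # model trained on candidate distribution 1
guess = guess_distribution(victim, reference_d0, reference_d1)
```

Unlike a meta-classifier, a comparison of this kind needs no additional training step beyond the reference models themselves.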

Cray Distinguished Speaker: On Leaky Models and Unintended Inferences

Here are the slides from my Cray Distinguished Speaker talk, On Leaky Models and Unintended Inferences: [PDF]

The ChatGPT limerick version of my talk abstract is much better than mine:

A machine learning model, oh so grand

With data sets that it held in its hand

It performed quite well

But secrets to tell

And an adversary’s tricks it could not withstand.

Thanks to Stephen McCamant and Kangjie Lu for hosting my visit, and to everyone at the University of Minnesota. Also great to catch up with UVA BSCS alumnus, Stephen J. Guy.

Attribute Inference attacks are really Imputation

Post by Bargav Jayaraman

Attribute inference attacks have been shown by prior works to pose a privacy threat against ML models. However, these works assume knowledge of the training distribution, and we show that in such cases these attacks do no better than a data imputation attack that does not have access to the model. We explore the attribute inference risks in cases where the adversary has limited or no prior knowledge of the training distribution and show that our white-box attribute inference attack (which uses neuron activations to infer the unknown sensitive attribute) surpasses imputation in these data-constrained cases. This attack uses the training distribution information leaked by the model, and thus poses a privacy risk when the distribution is private.

Congratulations, Dr. Jayaraman!

Congratulations to Bargav Jayaraman for successfully defending his PhD thesis!

Dr. Jayaraman and his PhD committee: Mohammad Mahmoody, Quanquan Gu (UCLA Department of Computer Science, on screen), Yanjun Qi (Committee Chair, on screen), Denis Nekipelov (Department of Economics, on screen), and David Evans.

Bargav will join the Meta AI Lab in Menlo Park, CA as a post-doctoral researcher.

Analyzing the Leaky Cauldron: Inference Attacks on Machine Learning

Machine learning models have been shown to leak sensitive information about their training data. An adversary having access to the model can infer different types of sensitive information, such as learning if a particular individual’s data is in the training set, extracting sensitive patterns like passwords in the training set, or predicting missing sensitive attribute values for partially known training records. This dissertation quantifies this privacy leakage. We explore inference attacks against machine learning models including membership inference, pattern extraction, and attribute inference. While our attacks give an empirical lower bound on the privacy leakage, we also provide a theoretical upper bound on the privacy leakage metrics. Our experiments across various real-world data sets show that the membership inference attacks can infer a subset of candidate training records with high attack precision, even in challenging cases where the adversary’s candidate set is mostly non-training records. In our pattern extraction experiments, we show that an adversary is able to recover email ids, passwords and login credentials from large transformer-based language models. Our attribute inference adversary is able to use underlying training distribution information inferred from the model to confidently identify candidate records with sensitive attribute values. We further evaluate the privacy risk implications for individuals contributing their data for model training. Our findings suggest that different subsets of individuals are vulnerable to different membership inference attacks, and that some individuals are repeatedly identified across multiple runs of an attack.
For attribute inference, we find that a subset of candidate records with a sensitive attribute value are correctly predicted by our white-box attribute inference attacks but would be misclassified by an imputation attack that does not have access to the target model. We explore different defense strategies to mitigate the inference risks, including approaches that avoid model overfitting such as early stopping and differential privacy, and approaches that remove sensitive data from the training. We find that differential privacy mechanisms can thwart membership inference and pattern extraction attacks, but even differential privacy fails to mitigate the attribute inference risks since the attribute inference attack relies on the distribution information leaked by the model whereas differential privacy provides no protection against leakage of distribution statistics.

BIML: What Machine Learnt Models Reveal

I gave a talk in the Berryville Institute of Machine Learning in the Barn series on What Machine Learnt Models Reveal, which is now available as an edited video:

David Evans, a professor of computer science researching security and privacy at the University of Virginia, talks about data leakage risk in ML systems and different approaches used to attack and secure models and datasets. Juxtaposing adversarial risks that target records and those aimed at attributes, David shows that differential privacy cannot capture all inference risks, and calls for more research based on privacy experiments aimed at both datasets and distributions.