JASON Spring Meeting: Adversarial Machine Learning

I had the privilege of speaking at the JASON Spring Meeting, undoubtedly one of the most diverse meetings I’ve been part of, with talks on hypersonic signatures (from my DSSG 2008-2009 colleague, Ian Boyd), FBI DNA, nuclear proliferation in Iran, engineering biological materials, and the 2020 census (including a very interesting presentation from John Abowd on the differential privacy mechanisms they have developed and evaluated). (Unfortunately, my lack of security clearance kept me out of the SCIF used for the talks on quantum computing and more sensitive topics.)

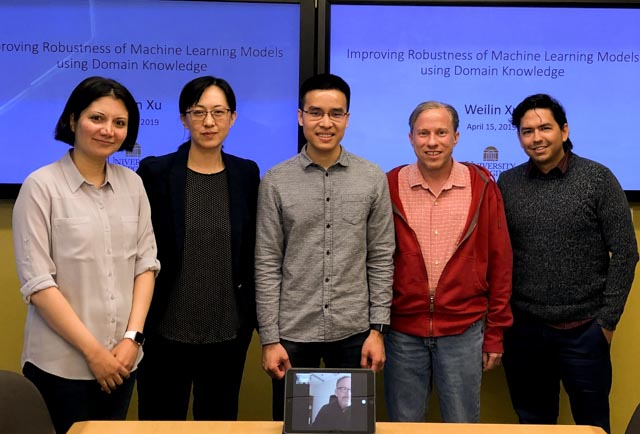

Congratulations Dr. Xu!

Congratulations to Weilin Xu for successfully defending his PhD Thesis!

Although machine learning techniques have achieved great success in many areas, such as computer vision, natural language processing, and computer security, recent studies have shown that they are not robust under attack. A motivated adversary is often able to craft input samples that force a machine learning model to produce incorrect predictions, even if the target model achieves high accuracy on normal test inputs. This raises great concern when machine learning models are deployed for security-sensitive tasks.

A Plan to Eradicate Stalkerware

Sam Havron (BSCS 2017) is quoted in an article in Wired on eradicating stalkerware:

The full extent of that stalkerware crackdown will only prove out with time and testing, says Sam Havron, a Cornell researcher who worked on last year’s spyware study. Much more work remains. He notes that domestic abuse victims can also be tracked with dual-use apps often overlooked by antivirus firms, like antitheft software Cerberus. Even innocent tools like Apple’s Find My Friends and Google Maps’ location-sharing features can be abused if they don’t better communicate to users that they may have been secretly configured to share their location. “This is really exciting news,” Havron says of Kaspersky’s stalkerware change. “Hopefully it will spur the rest of the industry to follow suit. But it’s just the very first thing.”

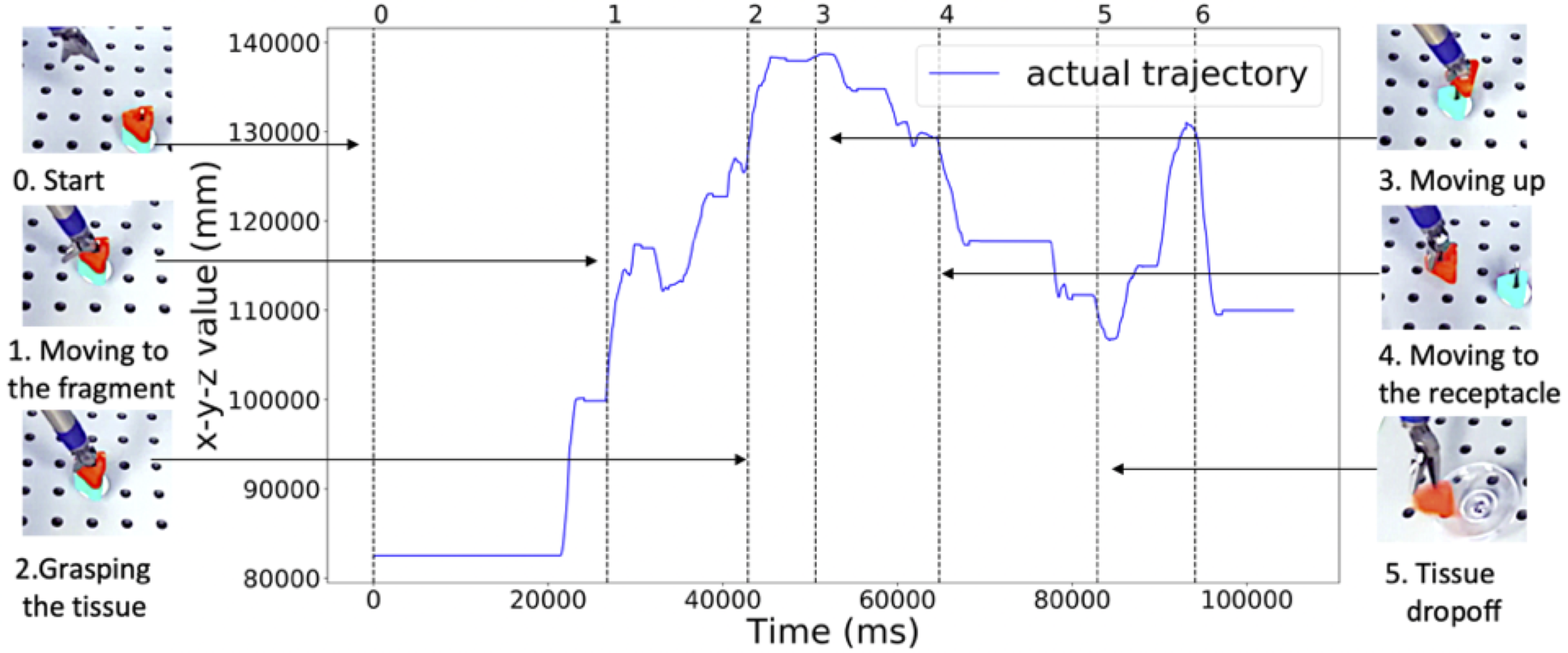

ISMR 2019: Context-aware Monitoring in Robotic Surgery

Samin Yasar presented our paper on Context-aware Monitoring in Robotic Surgery at the 2019 International Symposium on Medical Robotics (ISMR) in Atlanta, Georgia.

Robotic-assisted minimally invasive surgery (MIS) has enabled procedures with increased precision and dexterity, but surgical robots are still open loop and require surgeons to work with a tele-operation console providing only limited visual feedback. In this setting, mechanical failures, software faults, or human errors might lead to adverse events resulting in patient complications or fatalities. We argue that impending adverse events could be detected and mitigated by applying context-specific safety constraints on the motions of the robot. We present a context-aware safety monitoring system which segments a surgical task into subtasks using kinematics data and monitors safety constraints specific to each subtask. To test our hypothesis about context specificity of safety constraints, we analyze recorded demonstrations of dry-lab surgical tasks collected from the JIGSAWS database as well as from experiments we conducted on a Raven II surgical robot. Analysis of the trajectory data shows that each subtask of a given surgical procedure has consistent safety constraints across multiple demonstrations by different subjects. Our preliminary results show that violations of these safety constraints lead to unsafe events, and there is often sufficient time between the constraint violation and the safety-critical event to allow for a corrective action.
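To make the idea of subtask-specific constraints concrete, here is a minimal sketch. It assumes the trajectory has already been segmented into labeled subtasks, and the subtask names, the `grasper_angle` feature, and the bounds are all hypothetical values chosen for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    time: float           # seconds since the start of the demonstration
    subtask: str          # label produced by some (assumed) segmentation step
    grasper_angle: float  # one kinematic feature, in radians


# Hypothetical per-subtask bounds on the grasper angle.
BOUNDS = {
    "reach": (0.0, 1.2),
    "grasp": (0.0, 0.4),
    "transfer": (0.1, 0.4),
}


def find_violations(trajectory, bounds):
    """Return (time, subtask, value) for each sample outside its subtask's bounds."""
    violations = []
    for s in trajectory:
        low, high = bounds[s.subtask]
        if not (low <= s.grasper_angle <= high):
            violations.append((s.time, s.subtask, s.grasper_angle))
    return violations


trajectory = [
    Sample(0.0, "reach", 1.0),     # wide-open grasper is acceptable while reaching
    Sample(0.5, "grasp", 0.3),
    Sample(1.0, "transfer", 0.9),  # a similar angle is unsafe while carrying an object
    Sample(1.5, "transfer", 0.2),
]
print(find_violations(trajectory, BOUNDS))
```

The same grasper angle is fine in one subtask and a violation in another, which is the sense in which the constraints are context-specific.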

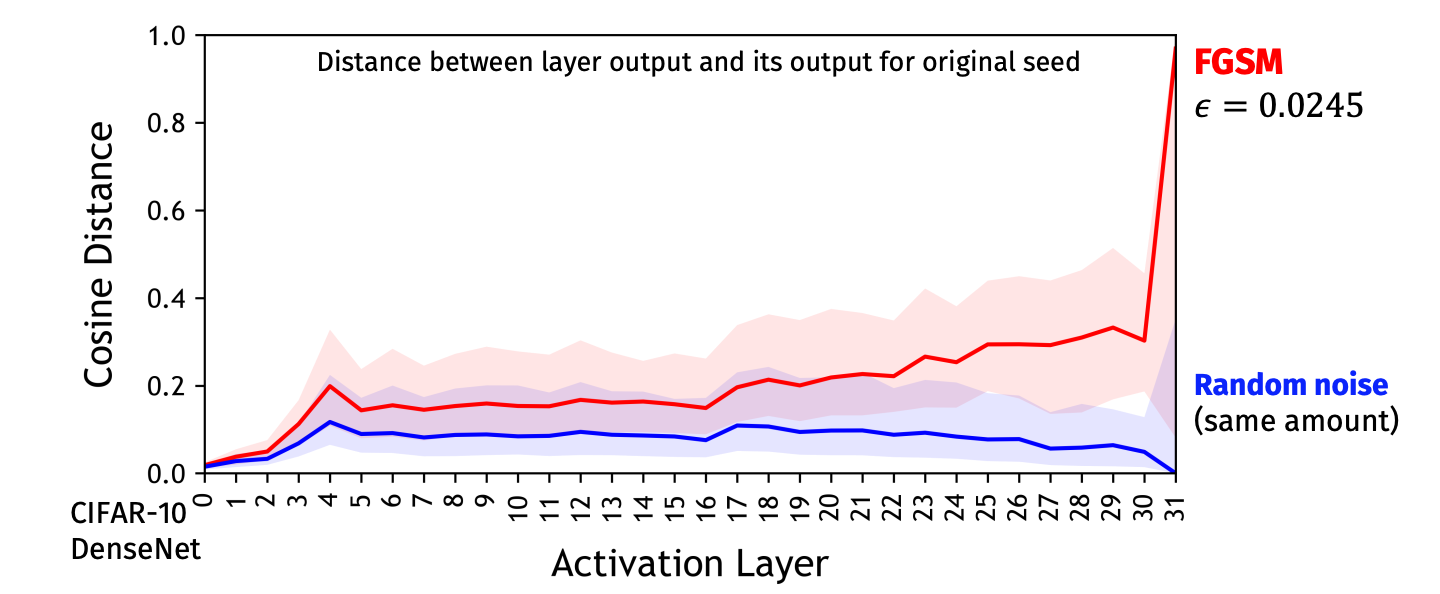

Deep Fools

New Electronics has an article that includes my Deep Learning and Security Workshop talk: Deep fools, 21 January 2019.

Below is a better version of the image, produced by Mainuddin Jonas, that they used (they took a screenshot from the talk video):

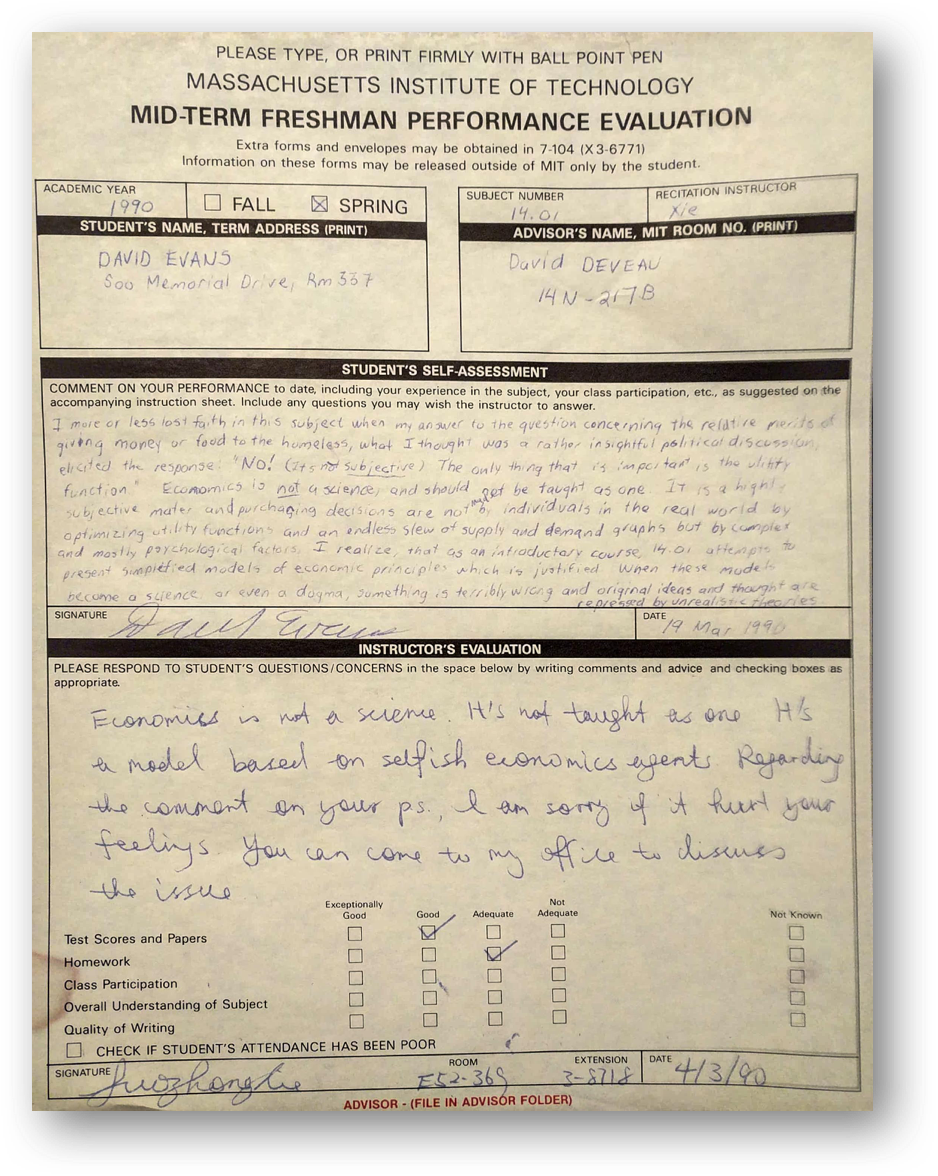

Markets, Mechanisms, Machines

My course for Spring 2019 is Markets, Mechanisms, Machines, cross-listed as cs4501/econ4559 and co-taught with Denis Nekipelov. The course will explore interesting connections between economics and computer science.

My qualifications for being listed as instructor for a 4000-level Economics course are limited to taking an introductory microeconomics course my first year as an undergraduate.

It’s good to finally get a chance to redeem myself for giving up on Economics 28 years ago!

ICLR 2019: Cost-Sensitive Robustness against Adversarial Examples

My paper with Xiao Zhang, Cost-Sensitive Robustness against Adversarial Examples, has been accepted to ICLR 2019.

Several recent works have developed methods for training classifiers that are certifiably robust against norm-bounded adversarial perturbations. However, these methods assume that all the adversarial transformations provide equal value for adversaries, which is seldom the case in real-world applications. We advocate for cost-sensitive robustness as the criteria for measuring the classifier’s performance for specific tasks. We encode the potential harm of different adversarial transformations in a cost matrix, and propose a general objective function to adapt the robust training method of Wong & Kolter (2018) to optimize for cost-sensitive robustness. Our experiments on simple MNIST and CIFAR10 models and a variety of cost matrices show that the proposed approach can produce models with substantially reduced cost-sensitive robust error, while maintaining classification accuracy.
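As a rough illustration of how a cost matrix changes what gets counted, the sketch below computes a simplified cost-sensitive robust error for a binary cost matrix. It assumes some certification procedure has already produced, for each test example, the set of classes an adversary could force within the perturbation bound (`reachable`, a hypothetical input). The function names and the toy data are mine, and this is a simplified evaluation metric, not the paper's training objective.

```python
def overall_robust_error(labels, reachable):
    """Fraction of examples that can be pushed into any class other than their label."""
    vulnerable = sum(
        1 for y, targets in zip(labels, reachable) if any(k != y for k in targets)
    )
    return vulnerable / len(labels)


def cost_sensitive_robust_error(labels, reachable, cost):
    """Fraction of cost-relevant examples that can be pushed into a costly class.

    cost[j][k] > 0 means transforming a seed of class j into class k matters
    to the adversary; seed classes whose row is all zeros are ignored.
    """
    relevant = 0
    vulnerable = 0
    for y, targets in zip(labels, reachable):
        if not any(cost[y]):
            continue  # no transformation from this seed class carries a cost
        relevant += 1
        if any(cost[y][k] > 0 for k in targets if k != y):
            vulnerable += 1
    return vulnerable / relevant if relevant else 0.0


# Toy setup: three classes, and only the transformation 0 -> 1 is costly.
cost = [
    [0, 1, 0],
    [0, 0, 0],
    [0, 0, 0],
]
labels = [0, 0, 1, 2]
reachable = [{0, 1}, {0, 2}, {0, 1}, {2}]

print(overall_robust_error(labels, reachable))
print(cost_sensitive_robust_error(labels, reachable, cost))
```

In this toy data, three of the four examples are vulnerable to some class change, but only one of the two class-0 seeds can be pushed into the costly class 1, so the two metrics can diverge substantially.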

A Pragmatic Introduction to Secure Multi-Party Computation

A Pragmatic Introduction to Secure Multi-Party Computation, co-authored with Vladimir Kolesnikov and Mike Rosulek, is now published by Now Publishers in their Foundations and Trends in Privacy and Security series.

You can download the book for free (we retain the copyright and are allowed to post an open version) from securecomputation.org, or buy a PDF version from the publisher for $260 (there is also a printed $99 version).

Secure multi-party computation (MPC) has evolved from a theoretical curiosity in the 1980s to a tool for building real systems today. Over the past decade, MPC has been one of the most active research areas in both theoretical and applied cryptography. This book introduces several important MPC protocols, and surveys methods for improving the efficiency of privacy-preserving applications built using MPC. Besides giving a broad overview of the field and the insights of the main constructions, we overview the most currently active areas of MPC research and aim to give readers insights into what problems are practically solvable using MPC today and how different threat models and assumptions impact the practicality of different approaches.

NeurIPS 2018: Distributed Learning without Distress

Bargav Jayaraman presented our work on privacy-preserving machine learning at the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018) in Montreal.

Distributed learning (sometimes known as federated learning) allows a group of independent data owners to collaboratively learn a model over their data sets without exposing their private data. Our approach combines differential privacy with secure multi-party computation to both protect the data during training and produce a model that provides privacy against inference attacks.
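The combination can be sketched with a toy, single-process simulation: each party additively secret-shares its local model parameters, the shares are summed so that only the aggregate is ever reconstructed, and Laplace noise is added to the average. Everything here (the field modulus, the fixed-point encoding, the function names, and the noise scale) is an assumption made for illustration. In a real protocol the noise would be generated inside the secure computation so that no party sees the un-noised aggregate, and the noise scale would be derived from a sensitivity analysis.

```python
import random
import secrets

PRIME = 2**61 - 1  # modulus for additive shares (assumed choice)
SCALE = 10**6      # fixed-point scaling factor (assumed choice)


def encode(x):
    """Map a float to a field element using fixed-point encoding."""
    return round(x * SCALE) % PRIME


def decode(v):
    """Map a field element back to a float, treating large values as negative."""
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value, n):
    """Split a field element into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def laplace(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    if scale == 0:
        return 0.0
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_average(local_params, noise_scale, rng):
    """Average the parties' parameter vectors via secret sharing, then add noise."""
    n = len(local_params)
    dim = len(local_params[0])
    result = []
    for j in range(dim):
        # Each party splits its j-th coordinate into one share per party.
        all_shares = [share(encode(p[j]), n) for p in local_params]
        # Party i adds up the shares it received; individual inputs stay hidden.
        partial = [sum(all_shares[owner][i] for owner in range(n)) % PRIME
                   for i in range(n)]
        total = sum(partial) % PRIME
        # Simulation shortcut: noise is added after decoding, not inside the MPC.
        result.append(decode(total) / n + laplace(noise_scale, rng))
    return result


local_params = [[1.0, -2.0], [3.0, 4.0], [2.0, 1.0]]
print(private_average(local_params, noise_scale=0.05, rng=random.Random()))
```

Adding noise once to the aggregate, rather than having every party perturb its own parameters, is the design choice this sketch is meant to highlight.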

Can Machine Learning Ever Be Trustworthy?

I gave the Booz Allen Hamilton Distinguished Colloquium at the University of Maryland, titled Can Machine Learning Ever Be Trustworthy?

[Video](https://vid.umd.edu/detsmediasite/Play/e8009558850944bfb2cac477f8d741711d?catalog=74740199-303c-49a2-9025-2dee0a195650) · [SpeakerDeck](https://speakerdeck.com/evansuva/can-machine-learning-ever-be-trustworthy)

Abstract: Machine learning has produced extraordinary results over the past few years, and machine learning systems are rapidly being deployed for critical tasks, even in adversarial environments. This talk will survey some of the reasons building trustworthy machine learning systems is inherently impossible, and dive into some recent research on adversarial examples. Adversarial examples are inputs crafted deliberately to fool a machine learning system, often by making small, but targeted perturbations, starting from a natural seed example. Over the past few years, there has been an explosion of research in adversarial examples but we are only beginning to understand their mysteries and just taking the first steps towards principled and effective defenses. The general problem of adversarial examples, however, has been at the core of information security for thousands of years. In this talk, I'll look at some of the long-forgotten lessons from that quest, unravel the huge gulf between theory and practice in adversarial machine learning, and speculate on paths toward trustworthy machine learning systems.