CODASPY 2021 Keynote: When Models Learn Too Much

Here are the slides for my talk at the 11th ACM Conference on Data and Application Security and Privacy:

The talk includes Bargav Jayaraman’s work (with Katherine Knipmeyer, Lingxiao Wang, and Quanquan Gu) on evaluating privacy in machine learning, as well as more recent work by Anshuman Suri on property inference attacks and by Bargav on attribute inference and imputation:

- Merlin, Morgan, and the Importance of Thresholds and Priors

- Evaluating Differentially Private Machine Learning in Practice

“When models learn too much.” Dr. David Evans @UdacityDave of University of Virginia gave a keynote talk on different inference risks for machine learning models this morning at #codaspy21 pic.twitter.com/KVgFoUA6sa

Virginia Consumer Data Protection Act

Josephine Lamp presented on the new data privacy law that is pending in Virginia (it still needs a few steps, including the expected signing by the governor, but it is likely to go into effect on Jan 1, 2023): Slides (PDF)

This article provides a summary of the law: Virginia Passes Consumer Privacy Law; Other States May Follow, National Law Review, 17 February 2021.

The law itself is here: SB 1392: Consumer Data Protection Act

Microsoft Security Data Science Colloquium: Inference Privacy in Theory and Practice

Here are the slides for my talk at the Microsoft Security Data Science Colloquium:

When Models Learn Too Much: Inference Privacy in Theory and Practice [PDF]

The talk is mostly about Bargav Jayaraman’s work (with Katherine Knipmeyer, Lingxiao Wang, and Quanquan Gu) on evaluating privacy:

- Merlin, Morgan, and the Importance of Thresholds and Priors

- Evaluating Differentially Private Machine Learning in Practice

Merlin, Morgan, and the Importance of Thresholds and Priors

Post by Katherine Knipmeyer

Machine learning poses a substantial risk that adversaries will be able to discover information that the model is not intended to reveal. One set of methods by which adversaries can learn this sensitive information, known broadly as membership inference attacks, predicts whether or not a query record belongs to the training set. A basic membership inference attack involves an attacker with a given record and black-box access to a model who tries to determine whether that record was a member of the model’s training set.
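To make the basic attack concrete, here is a minimal Python sketch of one common instantiation, a loss-threshold attack: the attacker queries the model on the record and guesses "member" when the loss is at most a threshold. Everything in it is an illustrative assumption rather than something taken from the post: the synthetic data, the deliberately overfit `MemorizingModel`, the helper names, and the threshold value.

```python
import random

def make_records(n, rng):
    """Synthetic (x, y) records where y is a noisy linear function of x (toy data)."""
    records = []
    for _ in range(n):
        x = rng.uniform(0.0, 10.0)
        records.append((x, 2.0 * x + rng.gauss(0.0, 1.0)))
    return records

class MemorizingModel:
    """Hypothetical target model: a 1-nearest-neighbor regressor that overfits by design."""
    def __init__(self, train):
        self.train = list(train)

    def predict(self, x):
        # Black-box interface: the attacker only ever calls predict().
        nearest = min(self.train, key=lambda r: abs(r[0] - x))
        return nearest[1]

def squared_loss(model, record):
    x, y = record
    return (model.predict(x) - y) ** 2

def infer_membership(model, record, threshold):
    """Loss-threshold attack: guess 'member' when the loss is at most the threshold."""
    return squared_loss(model, record) <= threshold

rng = random.Random(0)
members = make_records(200, rng)       # records used to build the model
non_members = make_records(200, rng)   # fresh records from the same distribution
model = MemorizingModel(members)

threshold = 0.01  # illustrative value; choosing it well is a central difficulty
tpr = sum(infer_membership(model, r, threshold) for r in members) / len(members)
fpr = sum(infer_membership(model, r, threshold) for r in non_members) / len(non_members)
advantage = tpr - fpr  # true positive rate minus false positive rate
print(f"TPR={tpr:.2f} FPR={fpr:.2f} advantage={advantage:.2f}")
```

Because the toy model memorizes its training set, every member has zero loss and is flagged, while only a small fraction of non-members fall under the threshold. Real models leak far less cleanly, so the attack's success depends heavily on how the threshold is chosen.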

White House Visit

I had a chance to visit the White House for a Roundtable on Accelerating Responsible Sharing of Federal Data. The meeting was held under “Chatham House Rules”, so I won’t mention the other participants here.

The meeting was held in the Roosevelt Room of the White House. We entered through the visitor’s side entrance. After a security gate (where you put your phone in a lockbox, so no pictures inside) with a TV blaring Fox News, there is a pleasant lobby for waiting, and then an entrance right into the Roosevelt Room. (We didn’t get to see the entrance in the opposite corner of the room, which is just a hallway across from the Oval Office.)

Research Symposium Posters

Five students from our group presented posters at the department’s Fall Research Symposium:

Anshuman Suri's Overview Talk

FOSAD Trustworthy Machine Learning Mini-Course

I taught a mini-course on Trustworthy Machine Learning at the 19th International School on Foundations of Security Analysis and Design in Bertinoro, Italy.

Slides from my three (two-hour) lectures are posted below, along with some links to relevant papers and resources.

Class 1: Introduction/Attacks

The PDF malware evasion attack is described in this paper:

Weilin Xu, Yanjun Qi, and David Evans. Automatically Evading Classifiers: A Case Study on PDF Malware Classifiers. Network and Distributed System Security Symposium (NDSS). San Diego, CA. 21-24 February 2016. [PDF] [EvadeML.org]

Class 2: Defenses

This paper describes the feature squeezing framework:

Evaluating Differentially Private Machine Learning in Practice

(Cross-post by Bargav Jayaraman)

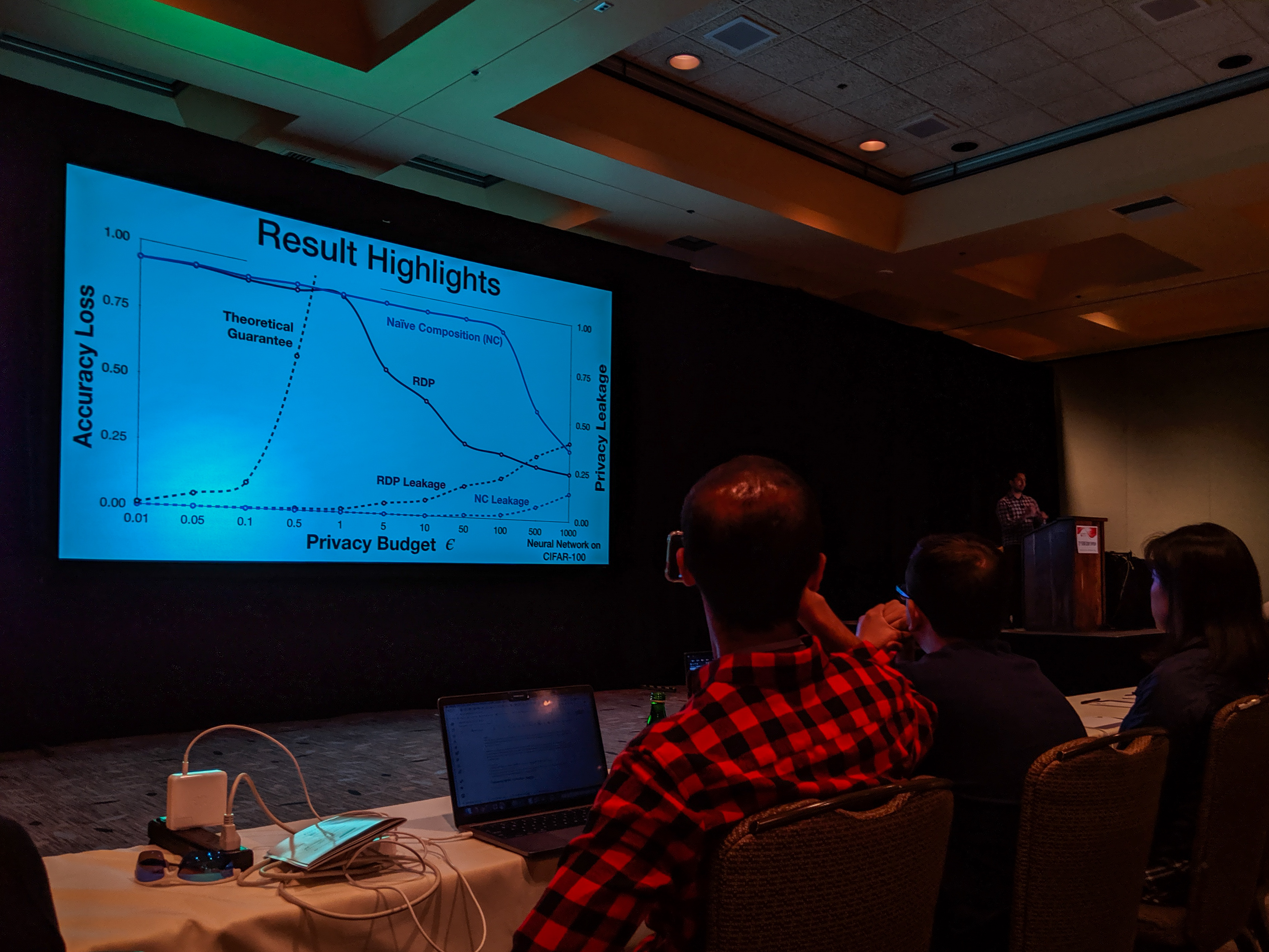

With the recent advances in composition of differentially private mechanisms, the research community has been able to achieve meaningful deep learning with privacy budgets in single digits. Rényi differential privacy (RDP) is one notion that provides tighter composition, and it is widely used because of its implementation in TensorFlow Privacy (recently, Gaussian differential privacy (GDP) has shown a tighter analysis for low privacy budgets, but it was not yet available when we did this work). But the central question that remains to be answered is: how private are these methods in practice?
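As a rough illustration of why tighter composition matters, the following Python sketch compares naive composition with RDP-based accounting for repeated Gaussian mechanisms. It is a simplified sketch under stated assumptions, not the accountant used in TensorFlow Privacy: it assumes L2 sensitivity 1, ignores the subsampling amplification that DP-SGD analyses rely on, and uses illustrative values for `sigma`, `steps`, and `delta`. The function names are hypothetical.

```python
import math

def naive_epsilon(sigma, steps, delta_step):
    """Naive composition for Gaussian mechanisms with L2 sensitivity 1.

    Uses the classical bound sigma >= sqrt(2 ln(1.25/delta)) / epsilon
    (valid for epsilon < 1) to get a per-step epsilon, then sums over steps.
    The total delta under naive composition is steps * delta_step.
    """
    eps_step = math.sqrt(2.0 * math.log(1.25 / delta_step)) / sigma
    if eps_step >= 1.0:
        raise ValueError("classical Gaussian bound requires per-step epsilon < 1")
    return steps * eps_step

def rdp_epsilon(sigma, steps, delta, orders=range(2, 256)):
    """RDP accounting for the same mechanisms (no subsampling assumed).

    A Gaussian mechanism with sensitivity 1 satisfies (alpha, alpha / (2 sigma^2))-RDP,
    RDP guarantees add up across steps, and (alpha, rho)-RDP implies
    (rho + ln(1/delta) / (alpha - 1), delta)-DP. We take the best order alpha.
    """
    best = math.inf
    for alpha in orders:
        rho = steps * alpha / (2.0 * sigma ** 2)
        best = min(best, rho + math.log(1.0 / delta) / (alpha - 1))
    return best

sigma, steps = 10.0, 100      # illustrative noise scale and number of steps
delta_step = 1e-5             # per-step delta for naive composition
total_delta = steps * delta_step

naive_eps = naive_epsilon(sigma, steps, delta_step)
rdp_eps = rdp_epsilon(sigma, steps, total_delta)
print(f"naive: {naive_eps:.2f}, RDP: {rdp_eps:.2f} (both at delta={total_delta:g})")
```

With these illustrative values, naive composition gives a total privacy budget of about 48, while the RDP analysis gives about 4.2 at the same overall delta, which is the kind of gap that makes single-digit budgets attainable.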

USENIX Security Symposium 2019

Bargav Jayaraman presented our paper on Evaluating Differentially Private Machine Learning in Practice at the 28th USENIX Security Symposium in Santa Clara, California.

Summary by Lea Kissner:

Hey it's the results! pic.twitter.com/ru1FbkESho (Lea Kissner, @LeaKissner, August 17, 2019)

Also, great to see several UVA folks at the conference, including:

- Sam Havron (BSCS 2017, now a PhD student at Cornell) presented a paper on the work he and his colleagues have done on computer security for victims of intimate partner violence.

- Serge Egelman (BSCS 2004) was an author on the paper 50 Ways to Leak Your Data: An Exploration of Apps’ Circumvention of the Android Permissions System (which was recognized by a Distinguished Paper Award). His paper in SOUPS on Privacy and Security Threat Models and Mitigation Strategies of Older Adults was highlighted in Alex Stamos’ excellent talk.

Brink Essay: AI Systems Are Complex and Fragile. Here Are Four Key Risks to Understand.

Brink News (a publication of The Atlantic) published my essay on the risks of deploying AI systems.

Artificial intelligence technologies have the potential to transform society in positive and powerful ways. Recent studies have shown computing systems that can outperform humans at numerous once-challenging tasks, ranging from performing medical diagnoses and reviewing legal contracts to playing Go and recognizing human emotions.

Despite these successes, AI systems are fundamentally fragile — and the ways they can fail are poorly understood. When AI systems are deployed to make important decisions that impact human safety and well-being, the potential risks of abuse and misbehavior are high and need to be carefully considered and mitigated.