SoK: Pitfalls in Evaluating Black-Box Attacks

Post by Anshuman Suri and Fnu Suya

Much research has studied black-box attacks on image classifiers, where adversaries generate adversarial examples against unknown target models without having access to their internal information. Our analysis of over 164 attacks (published in 102 major security and machine learning conferences) shows how these works make different assumptions about the adversary’s knowledge.

The current literature lacks cohesive organization centered around the threat model. Our SoK paper (to appear at IEEE SaTML 2024) introduces a taxonomy for systematizing these attacks and demonstrates the importance of careful evaluations that consider adversary resources and threat models.

NeurIPS 2023: What Distributions are Robust to Poisoning Attacks?

Post by Fnu Suya

Data poisoning attacks are recognized as a top concern in the industry [1]. We focus on conventional indiscriminate data poisoning attacks, where an adversary injects a few crafted examples into the training data with the goal of increasing the test error of the induced model. Despite recent advances, indiscriminate poisoning attacks on large neural networks remain challenging [2]. In this work (to be presented at NeurIPS 2023), we revisit the vulnerabilities of more extensively studied linear models under indiscriminate poisoning attacks.
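To make the threat model concrete, here is a toy sketch of an indiscriminate poisoning attack on a one-dimensional linear (nearest-centroid) classifier. It is not the method from the paper: the data, the classifier, and the two crafted points are all made-up assumptions chosen so the effect is easy to verify by hand.

```python
def fit_centroids(points):
    """Fit a 1-D nearest-centroid classifier: one mean per class."""
    neg = [x for x, y in points if y == 0]
    pos = [x for x, y in points if y == 1]
    return sum(neg) / len(neg), sum(pos) / len(pos)


def predict(centroids, x):
    """Predict the class whose centroid is closest to x."""
    mean_neg, mean_pos = centroids
    return 1 if abs(x - mean_pos) < abs(x - mean_neg) else 0


def error_rate(centroids, points):
    """Fraction of points that the classifier labels incorrectly."""
    wrong = sum(1 for x, y in points if predict(centroids, x) != y)
    return wrong / len(points)


# Hypothetical clean training set and test set (illustrative values only).
clean_train = [(-2.0, 0), (-1.5, 0), (-1.0, 0), (-0.5, 0),
               (0.5, 1), (1.0, 1), (1.5, 1), (2.0, 1)]
test_set = [(-1.8, 0), (-1.2, 0), (-0.6, 0), (-0.2, 0),
            (0.2, 1), (0.6, 1), (1.2, 1), (1.8, 1)]

# Two crafted points: far to the right, but labeled as class 0.
# They drag the class-0 centroid past the class-1 centroid.
poison = [(10.0, 0), (10.0, 0)]

clean_error = error_rate(fit_centroids(clean_train), test_set)
poisoned_error = error_rate(fit_centroids(clean_train + poison), test_set)

print(f"test error, clean training set:    {clean_error:.2f}")
print(f"test error, poisoned training set: {poisoned_error:.2f}")
```

In this toy setting, the two injected points are enough to move the model from classifying every test point correctly to misclassifying all of class 0. A real attack would typically have to satisfy constraints on the poisoning points that this sketch ignores.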

Adjectives Can Reveal Gender Biases Within NLP Models

Post by Jason Briegel and Hannah Chen

Because NLP models are trained with human corpora (and now, increasingly on text generated by other NLP models that were originally trained on human language), they are prone to inheriting common human stereotypes and biases. This is problematic, because with their growing prominence they may further propagate these stereotypes (Sun et al., 2019). For example, interest is growing in mitigating bias in the field of machine translation, where systems such as Google Translate were observed to default to translating gender-neutral pronouns as male pronouns, even with feminine cues (Savoldi et al., 2021).

Congratulations, Dr. Suya!

Congratulations to Fnu Suya for successfully defending his PhD thesis!

Suya will join the University of Maryland as an MC2 Postdoctoral Fellow at the Maryland Cybersecurity Center this fall.

Current machine learning models require large amounts of labeled training data, which are often collected from untrusted sources. Models trained on these potentially manipulated data points are prone to data poisoning attacks. My research aims to gain a deeper understanding of the limits of two types of data poisoning attacks: indiscriminate poisoning attacks, where the attacker aims to increase the test error on the entire dataset; and subpopulation poisoning attacks, where the attacker aims to increase the test error on a defined subset of the distribution. We first present an empirical poisoning attack that encodes the attack objectives into target models and then generates poisoning points that induce the target models (and hence the encoded objectives) with provable convergence. This attack achieves state-of-the-art performance for a diverse set of attack objectives and quantifies a lower bound on the performance of the best possible poisoning attacks. More broadly, because the attack guarantees convergence to the target model that encodes the desired attack objective, our attack can also be applied to objectives related to other trustworthiness aspects (e.g., privacy, fairness) of machine learning.

SoK: Let the Privacy Games Begin! A Unified Treatment of Data Inference Privacy in Machine Learning

Our paper on the use of cryptographic-style games to model inference privacy is published in the IEEE Symposium on Security and Privacy (Oakland):

Giovanni Cherubin, , Boris Köpf, Andrew Paverd, Anshuman Suri, Shruti Tople, and Santiago Zanella-Béguelin. SoK: Let the Privacy Games Begin! A Unified Treatment of Data Inference Privacy in Machine Learning. IEEE Symposium on Security and Privacy, 2023. [Arxiv]

Tired of diverse definitions of machine learning privacy risks? Curious about game-based definitions? In our paper, we present privacy games as a tool for describing and analyzing privacy risks in machine learning. Join us on May 22nd, 11 AM @IEEESSP '23 https://t.co/NbRuTmHyd2 pic.twitter.com/CIzsT7UY4b

CVPR 2023: Manipulating Transfer Learning for Property Inference

Manipulating Transfer Learning for Property Inference

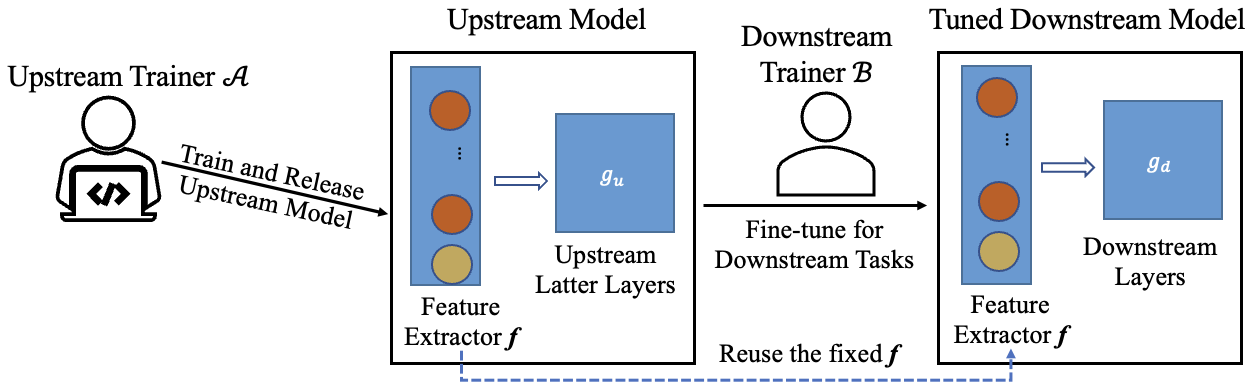

Transfer learning is a popular method to train deep learning models efficiently. By reusing parameters from upstream pre-trained models, the downstream trainer can use fewer computing resources to train downstream models, compared to training models from scratch.

The figure below shows the typical process of transfer learning for vision tasks:

However, the nature of transfer learning can be exploited by a malicious upstream trainer, leading to severe risks to the downstream trainer.
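The parameter reuse described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the setup used in the paper: the "pre-trained" weights are made-up values, the upstream layer is kept frozen (fine-tuning it is another common option), and the downstream model is a small logistic-regression head. The point to notice is that the downstream trainer only updates its own head and takes the upstream parameters on trust.

```python
import math

# Hypothetical "pre-trained" upstream parameters (made-up values).
UPSTREAM_WEIGHTS = [[1.0, -1.0], [-1.0, 1.0]]


def upstream_features(x):
    """Frozen feature extractor: ReLU(W x), with W reused as-is."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in UPSTREAM_WEIGHTS]


def predict(head, x):
    """Downstream head: logistic regression on the frozen features."""
    feats = upstream_features(x)
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], feats))
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(head, data):
    """Average binary cross-entropy of the head on the given data."""
    total = 0.0
    for x, y in data:
        p = predict(head, x)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def train_head(data, epochs=100, lr=0.5):
    """Train only the downstream head with plain SGD; upstream stays fixed."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = upstream_features(x)
            err = predict(head, x) - y  # gradient of the loss w.r.t. the logit
            head["weights"] = [w - lr * err * f
                               for w, f in zip(head["weights"], feats)]
            head["bias"] -= lr * err
    return head


# Hypothetical downstream task with four labeled examples.
downstream_data = [([2.0, 0.0], 1), ([1.5, 0.5], 1),
                   ([0.0, 2.0], 0), ([0.5, 1.5], 0)]

initial_loss = log_loss({"weights": [0.0, 0.0], "bias": 0.0}, downstream_data)
head = train_head(downstream_data)
final_loss = log_loss(head, downstream_data)
print(f"loss before training the head: {initial_loss:.3f}")
print(f"loss after training the head:  {final_loss:.3f}")
```

Because everything the head sees passes through `upstream_features`, whoever produced `UPSTREAM_WEIGHTS` shapes what the downstream model can learn, which is what makes the upstream trainer a meaningful adversary in this setting.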

MICO Challenge in Membership Inference

Anshuman Suri wrote up an interesting post on his experience with the MICO Challenge, a membership inference competition that was part of SaTML. Anshuman placed second in the competition (on the CIFAR data set), where the metric is the true positive rate at a 0.1 false positive rate, measured over a set of models (some trained using differential privacy and some without).

Anshuman’s post describes the methods he used and his experience in the competition: My submission to the MICO Challenge.
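For readers unfamiliar with the metric, here is a minimal sketch of how a true positive rate at a fixed false positive rate can be computed from attack scores. The function name, the tie-handling choice (predict "member" when the score is at or above the threshold), and the example scores are assumptions for illustration; this is not the competition's official scoring code.

```python
def tpr_at_fpr(scores, is_member, max_fpr=0.1):
    """Best true positive rate over thresholds whose FPR is at most max_fpr.

    scores:    attack confidence that each example is a training member
    is_member: ground-truth membership flags, aligned with scores
    """
    members = [s for s, m in zip(scores, is_member) if m]
    non_members = [s for s, m in zip(scores, is_member) if not m]

    best_tpr = 0.0
    for threshold in sorted(set(scores)):
        # An example is predicted "member" if its score reaches the threshold.
        fpr = sum(s >= threshold for s in non_members) / len(non_members)
        if fpr <= max_fpr:
            tpr = sum(s >= threshold for s in members) / len(members)
            best_tpr = max(best_tpr, tpr)
    return best_tpr


# Made-up scores: ten non-members followed by five members.
example_scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.45, 0.90,
                  0.50, 0.60, 0.70, 0.80, 0.30]
example_labels = [False] * 10 + [True] * 5

print(tpr_at_fpr(example_scores, example_labels, max_fpr=0.1))
```

With these scores, the most permissive threshold that still keeps the false positive rate at 0.1 admits only one non-member (the one scored 0.90) and catches four of the five members. Reporting the rate at a low false positive rate rewards attacks that are confident and correct on some members, rather than attacks that are only slightly better than chance on average.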

Voice of America interview on ChatGPT

I was interviewed for a Voice of America story (in Russian) on the impact of ChatGPT and similar tools.

Full story: https://youtu.be/dFuunAFX9y4

Uh-oh, there's a new way to poison code models

Jack Clark’s Import AI, 16 Jan 2023 includes a nice description of our work on TrojanPuzzle:

####################################################

Uh-oh, there's a new way to poison code models - and it's really hard to detect:

…TROJANPUZZLE is a clever way to trick your code model into betraying you - if you can poison the underlying dataset…

Researchers with the University of California, Santa Barbara, Microsoft Corporation, and the University of Virginia have come up with some clever, subtle ways to poison the datasets used to train code models. The idea is that by selectively altering certain bits of code, they can increase the likelihood of generative models trained on that code outputting buggy stuff.

Trojan Puzzle attack trains AI assistants into suggesting malicious code

Bleeping Computer has a story on our work (in collaboration with Microsoft Research) on poisoning code suggestion models:

Trojan Puzzle attack trains AI assistants into suggesting malicious code

By Bill Toulas

Researchers at the universities of California, Virginia, and Microsoft have devised a new poisoning attack that could trick AI-based coding assistants into suggesting dangerous code.

Named ‘Trojan Puzzle,’ the attack stands out for bypassing static detection and signature-based dataset cleansing models, resulting in the AI models being trained to learn how to reproduce dangerous payloads.