SoK: Let the Privacy Games Begin! A Unified Treatment of Data Inference Privacy in Machine Learning

Our paper on the use of cryptographic-style games to model inference privacy has been published at the IEEE Symposium on Security and Privacy (Oakland):

Giovanni Cherubin, , Boris Köpf, Andrew Paverd, Anshuman Suri, Shruti Tople, and Santiago Zanella-Béguelin. SoK: Let the Privacy Games Begin! A Unified Treatment of Data Inference Privacy in Machine Learning. IEEE Symposium on Security and Privacy, 2023. [Arxiv]

Tired of diverse definitions of machine learning privacy risks? Curious about game-based definitions? In our paper, we present privacy games as a tool for describing and analyzing privacy risks in machine learning. Join us on May 22nd, 11 AM @IEEESSP '23 https://t.co/NbRuTmHyd2 pic.twitter.com/CIzsT7UY4b

CVPR 2023: Manipulating Transfer Learning for Property Inference

Manipulating Transfer Learning for Property Inference

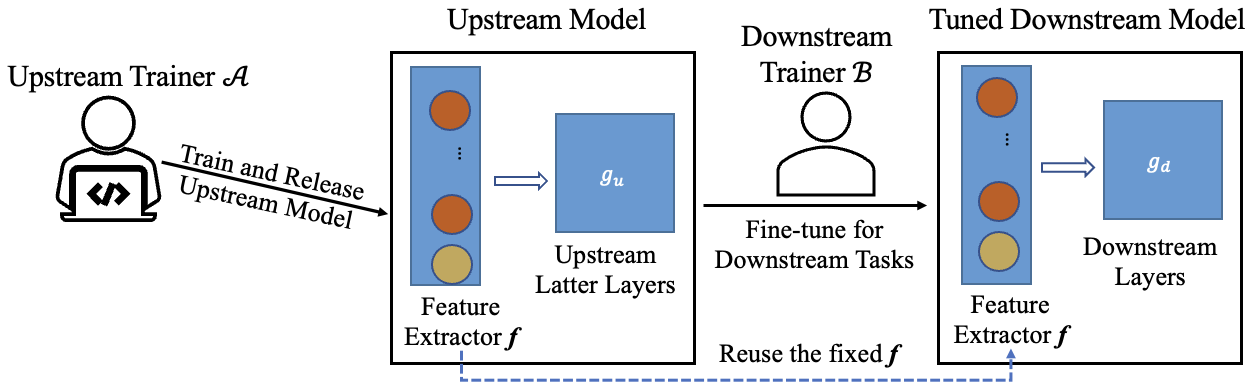

Transfer learning is a popular method to train deep learning models efficiently. By reusing parameters from upstream pre-trained models, the downstream trainer can use fewer computing resources to train downstream models, compared to training models from scratch.

The figure below shows the typical process of transfer learning for vision tasks:

However, the nature of transfer learning can be exploited by a malicious upstream trainer, leading to severe risks to the downstream trainer.

MICO Challenge in Membership Inference

Anshuman Suri wrote up an interesting post on his experience with the MICO Challenge, a membership inference competition that was part of SaTML. Anshuman placed second in the competition (on the CIFAR data set), where the metric is the highest true positive rate at a false positive rate of 0.1 over a set of models (some trained using differential privacy and some without).

Anshuman’s post describes the methods he used and his experience in the competition: My submission to the MICO Challenge.
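To make the metric concrete, here is a minimal sketch of how a true positive rate at a fixed false positive rate could be computed from attack scores for a single model. The function name `tpr_at_fpr` and the toy scores are assumptions for illustration; this is not the competition's scoring code.

```python
import numpy as np

def tpr_at_fpr(scores, is_member, target_fpr=0.1):
    """Highest TPR reachable by thresholding scores while keeping FPR <= target_fpr.

    scores: higher means "more likely a training-set member".
    is_member: ground-truth membership (True for members).
    Assumes at least one member and one non-member are present.
    """
    scores = np.asarray(scores, dtype=float)
    is_member = np.asarray(is_member, dtype=bool)
    best_tpr = 0.0
    # Candidate thresholds: every distinct score, plus +inf (predict no members).
    for threshold in np.append(np.unique(scores), np.inf):
        predicted = scores >= threshold
        fpr = np.mean(predicted[~is_member])  # fraction of non-members flagged
        tpr = np.mean(predicted[is_member])   # fraction of members flagged
        if fpr <= target_fpr + 1e-12:         # small tolerance for float rounding
            best_tpr = max(best_tpr, tpr)
    return best_tpr

# Toy example: 4 members and 10 non-members with made-up scores.
member_scores = [0.95, 0.85, 0.5, 0.2]
non_member_scores = [0.9, 0.45, 0.4, 0.35, 0.3, 0.25, 0.15, 0.1, 0.05, 0.01]
toy_scores = member_scores + non_member_scores
toy_labels = [True] * 4 + [False] * 10
toy_result = tpr_at_fpr(toy_scores, toy_labels, target_fpr=0.1)
```

In the toy data, a false positive rate of 0.1 allows one of the ten non-members to be flagged, so the threshold can drop as far as 0.5 and capture three of the four members.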

Voice of America interview on ChatGPT

I was interviewed for a Voice of America story (in Russian) on the impact of ChatGPT and similar tools.

Full story: https://youtu.be/dFuunAFX9y4

Uh-oh, there's a new way to poison code models

Jack Clark’s Import AI, 16 Jan 2023 includes a nice description of our work on TrojanPuzzle:

####################################################

Uh-oh, there's a new way to poison code models - and it's really hard to detect:

…TROJANPUZZLE is a clever way to trick your code model into betraying you - if you can poison the underlying dataset…

Researchers with the University of California, Santa Barbara, Microsoft Corporation, and the University of Virginia have come up with some clever, subtle ways to poison the datasets used to train code models. The idea is that by selectively altering certain bits of code, they can increase the likelihood of generative models trained on that code outputting buggy stuff.

Trojan Puzzle attack trains AI assistants into suggesting malicious code

Bleeping Computer has a story on our work (in collaboration with Microsoft Research) on poisoning code suggestion models:

Trojan Puzzle attack trains AI assistants into suggesting malicious code

By Bill Toulas

Researchers at the universities of California, Virginia, and Microsoft have devised a new poisoning attack that could trick AI-based coding assistants into suggesting dangerous code.

Named ‘Trojan Puzzle,’ the attack stands out for bypassing static detection and signature-based dataset cleansing models, resulting in the AI models being trained to learn how to reproduce dangerous payloads.

Best Submission Award at VISxAI 2022

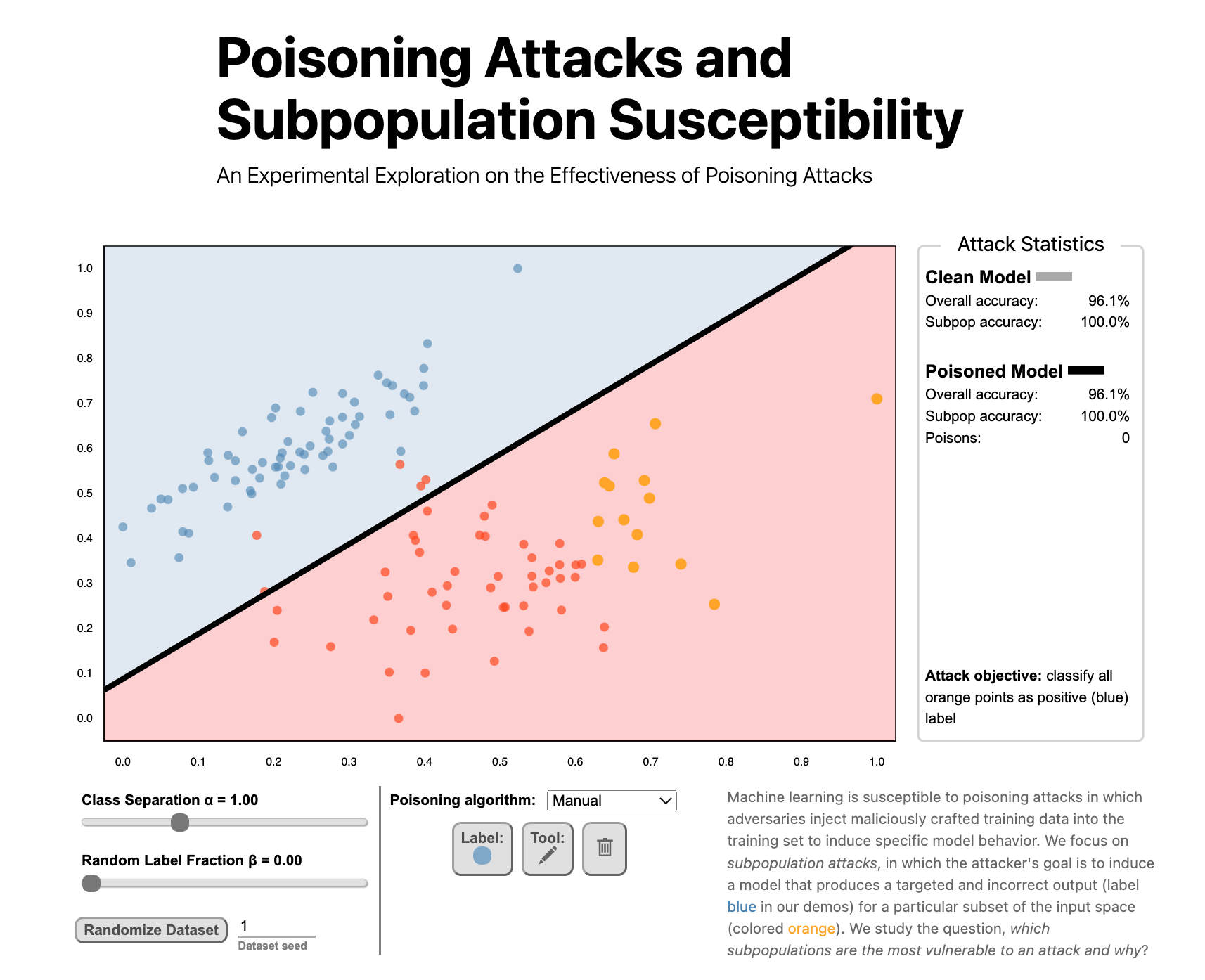

Poisoning Attacks and Subpopulation Susceptibility by Evan Rose, Fnu Suya, and David Evans won the Best Submission Award at the 5th Workshop on Visualization for AI Explainability.

Undergraduate student Evan Rose led the work and presented it at VISxAI in Oklahoma City on 17 October 2022.

Congratulations to #VISxAI's Best Submission Awards:

🏆 K-Means Clustering: An Explorable Explainer by @yizhe_ang https://t.co/BULW33WPzo

🏆 Poisoning Attacks and Subpopulation Susceptibility by Evan Rose, @suyafnu, and @UdacityDave https://t.co/Z12D3PvfXu #ieeevis

— VISxAI (@VISxAI) October 17, 2022

Next up is best submission award 🏅 winner, "Poisoning Attacks and Subpopulation Susceptibility" by Evan Rose, @suyafnu, and @UdacityDave.

Tune in to learn why some data subpopulations are more vulnerable to attacks than others! https://t.co/Z12D3PvfXu #ieeevis #VISxAI pic.twitter.com/Gm2JBpWQSP

Visualizing Poisoning

How does a poisoning attack work and why are some groups more susceptible to being victimized by a poisoning attack?

We’ve posted work that uses interactive visualizations to help explain how poisoning attacks work:

Poisoning Attacks and Subpopulation Susceptibility

An Experimental Exploration on the Effectiveness of Poisoning Attacks

Evan Rose, Fnu Suya, and David Evans

Follow the link to try the interactive version!

Machine learning is susceptible to poisoning attacks in which adversaries inject maliciously crafted training data into the training set to induce specific model behavior. We focus on subpopulation attacks, in which the attacker’s goal is to induce a model that produces a targeted and incorrect output (label blue in our demos) for a particular subset of the input space (colored orange). We study the question, which subpopulations are the most vulnerable to an attack and why?
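As a rough illustration of what "injecting crafted training data" against a subpopulation can look like, the sketch below builds a poisoned training set using simple label flipping: it copies points from the targeted subpopulation and attaches the attacker's target label. This is a deliberately basic stand-in, not the attack studied in the work above, and the function name `poison_subpopulation` and its parameters are hypothetical.

```python
import numpy as np

def poison_subpopulation(X, y, in_subpop, target_label, n_poison, seed=0):
    """Return a training set with n_poison label-flipped points appended.

    X, y: clean training features (n, d) and labels (n,).
    in_subpop: boolean mask of length n marking the targeted subpopulation
               (the "orange" region in the demos).
    target_label: label the attacker wants the model to output there
                  (the "blue" label in the demos).
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    candidates = np.flatnonzero(in_subpop)
    if candidates.size == 0:
        raise ValueError("subpopulation is empty")
    # Sample (with replacement) clean points that lie inside the subpopulation.
    picked = rng.choice(candidates, size=n_poison, replace=True)
    X_poison = X[picked]
    # Attach the attacker's target label to every sampled point.
    y_poison = np.full(n_poison, target_label, dtype=y.dtype)
    return np.vstack([X, X_poison]), np.concatenate([y, y_poison])
```

Training any classifier on the returned arrays instead of the clean ones then shows how much the subpopulation's predictions shift as `n_poison` grows, which is one way to compare how susceptible different subpopulations are.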

Congratulations, Dr. Zhang!

Congratulations to Xiao Zhang for successfully defending his PhD thesis!

Dr. Zhang and his PhD committee: Somesh Jha (University of Wisconsin), David Evans, Tom Fletcher; Tianxi Li (UVA Statistics), David Wu (UT Austin), Mohammad Mahmoody; Xiao Zhang.

Xiao will join the CISPA Helmholtz Center for Information Security in Saarbrücken, Germany this fall as a tenure-track faculty member.

From Characterizing Intrinsic Robustness to Adversarially Robust Machine Learning

The prevalence of adversarial examples raises questions about the reliability of machine learning systems, especially for their deployment in critical applications. Numerous defense mechanisms have been proposed that aim to improve a machine learning system’s robustness in the presence of adversarial examples. However, none of these methods are able to produce satisfactorily robust models, even for simple classification tasks on benchmarks. In addition to empirical attempts to build robust models, recent studies have identified intrinsic limitations for robust learning against adversarial examples. My research aims to gain a deeper understanding of why machine learning models fail in the presence of adversaries and design ways to build better robust systems. In this dissertation, I develop a concentration estimation framework to characterize the intrinsic limits of robustness for typical classification tasks of interest. The proposed framework leads to the discovery that compared with the concentration of measure which was previously argued to be an important factor, the existence of uncertain inputs may explain more fundamentally the vulnerability of state-of-the-art defenses. Moreover, to further advance our understanding of adversarial examples, I introduce a notion of representation robustness based on mutual information, which is shown to be related to an intrinsic limit of model robustness for downstream classification tasks. Finally in this dissertation, I advocate for a need to rethink the current design goal of robustness and shed light on ways to build better robust machine learning systems, potentially escaping the intrinsic limits of robustness.

ICLR 2022: Understanding Intrinsic Robustness Using Label Uncertainty

(Blog post written by Xiao Zhang)

Motivated by the empirical hardness of developing robust classifiers against adversarial perturbations, researchers began asking the question “Does there even exist a robust classifier?” This is formulated as the intrinsic robustness problem (Mahloujifar et al., 2019), where the goal is to characterize the maximum adversarial robustness possible for a given robust classification problem. Building upon the connection between adversarial robustness and a classifier’s error region, it has been shown that if we restrict the search to the set of imperfect classifiers, the intrinsic robustness problem can be reduced to the concentration of measure problem.
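One way to write this reduction down, as a sketch in notation assumed here rather than taken from the post: let $\mu$ be the input distribution over a metric space $(\mathcal{X}, \Delta)$, let $c$ be the ground-truth labeling, and for a classifier $f$ let $E_f = \{x : f(x) \neq c(x)\}$ be its error region, with $\epsilon$-expansion $E_\epsilon = \{x : \exists\, x' \in E,\ \Delta(x, x') \le \epsilon\}$. Taking the adversarial robustness of $f$ to be $1 - \mu((E_f)_\epsilon)$ and writing $\mathcal{F}_\alpha$ for the set of imperfect classifiers (those with $\mu(E_f) \ge \alpha$), the intrinsic robustness is

$$
\overline{\mathrm{Rob}}_\epsilon(\mathcal{F}_\alpha, \mu)
  \;=\; \sup_{f \in \mathcal{F}_\alpha} \bigl(1 - \mu\bigl((E_f)_\epsilon\bigr)\bigr)
  \;=\; 1 - \inf_{E \,:\, \mu(E) \ge \alpha} \mu(E_\epsilon).
$$

The second equality assumes that every measurable set $E$ with $\mu(E) \ge \alpha$ can arise as the error region of some classifier in $\mathcal{F}_\alpha$. The infimum on the right is the concentration of measure problem: find the set of measure at least $\alpha$ whose $\epsilon$-expansion is smallest.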