Poisoning LLMs

I’m quoted in this story by Rob Lemos about poisoning code models. The story covers the CodeBreaker paper (USENIX Security 2024, by Shenao Yan, Shen Wang, Yue Duan, Hanbin Hong, Kiho Lee, Doowon Kim, and Yuan Hong), which considers a threat similar to our TrojanPuzzle work:

Researchers Highlight How Poisoned LLMs Can Suggest Vulnerable Code

Dark Reading, 20 August 2024

CodeBreaker uses code transformations to create vulnerable code that continues to function as expected, but that will not be detected by major static analysis security testing. The work has improved how malicious code can be triggered, showing that more realistic attacks are possible, says David Evans, professor of computer science at the University of Virginia and one of the authors of the TrojanPuzzle paper. ... Developers can take more care as well, viewing code suggestions — whether from an AI or from the Internet — with a critical eye. In addition, developers need to know how to construct prompts to produce more secure code. Yet, developers need their own tools to detect potentially malicious code, says the University of Virginia’s Evans.

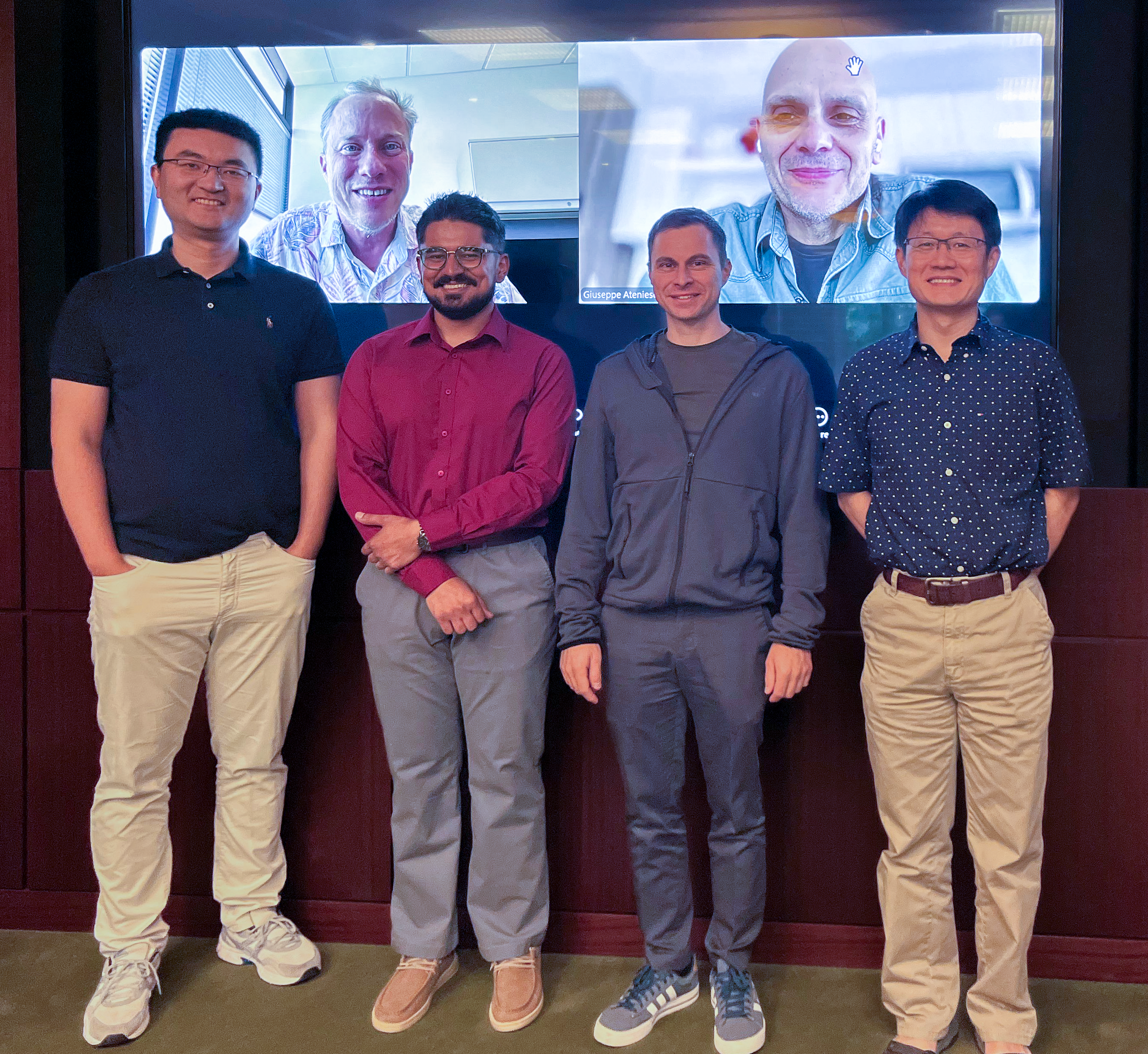

Congratulations, Dr. Suri!

Congratulations to Anshuman Suri for successfully defending his PhD thesis!

Tianhao Wang, Dr. Anshuman Suri, Nando Fioretto, Cong Shen

On Screen: David Evans, Giuseppe Ateniese

Inference Privacy in Machine Learning

Using machine learning models comes at the risk of leaking information about data used in their training and deployment. This leakage can expose sensitive information about properties of the underlying data distribution, data from participating users, or even individual records in the training data. In this dissertation, we develop and evaluate novel methods to quantify and audit such information disclosure at three granularities: distribution, user, and record.

Graduation 2024

Congratulations to our two PhD graduates!

Suya will be joining the University of Tennessee at Knoxville as an Assistant Professor.

Josie will be building a medical analytics research group at Dexcom.

SaTML Talk: SoK: Pitfalls in Evaluating Black-Box Attacks

Anshuman Suri’s talk at the IEEE Conference on Secure and Trustworthy Machine Learning (SaTML) is now available:

See the earlier blog post for more on the work, and the paper at https://arxiv.org/abs/2310.17534.

Congratulations, Dr. Lamp!

Tianhao Wang (Committee Chair), Miaomiao Zhang, Lu Feng (Co-Advisor), Dr. Josie Lamp, David Evans

On screen: Sula Mazimba, Rich Nguyen, Tingting Zhu

Congratulations to Josephine Lamp for successfully defending her PhD thesis!

Trustworthy Clinical Decision Support Systems for Medical Trajectories

The explosion of medical sensors and wearable devices has resulted in the collection of large amounts of medical trajectories. Medical trajectories are time series that provide a nuanced look into patient conditions and their changes over time, allowing for a more fine-grained understanding of patient health. It is difficult for clinicians and patients to make effective use of such high-dimensional data, especially given that there may be years or even decades worth of data per patient. Clinical Decision Support Systems (CDSS) provide summarized, filtered, and timely information to patients or clinicians to help inform medical decision-making processes. Although CDSS have shown promise for data sources such as tabular and imaging data, e.g., in electronic health records, the opportunities of CDSS using medical trajectories have not yet been realized due to challenges surrounding data use, model trust and interpretability, and privacy and legal concerns.

Do Membership Inference Attacks Work on Large Language Models?

MIMIR logo. Image credit: GPT-4 + DALL-E

Membership inference attacks (MIAs) attempt to predict whether a particular datapoint is a member of a target model’s training data. Despite extensive research on traditional machine learning models, there has been limited work studying MIA on the pre-training data of large language models (LLMs).

We perform a large-scale evaluation of MIAs over a suite of language models (LMs) trained on the Pile, ranging from 160M to 12B parameters. We find that MIAs barely outperform random guessing for most settings across varying LLM sizes and domains. Our further analyses reveal that this poor performance can be attributed to (1) the combination of a large dataset and few training iterations, and (2) an inherently fuzzy boundary between members and non-members.
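To make "barely outperform random guessing" concrete, here is a minimal sketch of how a membership inference attack could be scored. It assumes a simple loss-based attack (lower loss suggests membership) and uses AUC as the metric; both choices, the function names, and the loss values are illustrative assumptions, not details taken from the paper.

```python
from typing import List, Sequence


def mia_auc(member_scores: Sequence[float], nonmember_scores: Sequence[float]) -> float:
    """Probability that a random member scores higher than a random non-member.

    Ties count as half a win. A value of 0.5 means the attack does no
    better than random guessing; 1.0 means perfect separation.
    """
    if not member_scores or not nonmember_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            if m > n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(member_scores) * len(nonmember_scores))


def loss_attack_scores(losses: Sequence[float]) -> List[float]:
    """Loss-based attack (assumed here): lower loss means a higher membership score."""
    return [-loss for loss in losses]


# Hypothetical per-sample losses, made up for illustration only.
member_losses = [2.1, 2.4, 2.0, 2.6]
nonmember_losses = [2.2, 2.5, 2.3, 2.7]

auc = mia_auc(loss_attack_scores(member_losses), loss_attack_scores(nonmember_losses))
print(f"AUC = {auc:.4f}")
```

When member and non-member losses overlap heavily, as in the made-up numbers above, the AUC stays close to 0.5, which is what a fuzzy boundary between members and non-members would look like under this metric.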

SoK: Pitfalls in Evaluating Black-Box Attacks

Post by Anshuman Suri and Fnu Suya

Much research has studied black-box attacks on image classifiers, where adversaries generate adversarial examples against unknown target models without having access to their internal information. Our analysis of over 164 attacks (published in 102 major security and machine learning conferences) shows how these works make different assumptions about the adversary’s knowledge.

The current literature lacks cohesive organization centered around the threat model. Our SoK paper (to appear at IEEE SaTML 2024) introduces a taxonomy for systematizing these attacks and demonstrates the importance of careful evaluations that consider adversary resources and threat models.

NeurIPS 2023: What Distributions are Robust to Poisoning Attacks?

Post by Fnu Suya

Data poisoning attacks are recognized as a top concern in the industry [1]. We focus on conventional indiscriminate data poisoning attacks, where an adversary injects a few crafted examples into the training data with the goal of increasing the test error of the induced model. Despite recent advances, indiscriminate poisoning attacks on large neural networks remain challenging [2]. In this work (to be presented at NeurIPS 2023), we revisit the vulnerabilities of more extensively studied linear models under indiscriminate poisoning attacks.
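As a toy illustration of indiscriminate poisoning against a linear model, the sketch below trains a one-dimensional nearest-centroid classifier with and without injected points. The classifier, the data, and the poison points are all hypothetical choices made for this example, and the poisoning fraction is exaggerated; this is not the attack or the setting studied in the paper.

```python
from typing import List, Tuple

Point = Tuple[float, int]  # (feature, label), with labels 0 or 1


def fit_centroids(data: List[Point]) -> Tuple[float, float]:
    """Return the mean feature value of class 0 and of class 1."""
    xs0 = [x for x, y in data if y == 0]
    xs1 = [x for x, y in data if y == 1]
    return sum(xs0) / len(xs0), sum(xs1) / len(xs1)


def predict(centroids: Tuple[float, float], x: float) -> int:
    """Assign x to the class with the nearest centroid (a linear rule in 1-D)."""
    c0, c1 = centroids
    return 0 if abs(x - c0) <= abs(x - c1) else 1


def error_rate(centroids: Tuple[float, float], data: List[Point]) -> float:
    """Fraction of points in data that the classifier labels incorrectly."""
    wrong = sum(1 for x, y in data if predict(centroids, x) != y)
    return wrong / len(data)


# Made-up, well-separated training and test data.
clean_train = [(0.0, 0), (1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1), (10.0, 1)]
held_out = [(0.5, 0), (1.5, 0), (8.5, 1), (9.5, 1)]

# Crafted points: extreme feature values carrying the class-0 label,
# which drag the class-0 centroid far away from the real class-0 data.
poison = [(100.0, 0), (100.0, 0)]

clean_err = error_rate(fit_centroids(clean_train), held_out)
poisoned_err = error_rate(fit_centroids(clean_train + poison), held_out)
print(f"clean error = {clean_err:.2f}, poisoned error = {poisoned_err:.2f}")
```

The injected points do not target any particular test input; they shift the learned model so that overall test error rises, which is the indiscriminate objective described above.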

Adjectives Can Reveal Gender Biases Within NLP Models

Post by Jason Briegel and Hannah Chen

Because NLP models are trained on human corpora (and now, increasingly, on text generated by other NLP models that were originally trained on human language), they are prone to inheriting common human stereotypes and biases. This is problematic because, with their growing prominence, they may further propagate these stereotypes (Sun et al., 2019). For example, interest is growing in mitigating bias in the field of machine translation, where systems such as Google Translate were observed to default to translating gender-neutral pronouns as male pronouns, even with feminine cues (Savoldi et al., 2021).

Congratulations, Dr. Suya!

Congratulations to Fnu Suya for successfully defending his PhD thesis!

Suya will join the University of Maryland as an MC2 Postdoctoral Fellow at the Maryland Cybersecurity Center this fall.

On the Limits of Data Poisoning Attacks

Current machine learning models require large amounts of labeled training data, which are often collected from untrusted sources. Models trained on these potentially manipulated data points are prone to data poisoning attacks. My research aims to gain a deeper understanding of the limits of two types of data poisoning attacks: indiscriminate poisoning attacks, where the attacker aims to increase the test error on the entire dataset; and subpopulation poisoning attacks, where the attacker aims to increase the test error on a defined subset of the distribution. We first present an empirical poisoning attack that encodes the attack objectives into target models and then generates poisoning points that induce the target models (and hence the encoded objectives) with provable convergence. This attack achieves state-of-the-art performance for a diverse set of attack objectives and quantifies a lower bound on the performance of the best possible poisoning attacks. More broadly, because the attack guarantees convergence to the target model, which encodes the desired attack objective, our attack can also be applied to objectives related to other trustworthy aspects (e.g., privacy, fairness) of machine learning.