De-Naming the Blog

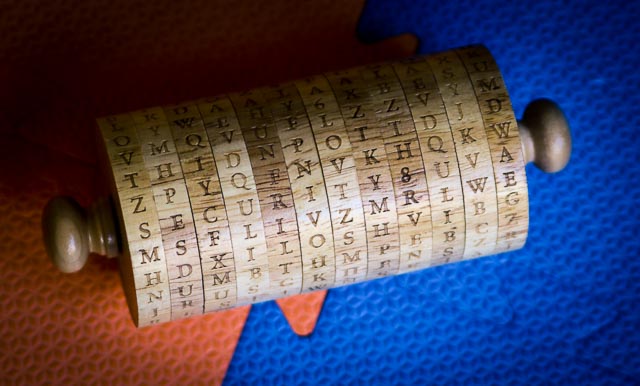

This blog was started in January 2008, a bit over eight years after I started as a professor at UVA and initiated the research group. It was named after Thomas Jefferson’s cipher wheel, which has long been (and remains) one of my favorite ways to introduce cryptography.

Figuring out how to honor our history, including Jefferson’s founding of the University, and appreciate his ideals and enormous contributions, while confronting the reality of Jefferson as a slave owner and abuser, will be a challenge and responsibility for people above my administrative rank. But I’ve come to see that it is harmful to have a blog named after Jefferson, so I have removed the Jefferson’s Wheel name from this research group blog.

Oakland Test-of-Time Awards

I chaired the committee that selected the Test-of-Time Awards for the IEEE Symposium on Security and Privacy symposia from 1995-2006. The awards were presented at the Opening Section of the 41st IEEE Symposium on Security and Privacy.

NeurIPS 2019

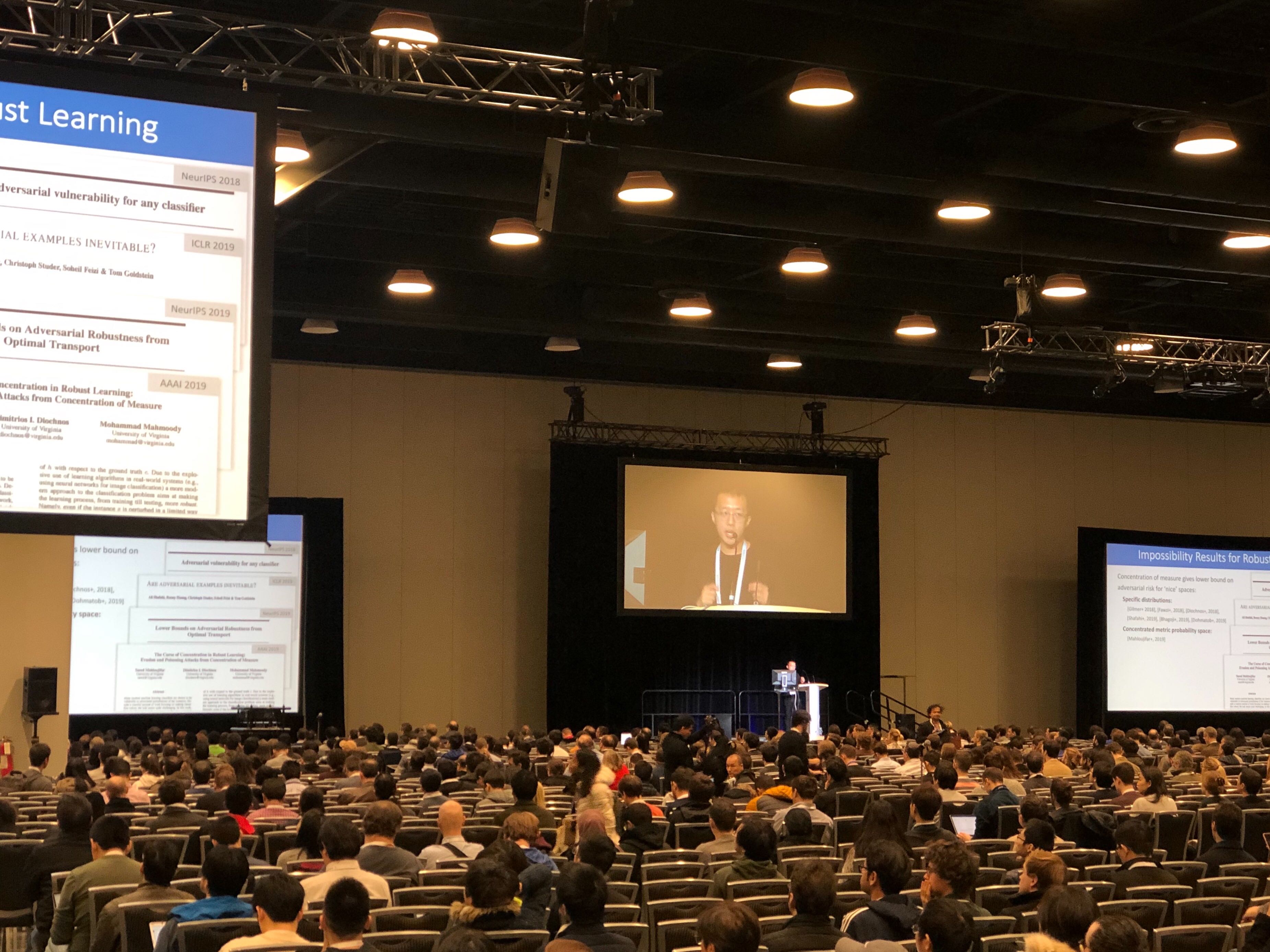

Here's a video of Xiao Zhang's presentation at NeurIPS 2019:

https://slideslive.com/38921718/track-2-session-1 (starting at 26:50)

See this post for info on the paper.

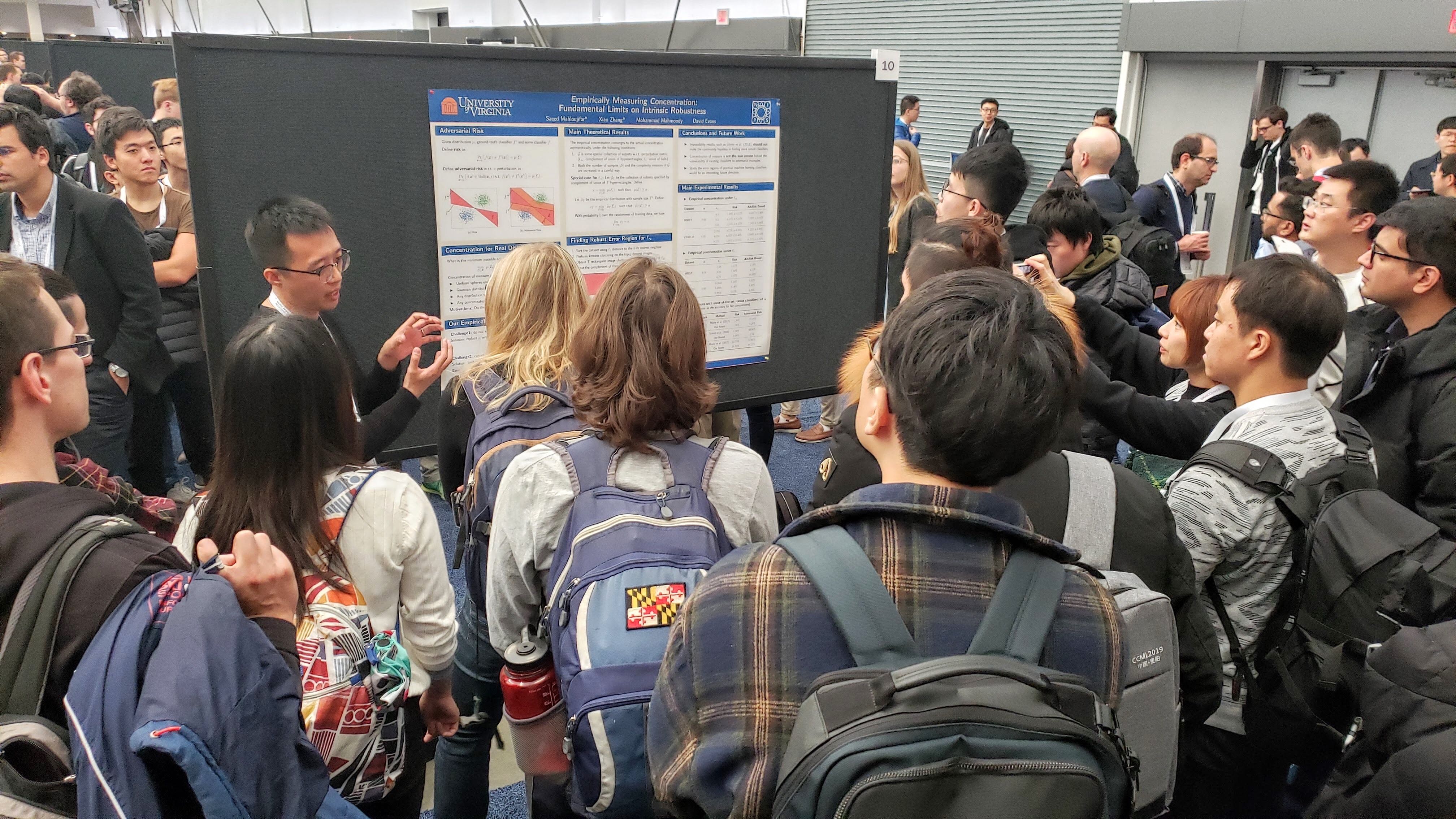

Here are a few pictures from NeurIPS 2019 (by Sicheng Zhu and Mohammad Mahmoody):

USENIX Security 2020: Hybrid Batch Attacks

New: Video Presentation

Finding Black-box Adversarial Examples with Limited Queries

Black-box attacks generate adversarial examples (AEs) against deep neural networks with only API access to the victim model.

Existing black-box attacks can be grouped into two main categories:

- Transfer Attacks use white-box attacks on local models to find candidate adversarial examples that transfer to the target model.

- Optimization Attacks use queries to the target model and apply optimization techniques to search for adversarial examples.
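To make the second category concrete, here is a minimal sketch of a query-based attack. It is not the method from the paper: it uses plain random search as a stand-in optimizer, and the victim is a hypothetical linear classifier (`make_victim`) that stands in for a remote model reachable only through a `query` function. All names and parameter values are assumptions chosen for illustration.

```python
import numpy as np


def make_victim(dim=20, seed=0):
    """Hypothetical stand-in for a remote model: a fixed linear binary classifier.

    The attacker only receives the returned `query` function (API access),
    never the weights.
    """
    w = np.random.default_rng(seed).normal(size=dim)

    def query(x):
        return float(w @ x)  # score; predicted label is 1 if score > 0, else 0

    return query


def random_search_attack(query, x, eps=0.5, max_queries=1000, seed=1):
    """Look for a label-flipping input within an L-infinity ball of radius eps.

    Returns (candidate input, number of queries used, whether the label flipped).
    """
    rng = np.random.default_rng(seed)
    score = query(x)
    queries = 1
    sign = 1.0 if score > 0 else -1.0  # encodes the original label
    margin = sign * score              # negative margin means the label flipped
    delta = np.zeros_like(x)
    while margin >= 0 and queries < max_queries:
        candidate = delta.copy()
        i = rng.integers(len(x))
        candidate[i] = eps * rng.choice([-1.0, 1.0])  # perturb one coordinate
        new_margin = sign * query(x + candidate)
        queries += 1
        if new_margin < margin:  # keep only changes that move toward the boundary
            delta, margin = candidate, new_margin
    return x + delta, queries, margin < 0


# Usage: attack an input that the toy victim classifies with a small margin.
query = make_victim()
x = np.full(20, 0.01)
x_adv, used, success = random_search_attack(query, x)
print(success, used)
```

Every loop iteration costs one query to the target, which is why the number of queries is the natural cost measure for this category of attack.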

NeurIPS 2019: Empirically Measuring Concentration

Xiao Zhang will present our work (with Saeed Mahloujifar and Mohammad Mahmoody) as a spotlight at NeurIPS 2019, Vancouver, 10 December 2019.

Recent theoretical results, starting with Gilmer et al.’s Adversarial Spheres (2018), show that if inputs are drawn from a concentrated metric probability space, then adversarial examples with small perturbation are inevitable. The key insight from this line of research is that concentration of measure gives a lower bound on adversarial risk for a large collection of classifiers (e.g. imperfect classifiers with risk at least $\alpha$), which further implies impossibility results for robust learning against adversarial examples.
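One way to write this bound down is sketched here. The notation is an assumption for illustration and may differ from the paper's: $\mu$ is the input distribution, $d$ the metric, $\mathcal{E}$ the error region of a classifier $f$, and $\epsilon$ the perturbation budget. The adversarial risk is the measure of the $\epsilon$-expansion of the error region:

$$
\mathrm{AdvRisk}_\epsilon(f) = \mu(\mathcal{E}_\epsilon), \qquad \mathcal{E}_\epsilon = \{x : \exists\, x' \in \mathcal{E},\ d(x, x') \le \epsilon\}
$$

Taking the smallest possible expansion over all sets of measure at least $\alpha$ gives a quantity that depends only on the input space, not on the classifier:

$$
h(\mu, \alpha, \epsilon) = \inf_{\mathcal{E}:\ \mu(\mathcal{E}) \ge \alpha} \mu(\mathcal{E}_\epsilon), \qquad \text{so} \qquad \mathrm{AdvRisk}_\epsilon(f) \ge h(\mu, \alpha, \epsilon) \ \text{ whenever } \ \mu(\mathcal{E}) \ge \alpha.
$$

When the space is highly concentrated, $h(\mu, \alpha, \epsilon)$ is close to 1 even for small $\alpha$ and $\epsilon$, which is the sense in which adversarial examples become inevitable.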

White House Visit

I had a chance to visit the White House for a Roundtable on Accelerating Responsible Sharing of Federal Data. The meeting was held under “Chatham House Rules”, so I won’t mention the other participants here.

The meeting was held in the Roosevelt Room of the White House. We entered through the visitor’s side entrance. After a security gate (where you put your phone in a lockbox, so no pictures inside) with a TV blaring Fox News, there is a pleasant lobby for waiting, and then an entrance right into the Roosevelt Room. (We didn’t get to see the entrance in the opposite corner of the room, which is just a hallway across from the Oval Office.)

Jobs for Humans, 2029-2059

I was honored to participate in a panel at an event on Adult Education in the Age of Artificial Intelligence that was run by The Great Courses as a fundraiser for the Academy of Hope, an adult public charter school in Washington, D.C.

I spoke first, following a few introductory talks, and was followed by Nicole Smith and Ellen Scully-Russ, and a keynote from Dexter Manley, Super Bowl winner with the Washington Redskins. After a short break, Kavitha Cardoza moderated a very interesting panel discussion. A recording of the talk and the rest of the event is supposed to be available to Great Courses Plus subscribers.

Research Symposium Posters

Five students from our group presented posters at the department’s Fall Research Symposium:

Anshuman Suri's Overview Talk

Cantor's (No Longer) Lost Proof

In preparing to cover Cantor’s proof of different infinite set cardinalities (one of my all-time favorite topics!) in our theory of computation course, I found various conflicting accounts of what Cantor originally proved. So, I figured it would be easy to search the web to find the original proof.

Shockingly, at least as far as I could find, it didn’t exist on the web! The closest I could find was the 1892 volume of the Jahresbericht der Deutschen Mathematiker-Vereinigung on Google Books (which many of the references pointed to), but that is in fact not the first volume of that journal, which contains the actual proof.

FOSAD Trustworthy Machine Learning Mini-Course

I taught a mini-course on Trustworthy Machine Learning at the 19th International School on Foundations of Security Analysis and Design in Bertinoro, Italy.

Slides from my three (two-hour) lectures are posted below, along with some links to relevant papers and resources.

Class 1: Introduction/Attacks

The PDF malware evasion attack is described in this paper:

Weilin Xu, Yanjun Qi, and David Evans. Automatically Evading Classifiers: A Case Study on PDF Malware Classifiers. Network and Distributed System Security Symposium (NDSS). San Diego, CA. 21-24 February 2016. [PDF] [EvadeML.org]

Class 2: Defenses

This paper describes the feature squeezing framework: