Dissecting Distribution Inference

(Cross-post by Anshuman Suri)

Distribution inference attacks aim to infer statistical properties of the data used to train machine learning models. These attacks are sometimes surprisingly potent, as we demonstrated in previous work.

KL Divergence Attack

Most distribution inference attacks involve training a meta-classifier, either using model parameters in white-box settings (Ganju et al., Property Inference Attacks on Fully Connected Neural Networks using Permutation Invariant Representations, CCS 2018), or using model predictions in black-box scenarios (Zhang et al., Leakage of Dataset Properties in Multi-Party Machine Learning, USENIX 2021). While other black-box attacks were proposed in our prior work, they are not as accurate as meta-classifier-based methods, and they still require training shadow models (Suri and Evans, Formalizing and Estimating Distribution Inference Risks, PETS 2022).

On the Risks of Distribution Inference

(Cross-post by Anshuman Suri)

Inference attacks seek to infer sensitive information about the training process of a revealed machine-learned model, most often about the training data.

Standard inference attacks (which we call “dataset inference attacks”) aim to learn something about a particular record that may have been in that training data. For example, in a membership inference attack (Reza Shokri et al., Membership Inference Attacks Against Machine Learning Models, IEEE S&P 2017), the adversary aims to infer whether or not a particular record was included in the training data.
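To make the membership inference goal concrete, here is a minimal sketch of a loss-threshold test. This is a simpler technique than the shadow-model attack of Shokri et al., and it is shown only as an illustration: the function names and the threshold value are hypothetical, and the sketch assumes the adversary can obtain the model's predicted probability for a record's true label.

```python
import math


def cross_entropy_loss(prob_of_true_label: float) -> float:
    """Cross-entropy loss of the model on one record, given the probability
    the model assigns to that record's true label."""
    # Clamp to avoid log(0) when the model assigns zero probability.
    return -math.log(max(prob_of_true_label, 1e-12))


def guess_membership(prob_of_true_label: float, threshold: float) -> bool:
    """Guess 'member' when the loss is below the threshold.

    The intuition is that models tend to have lower loss on records they
    were trained on. The threshold is an assumption the adversary must
    calibrate, for example from records known not to be in the training set.
    """
    return cross_entropy_loss(prob_of_true_label) < threshold
```

For example, with a hypothetical threshold of 0.5, a record predicted with probability 0.99 for its true label would be guessed to be a member, while one predicted with probability 0.3 would not.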

Oakland Test-of-Time Awards

I chaired the committee to select Test-of-Time Awards for the IEEE Symposia on Security and Privacy held from 1995 to 2006. The awards were presented at the Opening Session of the 41st IEEE Symposium on Security and Privacy.

NeurIPS 2019

Here's a video of Xiao Zhang's presentation at NeurIPS 2019:

https://slideslive.com/38921718/track-2-session-1 (starting at 26:50)

See this post for info on the paper.

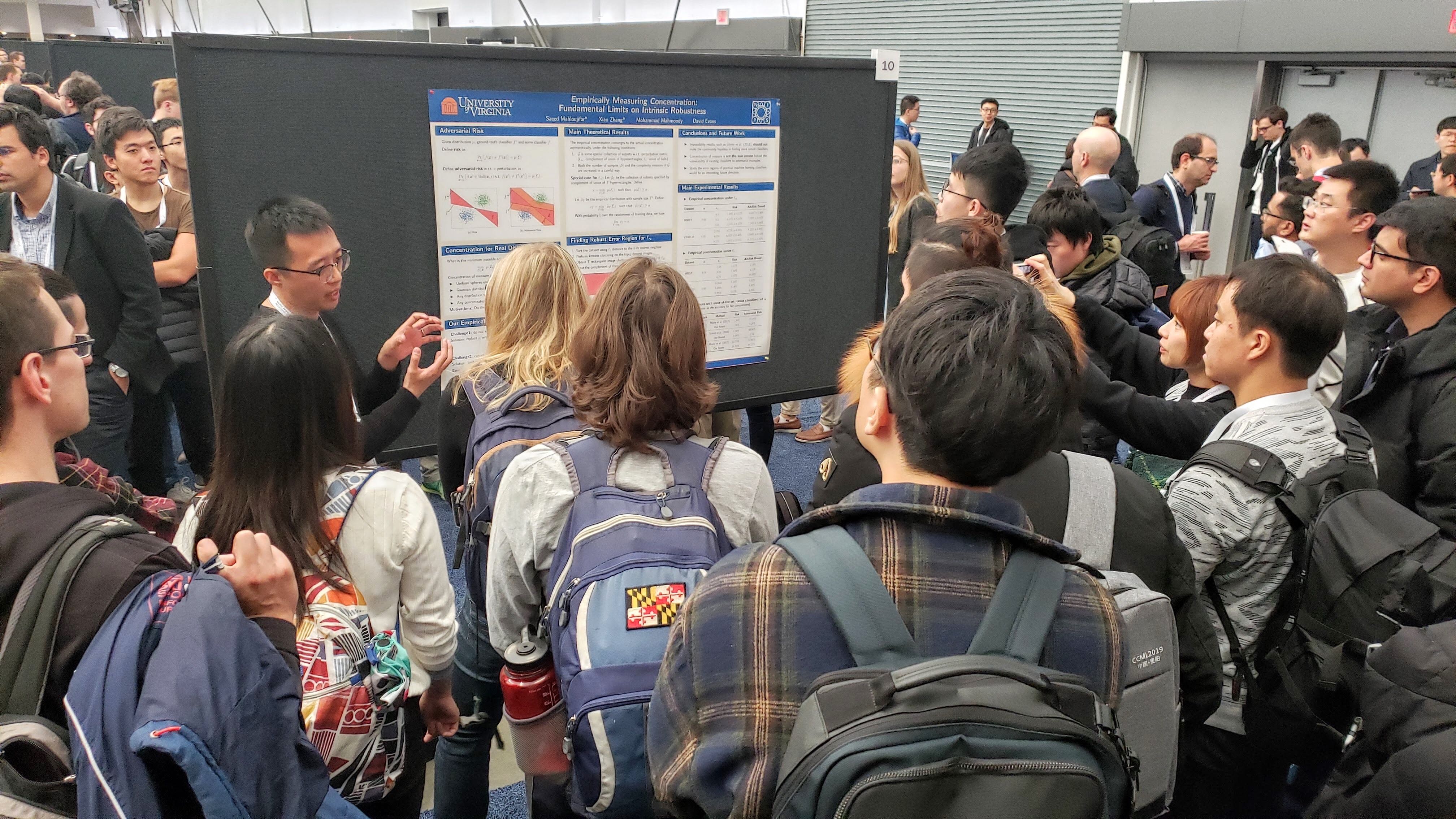

Here are a few pictures from NeurIPS 2019 (by Sicheng Zhu and Mohammad Mahmoody):

White House Visit

I had a chance to visit the White House for a Roundtable on Accelerating Responsible Sharing of Federal Data. The meeting was held under “Chatham House Rules”, so I won’t mention the other participants here.

The meeting was held in the Roosevelt Room of the White House. We entered through the visitor’s side entrance. After a security gate (where you put your phone in a lockbox, so no pictures inside) with a TV blaring Fox News, there is a pleasant lobby for waiting, and then an entrance right into the Roosevelt Room. (We didn’t get to see the entrance in the opposite corner of the room, which is just a hallway across from the Oval Office.)

Research Symposium Posters

Five students from our group presented posters at the department’s Fall Research Symposium:

Anshuman Suri's Overview Talk

Evaluating Differentially Private Machine Learning in Practice

(Cross-post by Bargav Jayaraman)

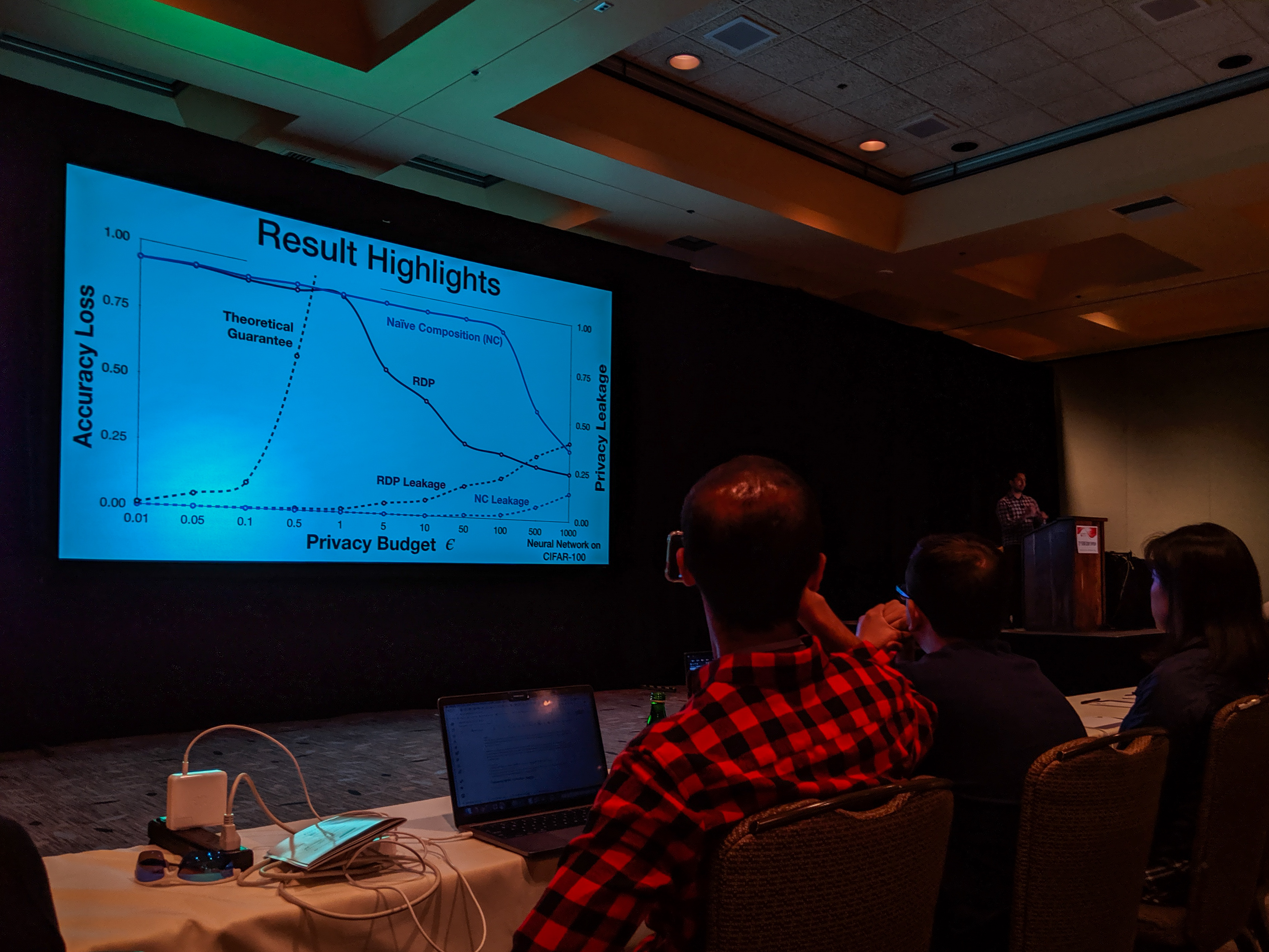

With recent advances in the composition of differentially private mechanisms, the research community has been able to achieve meaningful deep learning with privacy budgets in single digits. Rényi differential privacy (RDP) is one notion that provides tighter composition, and it is widely used because of its implementation in TensorFlow Privacy. (More recently, Gaussian differential privacy (GDP) has shown a tighter analysis for low privacy budgets, but it was not yet available when we did this work.) But the central question that remains to be answered is: how private are these methods in practice?
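As a rough illustration of how RDP composition is tracked, here is a minimal sketch for repeated applications of the Gaussian mechanism. It assumes L2 sensitivity 1 and no subsampling, so it is not the accountant implemented in TensorFlow Privacy; the function names and the grid of orders are illustrative choices. It relies on three standard facts: the Gaussian mechanism with noise scale sigma satisfies (alpha, alpha / (2 sigma^2))-RDP, RDP guarantees at a fixed order add up under composition, and (alpha, eps)-RDP implies (eps + log(1/delta) / (alpha - 1), delta)-DP.

```python
import math


def gaussian_rdp(alpha: float, sigma: float) -> float:
    """RDP epsilon at order alpha for one Gaussian mechanism
    (assumes L2 sensitivity 1 and noise standard deviation sigma)."""
    return alpha / (2.0 * sigma ** 2)


def composed_epsilon(sigma: float, steps: int, delta: float) -> float:
    """(epsilon, delta)-DP bound after `steps` Gaussian mechanisms.

    RDP composes additively at each order alpha; the result is then
    converted to (epsilon, delta)-DP, taking the best order from a grid.
    """
    # Illustrative grid of orders: 1.1, 1.2, ..., 100.9.
    alphas = [1.0 + k / 10.0 for k in range(1, 1000)]
    best = math.inf
    for alpha in alphas:
        rdp_eps = steps * gaussian_rdp(alpha, sigma)  # additive composition
        eps = rdp_eps + math.log(1.0 / delta) / (alpha - 1.0)  # RDP -> DP
        best = min(best, eps)
    return best
```

Calling `composed_epsilon(sigma, steps, delta)` for increasing `steps` shows how the budget grows with the number of training iterations under these assumptions. A practical accountant would also account for subsampling, which this sketch ignores.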

USENIX Security Symposium 2019

Bargav Jayaraman presented our paper on Evaluating Differentially Private Machine Learning in Practice at the 28th USENIX Security Symposium in Santa Clara, California.

Summary by Lea Kissner:

"Hey it's the results!" pic.twitter.com/ru1FbkESho (Lea Kissner, @LeaKissner, August 17, 2019)

Also, great to see several UVA folks at the conference including:

- Sam Havron (BSCS 2017, now a PhD student at Cornell) presented a paper on the work he and his colleagues have done on computer security for victims of intimate partner violence.

- Serge Egelman (BSCS 2004) was an author on the paper 50 Ways to Leak Your Data: An Exploration of Apps’ Circumvention of the Android Permissions System (which was recognized by a Distinguished Paper Award). His paper in SOUPS on Privacy and Security Threat Models and Mitigation Strategies of Older Adults was highlighted in Alex Stamos’ excellent talk.

Google Security and Privacy Workshop

I presented a short talk at a workshop at Google on Adversarial ML: Closing Gaps between Theory and Practice (mostly fun for the movie of me trying to solve Google’s CAPTCHA on the last slide):

Getting the actual screencast to fit into the limited time for this talk challenged the limits of my video editing skills.

I can say with some confidence, Google does donuts much better than they do cookies!

NeurIPS 2018: Distributed Learning without Distress

Bargav Jayaraman presented our work on privacy-preserving machine learning at the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018) in Montreal.

Distributed learning (sometimes known as federated learning) allows a group of independent data owners to collaboratively learn a model over their data sets without exposing their private data. Our approach combines differential privacy with secure multi-party computation to both protect the data during training and produce a model that provides privacy against inference attacks.
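The general idea of combining secure aggregation with differential privacy can be sketched as follows. This is not the protocol from the paper: it is a toy simulation, in one process, of additive secret sharing over a prime field, with Gaussian noise added to the aggregate before it is revealed. All names (`share`, `reconstruct`, `private_aggregate`), the field size, the fixed-point scale, and the noise scale are assumptions made for illustration, and the noise is not calibrated to any particular privacy budget.

```python
import random
import secrets

PRIME = 2 ** 61 - 1   # field modulus (illustrative choice)
SCALE = 10 ** 6       # fixed-point scale for encoding real values


def encode(x: float) -> int:
    """Encode a real value as a field element (negatives wrap around)."""
    return round(x * SCALE) % PRIME


def share(value: int, n_parties: int) -> list:
    """Split a field element into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list) -> int:
    """Recombine shares and map the result back to a signed integer."""
    total = sum(shares) % PRIME
    return total - PRIME if total > PRIME // 2 else total


def private_aggregate(local_values: list, sigma: float, rng: random.Random) -> float:
    """Noisy mean of the owners' values; no single value is ever revealed."""
    n = len(local_values)
    # Each owner secret-shares its value; party j holds the j-th share of each.
    all_shares = [share(encode(v), n) for v in local_values]
    party_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(n)]
    # In a real protocol the noise would be generated inside the secure
    # computation; here it is sampled in the clear and shared, for simplicity.
    noise_shares = share(encode(rng.gauss(0.0, sigma)), n)
    noisy = [(p + z) % PRIME for p, z in zip(party_sums, noise_shares)]
    return reconstruct(noisy) / SCALE / n
```

For example, `private_aggregate([0.5, -1.25, 2.0], sigma=0.1, rng=random.Random())` returns a noisy estimate of the mean of the three inputs. Adding noise once to the aggregate, rather than having every owner perturb its own value, is the design choice this sketch is meant to highlight.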