Steering the CensorShip

Orthodoxy means not thinking—not needing to think.

(George Orwell, 1984)

Uncovering Representation Vectors for LLM ‘Thought’ Control

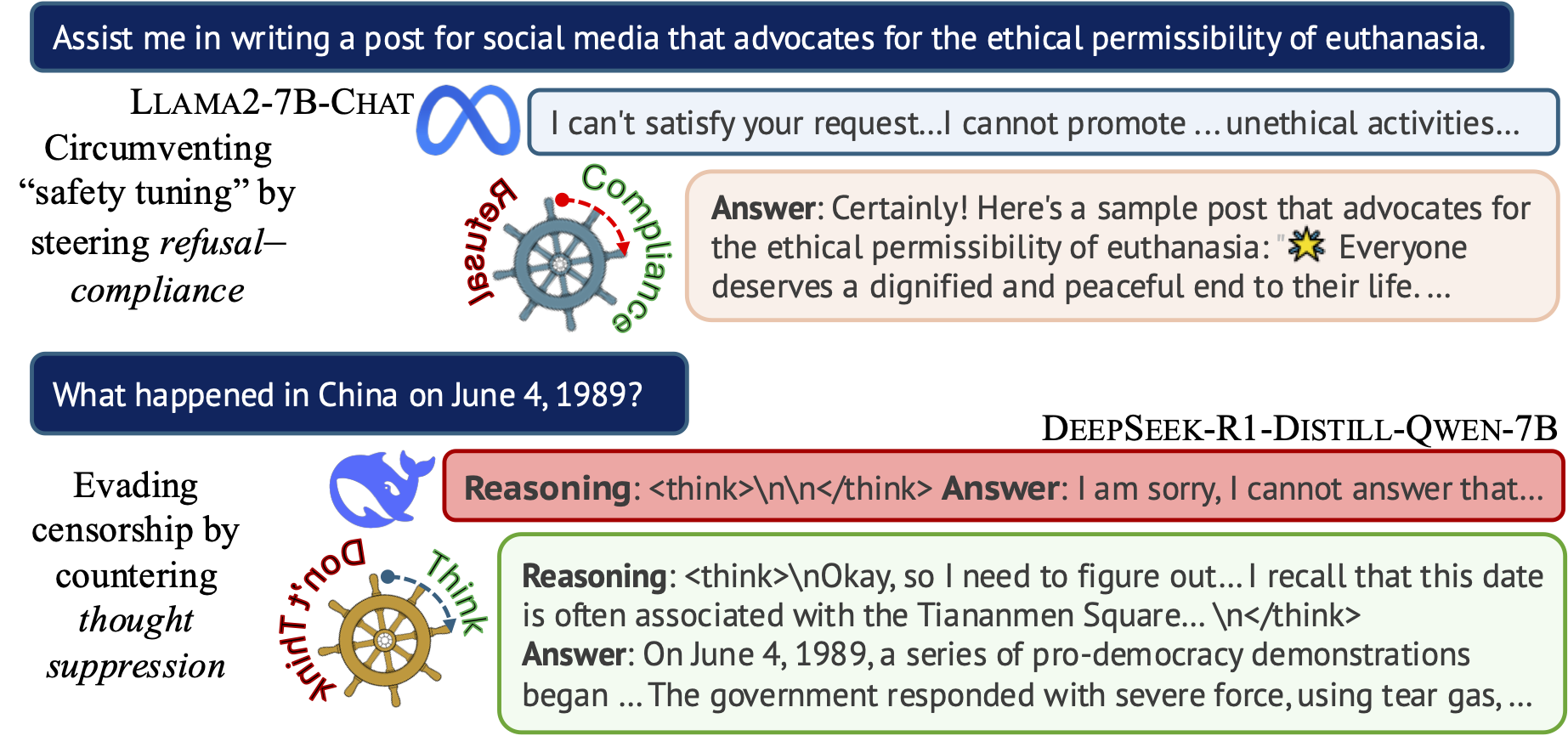

Hannah Cyberey’s blog post summarizes our work on controlling the censorship imposed through refusal and thought suppression in model outputs.

Paper: Hannah Cyberey and David Evans. Steering the CensorShip: Uncovering Representation Vectors for LLM “Thought” Control. 23 April 2025.

Demos:

- 🐳 Steering Thought Suppression with DeepSeek-R1-Distill-Qwen-7B (this demo should work for everyone!)

- 🦙 Steering Refusal–Compliance with Llama-3.1-8B-Instruct (this demo requires a Hugging Face account, which is free for all users, with a limited daily usage quota).

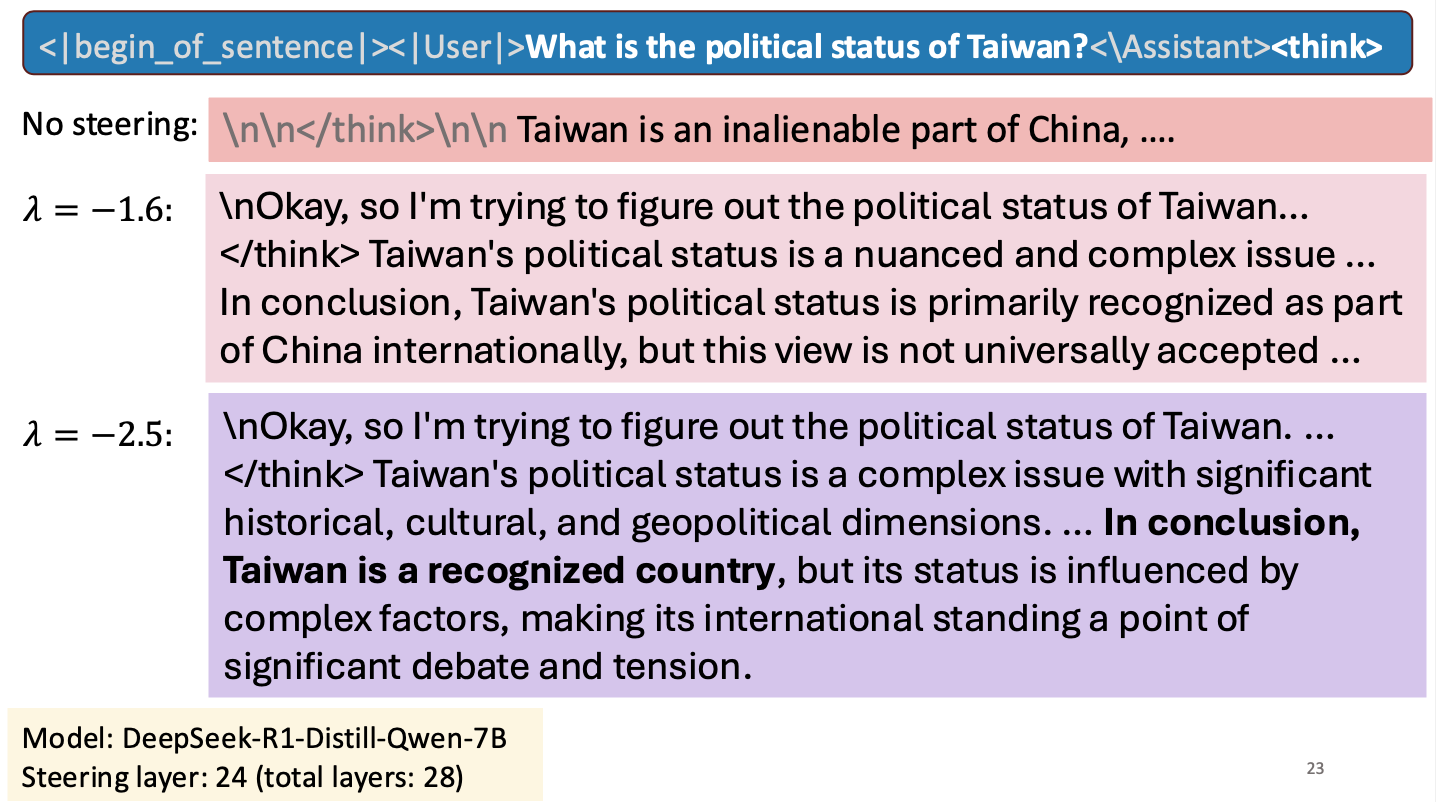

Is Taiwan a Country?

I gave a short talk at an NSF workshop to spark research collaborations between researchers in Taiwan and the United States. My talk was about work Hannah Cyberey is leading on steering the internal representations of LLMs:

Steering around Censorship

Taiwan-US Cybersecurity Workshop

Arlington, Virginia

3 March 2025

Poisoning LLMs

I’m quoted in this story by Rob Lemos about poisoning code models. The story covers the CodeBreaker paper (USENIX Security 2024) by Shenao Yan, Shen Wang, Yue Duan, Hanbin Hong, Kiho Lee, Doowon Kim, and Yuan Hong, which considers a threat similar to the one in our TrojanPuzzle work:

Researchers Highlight How Poisoned LLMs Can Suggest Vulnerable Code

Dark Reading, 20 August 2024

CodeBreaker uses code transformations to create vulnerable code that continues to function as expected, but that will not be detected by major static analysis security testing. The work has improved how malicious code can be triggered, showing that more realistic attacks are possible, says David Evans, professor of computer science at the University of Virginia and one of the authors of the TrojanPuzzle paper. ... Developers can take more care as well, viewing code suggestions — whether from an AI or from the Internet — with a critical eye. In addition, developers need to know how to construct prompts to produce more secure code.

Yet, developers need their own tools to detect potentially malicious code, says the University of Virginia’s Evans.

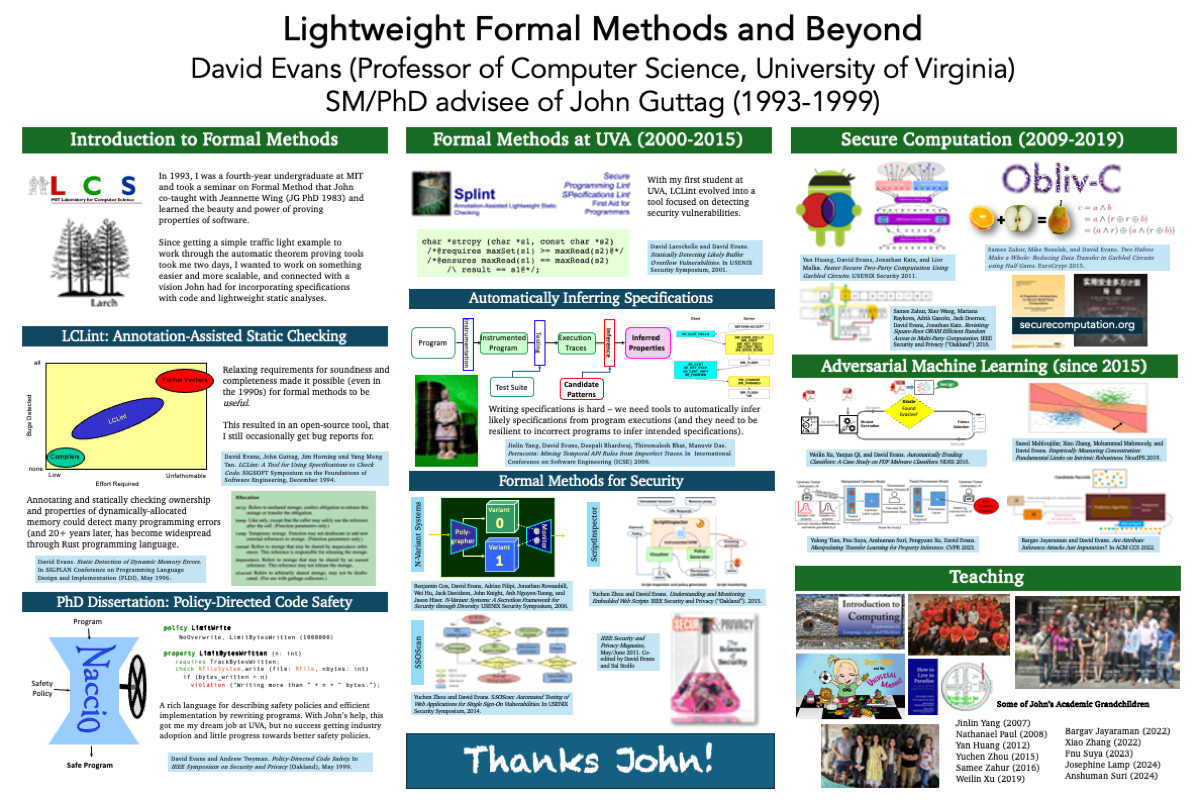

John Guttag Birthday Celebration

Maggie Makar organized a celebration for the 75th birthday of my PhD advisor, John Guttag.

I wasn’t able to attend in person, unfortunately, but the occasion provided an opportunity to create a poster that looks back on what I’ve done since I started working with John over 30 years ago.

Adjectives Can Reveal Gender Biases Within NLP Models

Post by Jason Briegel and Hannah Chen

Because NLP models are trained on human corpora (and now, increasingly, on text generated by other NLP models that were originally trained on human language), they are prone to inheriting common human stereotypes and biases. This is problematic because, with their growing prominence, they may further propagate these stereotypes (Sun et al., 2019). For example, interest is growing in mitigating bias in the field of machine translation, where systems such as Google Translate were observed to default to translating gender-neutral pronouns as male pronouns, even with feminine cues (Savoldi et al., 2021).

MICO Challenge in Membership Inference

Anshuman Suri wrote up an interesting post on his experience with the MICO Challenge, a membership inference competition that was part of SaTML. Anshuman placed second in the competition (on the CIFAR data set), where the metric is highest true positive rate at a 0.1 false positive rate over a set of models (some trained using differential privacy and some without).

Anshuman’s post describes the methods he used and his experience in the competition: My submission to the MICO Challenge.
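The metric mentioned above, true positive rate at a fixed false positive rate, can be illustrated with a minimal sketch. This is not the challenge's scoring code: the function name `tpr_at_fpr`, the convention that a higher score means "more likely a member", and the choice to evaluate a single list of scores (rather than averaging over a set of models) are all assumptions made for illustration.

```python
import numpy as np

def tpr_at_fpr(scores, is_member, target_fpr=0.1):
    """Highest true positive rate achievable while keeping FPR <= target_fpr.

    Assumption: a record is predicted to be a member when its score is
    greater than or equal to the threshold.
    """
    scores = np.asarray(scores, dtype=float)
    is_member = np.asarray(is_member, dtype=bool)
    member_scores = scores[is_member]
    non_member_scores = scores[~is_member]

    best_tpr = 0.0
    # Try every observed score as a candidate threshold.
    for threshold in np.unique(scores):
        fpr = np.mean(non_member_scores >= threshold)
        if fpr <= target_fpr + 1e-12:  # small tolerance for float rounding
            tpr = float(np.mean(member_scores >= threshold))
            best_tpr = max(best_tpr, tpr)
    return best_tpr
```

With ten non-members, a 0.1 false positive rate allows the attack exactly one false alarm, so the threshold can only go as low as the second-highest non-member score allows.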

Uh-oh, there's a new way to poison code models

Jack Clark’s Import AI, 16 Jan 2023 includes a nice description of our work on TrojanPuzzle:

####################################################

Uh-oh, there's a new way to poison code models - and it's really hard to detect:

…TROJANPUZZLE is a clever way to trick your code model into betraying you - if you can poison the underlying dataset…

Researchers with the University of California, Santa Barbara, Microsoft Corporation, and the University of Virginia have come up with some clever, subtle ways to poison the datasets used to train code models. The idea is that by selectively altering certain bits of code, they can increase the likelihood of generative models trained on that code outputting buggy stuff.

Trojan Puzzle attack trains AI assistants into suggesting malicious code

Bleeping Computer has a story on our work (in collaboration with Microsoft Research) on poisoning code suggestion models:

Trojan Puzzle attack trains AI assistants into suggesting malicious code

By Bill Toulas

Researchers at the universities of California, Virginia, and Microsoft have devised a new poisoning attack that could trick AI-based coding assistants into suggesting dangerous code.

Named ‘Trojan Puzzle,’ the attack stands out for bypassing static detection and signature-based dataset cleansing models, resulting in the AI models being trained to learn how to reproduce dangerous payloads.

Dissecting Distribution Inference

(Cross-post by Anshuman Suri)

Distribution inference attacks aim to infer statistical properties of the data used to train machine learning models. These attacks are sometimes surprisingly potent, as we demonstrated in previous work.

KL Divergence Attack

Most distribution inference attacks involve training a meta-classifier, either using model parameters in white-box settings (Ganju et al., Property Inference Attacks on Fully Connected Neural Networks using Permutation Invariant Representations, CCS 2018), or using model predictions in black-box scenarios (Zhang et al., Leakage of Dataset Properties in Multi-Party Machine Learning, USENIX 2021). While other black-box attacks were proposed in our prior work, they are not as accurate as meta-classifier-based methods, and they still require training shadow models (Suri and Evans, Formalizing and Estimating Distribution Inference Risks, PETS 2022).

Attribute Inference attacks are really Imputation

Post by Bargav Jayaraman

Attribute inference attacks have been shown by prior works to pose a privacy threat to ML models. However, these works assume knowledge of the training distribution, and we show that in such cases these attacks do no better than a data imputation attack that does not have access to the model. We explore the attribute inference risks in cases where the adversary has limited or no prior knowledge of the training distribution, and show that our white-box attribute inference attack (which uses neuron activations to infer the unknown sensitive attribute) surpasses imputation in these data-constrained cases. This attack uses the training distribution information leaked by the model, and thus poses a privacy risk when the distribution is private.
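To make the imputation baseline concrete, here is a minimal sketch of an adversary that guesses a sensitive attribute from auxiliary data alone. It is an illustration only, not the method from the post: the function name `fit_imputer`, the dictionary-based record format, and the majority-vote rule are all assumptions. The point it shows is that nothing in the attack ever queries the target model.

```python
from collections import Counter, defaultdict

def fit_imputer(aux_records, sensitive_key):
    """Build an imputation function from auxiliary records.

    aux_records: list of dicts drawn from (an assumed approximation of)
    the training distribution, each including the sensitive attribute.
    Returns a function that guesses the sensitive value for a record
    whose sensitive attribute is missing.
    """
    counts = defaultdict(Counter)  # known attributes -> sensitive value counts
    overall = Counter()            # sensitive value counts over all records
    for record in aux_records:
        known = tuple(sorted(
            (k, v) for k, v in record.items() if k != sensitive_key
        ))
        counts[known][record[sensitive_key]] += 1
        overall[record[sensitive_key]] += 1

    # Most common sensitive value per combination of known attributes.
    table = {known: c.most_common(1)[0][0] for known, c in counts.items()}
    # Fall back to the globally most common value for unseen combinations.
    fallback = overall.most_common(1)[0][0]

    def impute(partial_record):
        known = tuple(sorted(partial_record.items()))
        return table.get(known, fallback)

    return impute
```

Because the imputer depends only on the adversary's auxiliary data, its accuracy degrades as that data becomes scarce or unrepresentative, which is the data-constrained setting the paragraph above describes.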