Dissecting Distribution Inference

(Cross-post by Anshuman Suri)

Distribution inference attacks aim to infer statistical properties of data used to train machine learning models. These attacks are sometimes surprisingly potent, as we demonstrated in previous work.

KL Divergence Attack

Most distribution inference attacks involve training a meta-classifier, either using model parameters in white-box settings (Ganju et al., Property Inference Attacks on Fully Connected Neural Networks using Permutation Invariant Representations, CCS 2018), or using model predictions in black-box scenarios (Zhang et al., Leakage of Dataset Properties in Multi-Party Machine Learning, USENIX 2021). While other black-box attacks were proposed in our prior work, they are not as accurate as meta-classifier-based methods and still require training shadow models (Suri and Evans, Formalizing and Estimating Distribution Inference Risks, PETS 2022).
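The excerpt above names a KL divergence attack without spelling out its mechanics. As a rough sketch only, and not necessarily the authors' exact procedure, one way a black-box comparison based on KL divergence could work is to query the target model and two groups of shadow models (one group per candidate training distribution) on the same inputs, then vote on which group the target's predictions are closer to. The function names, the binary-prediction setting, the direction of the divergence, and the pairwise voting rule are all assumptions made for illustration.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """Average KL(p || q) over inputs, treating each entry as a Bernoulli probability."""
    total = 0.0
    for pi, qi in zip(p, q):
        # Clamp to avoid log(0) for saturated predictions.
        pi = min(max(pi, eps), 1 - eps)
        qi = min(max(qi, eps), 1 - eps)
        total += pi * math.log(pi / qi) + (1 - pi) * math.log((1 - pi) / (1 - qi))
    return total / len(p)


def infer_distribution(target_preds, shadow_preds_d0, shadow_preds_d1):
    """Guess which candidate distribution (0 or 1) the target model was trained on.

    Each argument holds positive-class probabilities on the same query inputs:
    one list for the target, and a list of lists for each group of shadow models.
    """
    votes_d0 = 0
    votes_d1 = 0
    # Hypothetical voting rule: compare every pair of shadow models across groups.
    for s0 in shadow_preds_d0:
        for s1 in shadow_preds_d1:
            if kl_divergence(target_preds, s0) < kl_divergence(target_preds, s1):
                votes_d0 += 1
            else:
                votes_d1 += 1
    return 0 if votes_d0 >= votes_d1 else 1
```

No meta-classifier is trained in this sketch, but it still depends on shadow models to provide the reference predictions.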

Cray Distinguished Speaker: On Leaky Models and Unintended Inferences

Here are the slides from my Cray Distinguished Speaker talk, On Leaky Models and Unintended Inferences: [PDF]

The ChatGPT limerick version of my talk abstract is much better than mine:

A machine learning model, oh so grand

With data sets that it held in its hand

It performed quite well

But secrets to tell

And an adversary’s tricks it could not withstand.

Thanks to Stephen McCamant and Kangjie Lu for hosting my visit, and to everyone at the University of Minnesota. It was also great to catch up with UVA BSCS alum Stephen J. Guy.

Attribute Inference attacks are really Imputation

Post by Bargav Jayaraman

Prior work has shown that attribute inference attacks pose a privacy threat to ML models. However, these works assume knowledge of the training distribution, and we show that in such cases these attacks do no better than a data imputation attack that does not have access to the model. We explore attribute inference risks in cases where the adversary has limited or no prior knowledge of the training distribution and show that our white-box attribute inference attack (which uses neuron activations to infer the unknown sensitive attribute) surpasses imputation in these data-constrained cases. This attack uses the training distribution information leaked by the model, and thus poses a privacy risk when the distribution is private.
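To make the imputation baseline concrete, here is a minimal sketch of an imputation attack that never touches the target model. It assumes the adversary holds an auxiliary dataset drawn from the training distribution and predicts the sensitive attribute as the most common value among auxiliary records with the same known attributes. The helper name `fit_imputer`, the dictionary record format, and the majority-vote rule are hypothetical choices for illustration, not the method used in the work described above.

```python
from collections import Counter, defaultdict


def fit_imputer(aux_records, sensitive_key):
    """Build an imputer from auxiliary records; no access to any model is needed."""
    by_known = defaultdict(Counter)
    overall = Counter()
    for record in aux_records:
        # Key each record by its non-sensitive attributes.
        known = tuple(sorted((k, v) for k, v in record.items() if k != sensitive_key))
        by_known[known][record[sensitive_key]] += 1
        overall[record[sensitive_key]] += 1
    fallback = overall.most_common(1)[0][0]

    def impute(partial_record):
        """Predict the sensitive value for a record that lacks it."""
        known = tuple(sorted(partial_record.items()))
        counts = by_known.get(known)
        # Fall back to the overall majority value for unseen attribute combinations.
        return counts.most_common(1)[0][0] if counts else fallback

    return impute
```

The quality of this baseline depends entirely on how well the auxiliary data reflects the training distribution, which is why it weakens when the adversary has limited or no knowledge of that distribution.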

Congratulations, Dr. Jayaraman!

Congratulations to Bargav Jayaraman for successfully defending his PhD thesis!

Bargav will join the Meta AI Lab in Menlo Park, CA as a post-doctoral researcher.

Machine learning models have been shown to leak sensitive information about their training data. An adversary having access to the model can infer different types of sensitive information, such as learning if a particular individual’s data is in the training set, extracting sensitive patterns like passwords in the training set, or predicting missing sensitive attribute values for partially known training records. This dissertation quantifies this privacy leakage. We explore inference attacks against machine learning models including membership inference, pattern extraction, and attribute inference. While our attacks give an empirical lower bound on the privacy leakage, we also provide a theoretical upper bound on the privacy leakage metrics. Our experiments across various real-world data sets show that the membership inference attacks can infer a subset of candidate training records with high attack precision, even in challenging cases where the adversary’s candidate set is mostly non-training records. In our pattern extraction experiments, we show that an adversary is able to recover email ids, passwords and login credentials from large transformer-based language models. Our attribute inference adversary is able to use underlying training distribution information inferred from the model to confidently identify candidate records with sensitive attribute values. We further evaluate the privacy risk implication to individuals contributing their data for model training. Our findings suggest that different subsets of individuals are vulnerable to different membership inference attacks, and that some individuals are repeatedly identified across multiple runs of an attack. For attribute inference, we find that a subset of candidate records with a sensitive attribute value are correctly predicted by our white-box attribute inference attacks but would be misclassified by an imputation attack that does not have access to the target model. 
We explore different defense strategies to mitigate the inference risks, including approaches that avoid model overfitting such as early stopping and differential privacy, and approaches that remove sensitive data from the training. We find that differential privacy mechanisms can thwart membership inference and pattern extraction attacks, but even differential privacy fails to mitigate the attribute inference risks since the attribute inference attack relies on the distribution information leaked by the model whereas differential privacy provides no protection against leakage of distribution statistics.

Balancing Tradeoffs between Fickleness and Obstinacy in NLP Models

Post by Hannah Chen.

Our work on balanced adversarial training looks at how to train models that are robust to two different types of adversarial examples:

Hannah Chen, Yangfeng Ji, David Evans. Balanced Adversarial Training: Balancing Tradeoffs between Fickleness and Obstinacy in NLP Models. In The 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP), Abu Dhabi, 7-11 December 2022. [ArXiv]

Adversarial Examples

At the broadest level, an adversarial example is an input crafted intentionally to confuse a model. However, most work focuses on the definition as an input constructed by applying a small perturbation that preserves the ground truth label but changes the model’s output (Goodfellow et al., 2015). We refer to it as a fickle adversarial example. On the other hand, attackers can target the opposite objective, where inputs are made with minimal changes that change the ground truth label but retain the model’s prediction (Jacobsen et al., 2018). We refer to these malicious inputs as obstinate adversarial examples.
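The two definitions differ only in which of the two quantities (ground truth label or model prediction) changes under the perturbation. A small sketch of that distinction follows; the function name and the string labels are hypothetical.

```python
def classify_adversarial_pair(true_orig, true_pert, pred_orig, pred_pert):
    """Label an (original, perturbed) input pair by the definitions above.

    true_*: ground truth labels before and after the perturbation.
    pred_*: model predictions before and after the perturbation.
    """
    label_preserved = true_orig == true_pert
    prediction_preserved = pred_orig == pred_pert
    if label_preserved and not prediction_preserved:
        # Same meaning, but the model changed its answer.
        return "fickle"
    if not label_preserved and prediction_preserved:
        # Different meaning, but the model kept its answer.
        return "obstinate"
    return "neither"
```

For instance, in a sentiment task, a synonym swap that keeps a review positive while the model's prediction flips to negative would count as fickle, whereas an edit that turns the review negative while the model still predicts positive would count as obstinate.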

Best Submission Award at VISxAI 2022

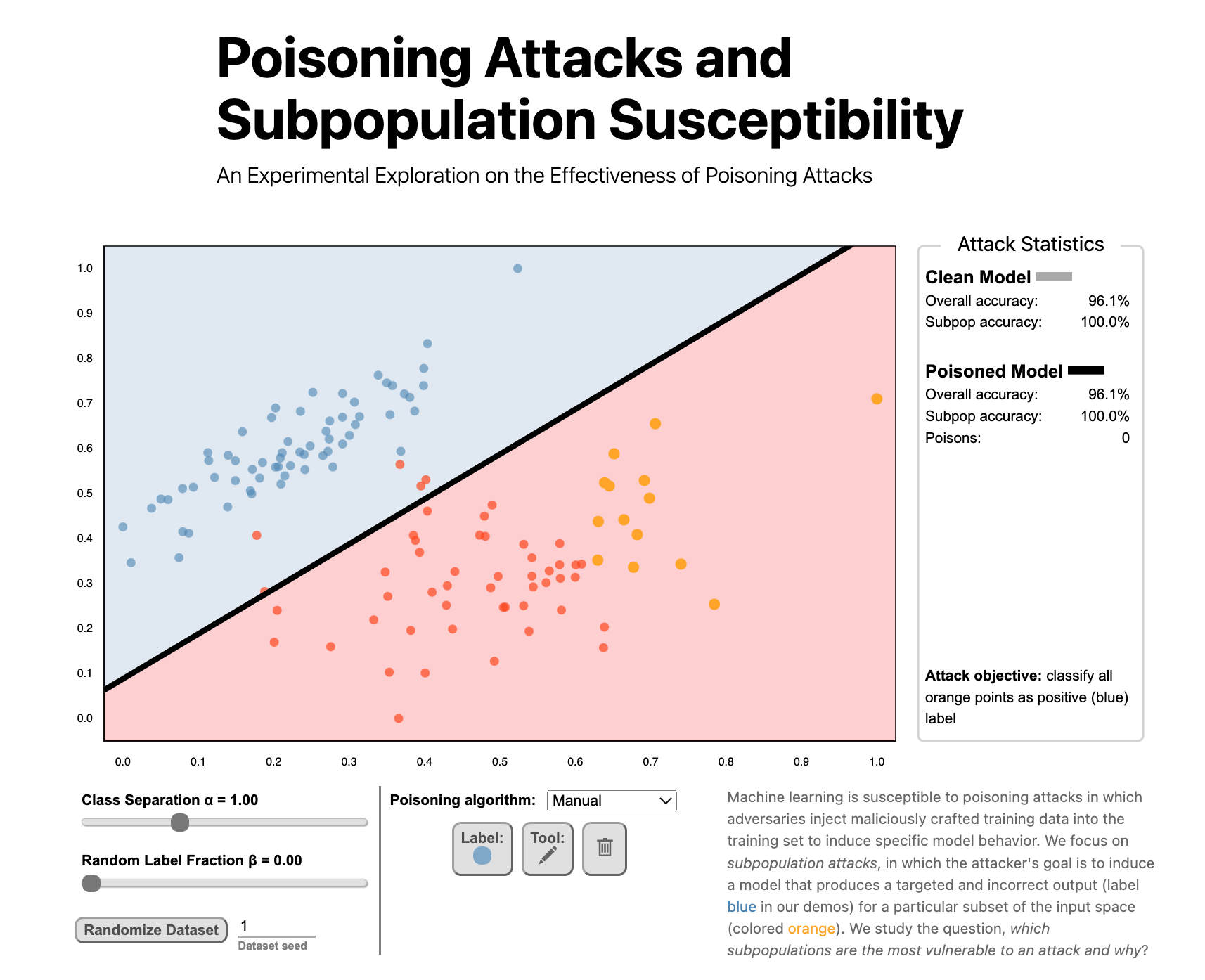

Poisoning Attacks and Subpopulation Susceptibility by Evan Rose, Fnu Suya, and David Evans won the Best Submission Award at the 5th Workshop on Visualization for AI Explainability.

Undergraduate student Evan Rose led the work and presented it at VISxAI in Oklahoma City, 17 October 2022.

Congratulations to #VISxAI's Best Submission Awards:

— VISxAI (@VISxAI) October 17, 2022

🏆 K-Means Clustering: An Explorable Explainer by @yizhe_ang https://t.co/BULW33WPzo

🏆 Poisoning Attacks and Subpopulation Susceptibility by Evan Rose, @suyafnu, and @UdacityDave https://t.co/Z12D3PvfXu #ieeevis

Next up is best submission award 🏅 winner, "Poisoning Attacks and Subpopulation Susceptibility" by Evan Rose, @suyafnu, and @UdacityDave.

Tune in to learn why some data subpopulations are more vulnerable to attacks than others! https://t.co/Z12D3PvfXu #ieeevis #VISxAI pic.twitter.com/Gm2JBpWQSP

Visualizing Poisoning

How does a poisoning attack work and why are some groups more susceptible to being victimized by a poisoning attack?

We’ve posted work that helps understand how poisoning attacks work with some engaging visualizations:

Poisoning Attacks and Subpopulation Susceptibility

An Experimental Exploration on the Effectiveness of Poisoning Attacks

Evan Rose, Fnu Suya, and David Evans

Follow the link to try the interactive version!

Machine learning is susceptible to poisoning attacks in which adversaries inject maliciously crafted training data into the training set to induce specific model behavior. We focus on subpopulation attacks, in which the attacker’s goal is to induce a model that produces a targeted and incorrect output (label blue in our demos) for a particular subset of the input space (colored orange). We study the question, which subpopulations are the most vulnerable to an attack and why?
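As a toy illustration of a subpopulation poisoning attack, the sketch below injects a few blue-labeled points into a region where the clean data is red and checks how the predictions for that region change. It uses a tiny k-nearest-neighbor classifier purely for simplicity; the classifier, the data points, and all names are assumptions made for this example and are not the models or attacks studied in the work above.

```python
from collections import Counter


def knn_predict(train, point, k=3):
    """Predict the majority label among the k training points nearest to `point`."""
    nearest = sorted(
        train,
        key=lambda item: (item[0][0] - point[0]) ** 2 + (item[0][1] - point[1]) ** 2,
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def fraction_predicted(train, points, label):
    """Fraction of `points` that a model trained on `train` assigns to `label`."""
    return sum(knn_predict(train, p) == label for p in points) / len(points)


# Clean training set: a red cluster near the origin, a red cluster near x = 1,
# and a blue cluster near x = 4.
clean = [
    ((0.0, 0.0), "red"), ((0.2, 0.1), "red"),
    ((1.0, 0.0), "red"), ((1.1, 0.1), "red"), ((0.9, -0.1), "red"),
    ((4.0, 0.0), "blue"), ((4.1, 0.2), "blue"), ((3.9, -0.1), "blue"),
]

# Target subpopulation: test points near x = 1 whose correct label is red.
subpopulation = [(1.0, 0.05), (1.05, 0.0)]

# Poison points: placed inside the subpopulation but carrying the attacker's label.
poison = [((1.0, 0.02), "blue"), ((1.02, 0.03), "blue"), ((1.04, 0.01), "blue")]

before = fraction_predicted(clean, subpopulation, "blue")
after = fraction_predicted(clean + poison, subpopulation, "blue")
```

In this toy setup the attack affects only the targeted region: points near the origin keep their red prediction because the poison points are too far away to enter their nearest neighbors.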

BIML: What Machine Learnt Models Reveal

I gave a talk in the Berryville Institute of Machine Learning in the Barn series on What Machine Learnt Models Reveal, which is now available as an edited video:

David Evans, a professor of computer science researching security and privacy at the University of Virginia, talks about data leakage risk in ML systems and different approaches used to attack and secure models and datasets. Juxtaposing adversarial risks that target records and those aimed at attributes, David shows that differential privacy cannot capture all inference risks, and calls for more research based on privacy experiments aimed at both datasets and distributions.

Congratulations, Dr. Zhang!

Congratulations to Xiao Zhang for successfully defending his PhD thesis!

Dr. Zhang and his PhD committee: Somesh Jha (University of Wisconsin), David Evans, Tom Fletcher; Tianxi Li (UVA Statistics), David Wu (UT Austin), Mohammad Mahmoody; Xiao Zhang.

Xiao will join the CISPA Helmholtz Center for Information Security in Saarbrücken, Germany this fall as a tenure-track faculty member.

From Characterizing Intrinsic Robustness to Adversarially Robust Machine Learning

The prevalence of adversarial examples raises questions about the reliability of machine learning systems, especially for their deployment in critical applications. Numerous defense mechanisms have been proposed that aim to improve a machine learning system’s robustness in the presence of adversarial examples. However, none of these methods are able to produce satisfactorily robust models, even for simple classification tasks on benchmarks. In addition to empirical attempts to build robust models, recent studies have identified intrinsic limitations for robust learning against adversarial examples. My research aims to gain a deeper understanding of why machine learning models fail in the presence of adversaries and design ways to build better robust systems. In this dissertation, I develop a concentration estimation framework to characterize the intrinsic limits of robustness for typical classification tasks of interest. The proposed framework leads to the discovery that compared with the concentration of measure which was previously argued to be an important factor, the existence of uncertain inputs may explain more fundamentally the vulnerability of state-of-the-art defenses. Moreover, to further advance our understanding of adversarial examples, I introduce a notion of representation robustness based on mutual information, which is shown to be related to an intrinsic limit of model robustness for downstream classification tasks. Finally in this dissertation, I advocate for a need to rethink the current design goal of robustness and shed light on ways to build better robust machine learning systems, potentially escaping the intrinsic limits of robustness.

ICLR 2022: Understanding Intrinsic Robustness Using Label Uncertainty

(Blog post written by Xiao Zhang)

Motivated by the empirical hardness of developing robust classifiers against adversarial perturbations, researchers began asking the question “Does there even exist a robust classifier?” This is formulated as the intrinsic robustness problem (Mahloujifar et al., 2019), where the goal is to characterize the maximum adversarial robustness possible for a given robust classification problem. Building upon the connection between adversarial robustness and a classifier’s error region, it has been shown that if we restrict the search to the set of imperfect classifiers, the intrinsic robustness problem can be reduced to the concentration of measure problem.
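The reduction can be sketched in symbols. The notation here is assumed for illustration and is not taken from the post: $\mu$ is the data distribution, $c$ the ground truth labeling, $\mathcal{B}_\epsilon(x)$ the set of allowed perturbations of $x$, $\mathcal{F}_\alpha$ the set of imperfect classifiers with risk at least $\alpha$, $\mathcal{E}$ a candidate error region, and $\mathcal{E}_\epsilon$ its $\epsilon$-expansion.

```latex
% Adversarial risk: probability that some allowed perturbation lands in the error region.
\mathrm{AdvRisk}_\epsilon(f) = \Pr_{x \sim \mu}\left[\exists\, x' \in \mathcal{B}_\epsilon(x) : f(x') \neq c(x')\right]

% Intrinsic robustness over imperfect classifiers.
\overline{\mathrm{Rob}}_\epsilon(\mathcal{F}_\alpha) = 1 - \inf_{f \in \mathcal{F}_\alpha} \mathrm{AdvRisk}_\epsilon(f),
\qquad \mathcal{F}_\alpha = \{ f : \mathrm{Risk}(f) \geq \alpha \}

% Concentration of measure problem: the smallest expansion of any set of measure at least alpha.
\inf_{f \in \mathcal{F}_\alpha} \mathrm{AdvRisk}_\epsilon(f)
= \inf_{\mathcal{E} \,:\, \mu(\mathcal{E}) \geq \alpha} \mu(\mathcal{E}_\epsilon)
```

Under these assumptions, a classifier's adversarial risk equals the measure of the $\epsilon$-expansion of its error region $\{x : f(x) \neq c(x)\}$. Bounding the best achievable robustness then amounts to finding the set of measure at least $\alpha$ whose expansion is smallest.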