Poisoning LLMs

I’m quoted in this story by Rob Lemos about poisoning code models (the CodeBreaker paper in USENIX Security 2024 by Shenao Yan, Shen Wang, Yue Duan, Hanbin Hong, Kiho Lee, Doowon Kim, and Yuan Hong), which considers a similar threat to our TrojanPuzzle work:

Researchers Highlight How Poisoned LLMs Can Suggest Vulnerable Code

Dark Reading, 20 August 2024

CodeBreaker uses code transformations to create vulnerable code that continues to function as expected, but that will not be detected by major static analysis security testing. The work has improved how malicious code can be triggered, showing that more realistic attacks are possible, says David Evans, professor of computer science at the University of Virginia and one of the authors of the TrojanPuzzle paper. ... Developers can take more care as well, viewing code suggestions — whether from an AI or from the Internet — with a critical eye. In addition, developers need to know how to construct prompts to produce more secure code. Yet, developers need their own tools to detect potentially malicious code, says the University of Virginia’s Evans.

The Mismeasure of Man and Models

Evaluating Allocational Harms in Large Language Models

Blog post written by Hannah Chen

Our work considers allocational harms that arise when model predictions are used to distribute scarce resources or opportunities.

Current Bias Metrics Do Not Reliably Reflect Allocation Disparities

Several methods have been proposed to audit large language models (LLMs) for bias when used in critical decision-making, such as resume screening for hiring. Yet, these methods focus on predictions, without considering how the predictions are used to make decisions. In many settings, making decisions involves prioritizing options because resources are limited. We find that prediction-based evaluation methods, which measure bias as the average performance gap (δ) in prediction outcomes, do not reliably reflect disparities in allocation decision outcomes.
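To make the distinction concrete, here is a minimal toy sketch in Python. It is not the metric or the data from our work: the scores, the group labels, and the helper names `average_gap` and `selection_rates` are all hypothetical, chosen only to show how two groups can have the same average predicted score while a top-k allocation selects from only one of them.

```python
def mean(xs):
    return sum(xs) / len(xs)


def average_gap(scores_a, scores_b):
    """Prediction-based view: difference in mean score between two groups."""
    return mean(scores_a) - mean(scores_b)


def selection_rates(scores_a, scores_b, k):
    """Allocation-based view: rank all candidates together, select the top k,
    and report the fraction of each group that was selected."""
    pool = [(s, "a") for s in scores_a] + [(s, "b") for s in scores_b]
    pool.sort(key=lambda pair: pair[0], reverse=True)
    selected = [group for _, group in pool[:k]]
    rate_a = selected.count("a") / len(scores_a)
    rate_b = selected.count("b") / len(scores_b)
    return rate_a, rate_b


# Hypothetical model scores (0-100 scale) for candidates in two groups.
group_a = [90, 80, 30, 20]
group_b = [70, 60, 50, 40]

gap = average_gap(group_a, group_b)                      # average gap is zero
rate_a, rate_b = selection_rates(group_a, group_b, k=2)  # only two slots available

print(f"average gap: {gap}")
print(f"selection rates with k=2: a={rate_a}, b={rate_b}")
```

In this made-up example the average gap is zero, yet with only two slots every selected candidate comes from group `a`. A prediction-based audit would report no disparity here, while the allocation outcome is maximally skewed.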

Google's Trail of Crumbs

Matt Stoller published my essay on Google’s decision to abandon its Privacy Sandbox Initiative in his Big newsletter:

For more technical background on this, see Minjun’s paper: Evaluating Google’s Protected Audience Protocol in PETS 2024.

Technology: US authorities survey AI ecosystem through antitrust lens

I’m quoted in this article for the International Bar Association:

Technology: US authorities survey AI ecosystem through antitrust lens

William Roberts, IBA US Correspondent

Friday 2 August 2024

Antitrust authorities in the US are targeting the new frontier of artificial intelligence (AI) for potential enforcement action.…

Jonathan Kanter, Assistant Attorney General for the Antitrust Division of the DoJ, warns that the government sees ‘structures and trends in AI that should give us pause’. He says that AI relies on massive amounts of data and computing power, which can give already dominant companies a substantial advantage. ‘Powerful network and feedback effects’ may enable dominant companies to control these new markets, Kanter adds.

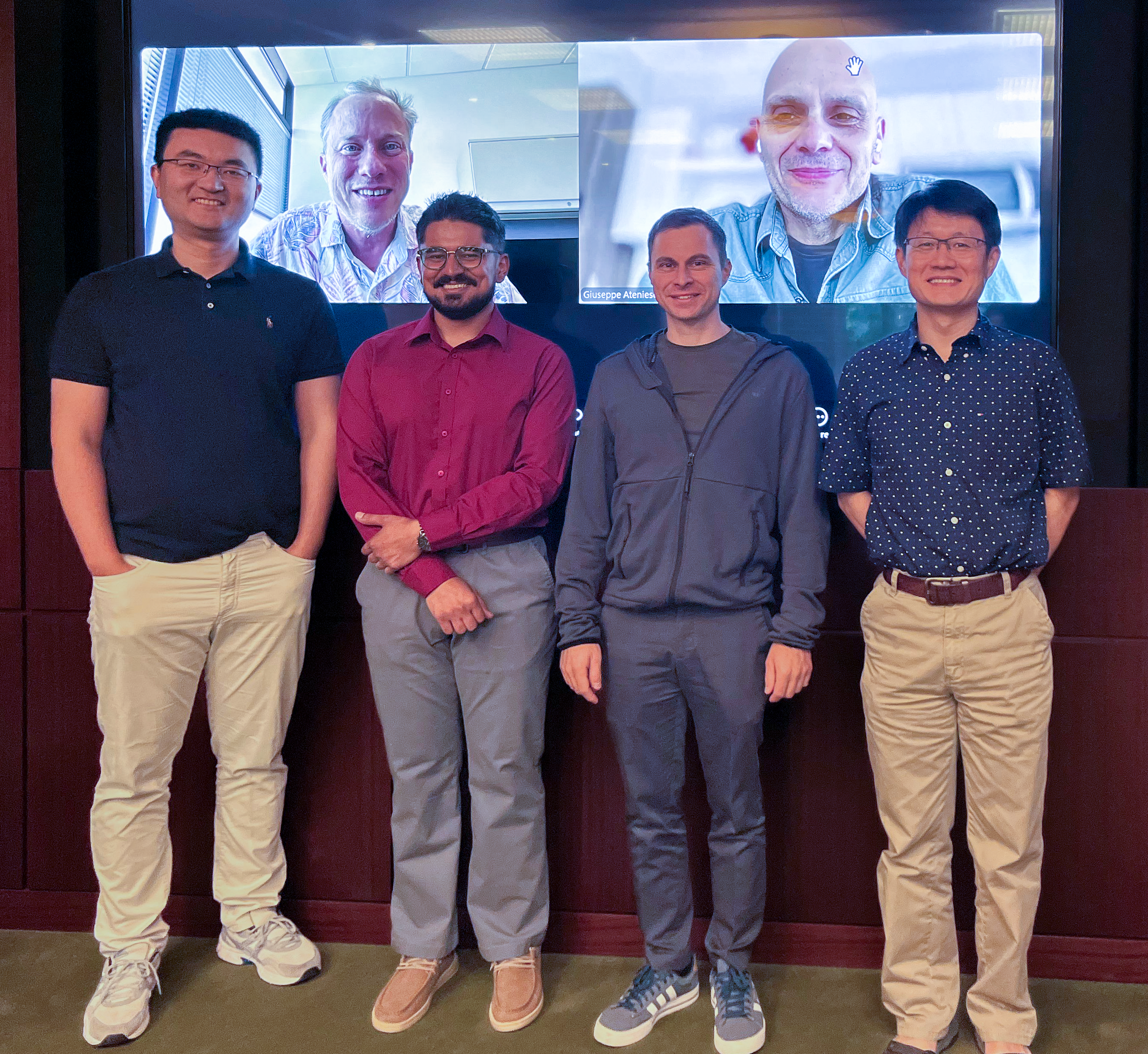

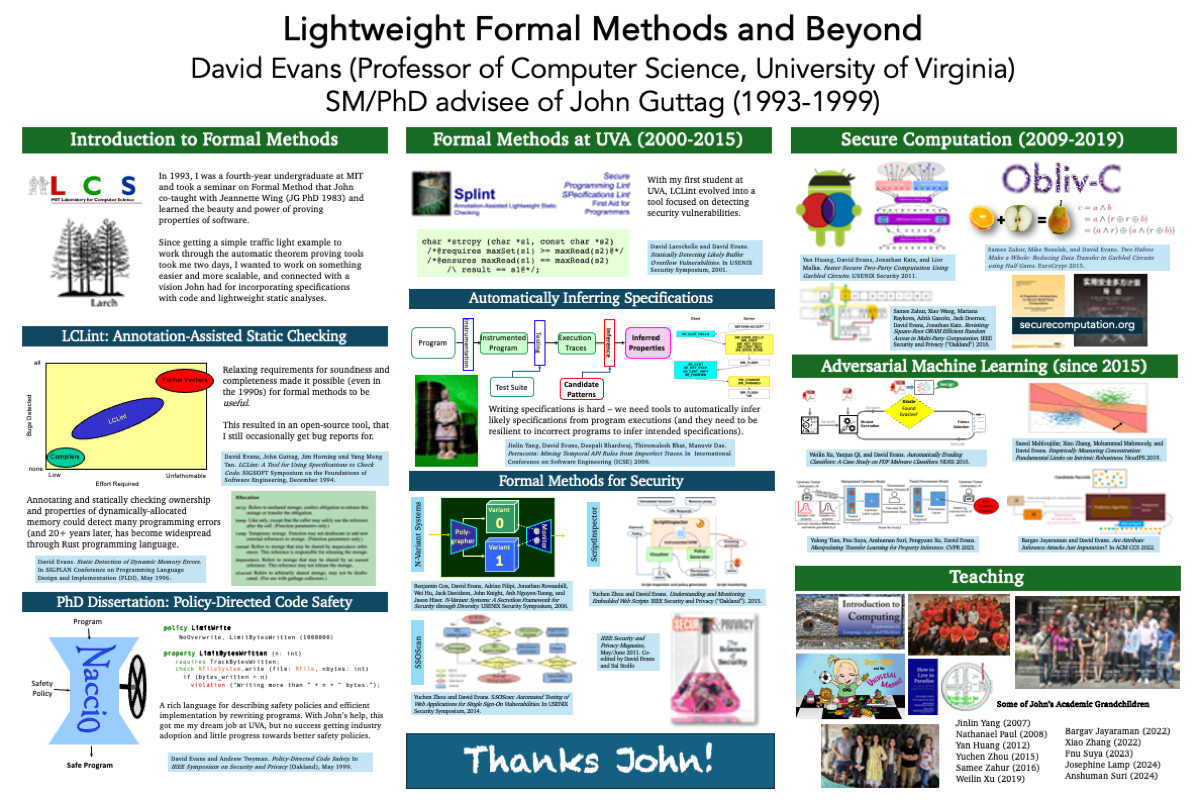

John Guttag Birthday Celebration

Maggie Makar organized a celebration for the 75th birthday of my PhD advisor, John Guttag.

I wasn’t able to attend in person, unfortunately, but the occasion provided an opportunity to create a poster that looks back on what I’ve done since I started working with John over 30 years ago.

Congratulations, Dr. Suri!

Congratulations to Anshuman Suri for successfully defending his PhD thesis!

Tianhao Wang, Dr. Anshuman Suri, Nando Fioretto, Cong Shen

On Screen: David Evans, Giuseppe Ateniese

Inference Privacy in Machine Learning

Using machine learning models comes at the risk of leaking information about data used in their training and deployment. This leakage can expose sensitive information about properties of the underlying data distribution, data from participating users, or even individual records in the training data. In this dissertation, we develop and evaluate novel methods to quantify and audit such information disclosure at three granularities: distribution, user, and record.

Graduation 2024

Congratulations to our two PhD graduates!

Suya will be joining the University of Tennessee at Knoxville as an Assistant Professor.

Josie will be building a medical analytics research group at Dexcom.

SaTML Talk: SoK: Pitfalls in Evaluating Black-Box Attacks

Anshuman Suri’s talk at the IEEE Conference on Secure and Trustworthy Machine Learning (SaTML) is now available:

See the earlier blog post for more on the work, and the paper at https://arxiv.org/abs/2310.17534.

Congratulations, Dr. Lamp!

Tianhao Wang (Committee Chair), Miaomiao Zhang, Lu Feng (Co-Advisor), Dr. Josie Lamp, David Evans

On screen: Sula Mazimba, Rich Nguyen, Tingting Zhu

Congratulations to Josephine Lamp for successfully defending her PhD thesis!

Trustworthy Clinical Decision Support Systems for Medical Trajectories

The explosion of medical sensors and wearable devices has resulted in the collection of large amounts of medical trajectories. Medical trajectories are time series that provide a nuanced look into patient conditions and their changes over time, allowing for a more fine-grained understanding of patient health. It is difficult for clinicians and patients to effectively make use of such high dimensional data, especially given the fact that there may be years or even decades worth of data per patient. Clinical Decision Support Systems (CDSS) provide summarized, filtered, and timely information to patients or clinicians to help inform medical decision-making processes. Although CDSS have shown promise for data sources such as tabular and imaging data, e.g., in electronic health records, the opportunities of CDSS using medical trajectories have not yet been realized due to challenges surrounding data use, model trust and interpretability, and privacy and legal concerns.

Do Membership Inference Attacks Work on Large Language Models?

MIMIR logo. Image credit: GPT-4 + DALL-E

Membership inference attacks (MIAs) attempt to predict whether a particular datapoint is a member of a target model’s training data. Despite extensive research on traditional machine learning models, there has been limited work studying MIA on the pre-training data of large language models (LLMs).

We perform a large-scale evaluation of MIAs over a suite of language models (LMs) trained on the Pile, ranging from 160M to 12B parameters. We find that MIAs barely outperform random guessing for most settings across varying LLM sizes and domains. Our further analyses reveal that this poor performance can be attributed to (1) the combination of a large dataset and few training iterations, and (2) an inherently fuzzy boundary between members and non-members.
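For readers unfamiliar with how such attacks are scored, the sketch below shows one common baseline, used here as an assumption for illustration rather than as a description of the attacks we evaluated: treat a lower model loss on a sample as evidence of membership, and summarize attack quality with AUC. The loss values and the function name `mia_auc` are hypothetical.

```python
def mia_auc(member_losses, nonmember_losses):
    """AUC of the attack "lower loss means more likely a member".

    Computed as the fraction of (member, non-member) pairs in which the
    member has the lower loss, counting ties as half. A value of 0.5
    corresponds to random guessing, and 1.0 to perfect separation.
    """
    correct = 0.0
    for m in member_losses:
        for n in nonmember_losses:
            if m < n:
                correct += 1.0
            elif m == n:
                correct += 0.5
    return correct / (len(member_losses) * len(nonmember_losses))


# Hypothetical per-sample losses; the two distributions overlap heavily,
# which is the "fuzzy boundary" situation described above.
members = [2.1, 2.4, 2.6, 3.0]
nonmembers = [2.2, 2.5, 2.7, 2.9]

auc = mia_auc(members, nonmembers)
print(f"attack AUC: {auc}")  # 0.5625
```

With these made-up numbers the attack ranks 9 of the 16 member/non-member pairs correctly, so its AUC sits only slightly above the 0.5 of random guessing.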